How can I self-host an LLM?

Asked on 2024-07-30


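To self-host a large language model (LLM) on your own machine or server, you can adapt the workflow that the WWDC sessions describe for hosting a Swift service. Those sessions cover Swift server deployment rather than LLMs specifically, so each step below pairs the Swift approach with its machine-learning equivalent: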

- Set Up Your Development Environment:

  - Install the necessary tools for your development environment. For Swift, install the Swift toolchain. For machine learning models, you will typically need Python and an ML library such as PyTorch or TensorFlow.

- Build and Test Locally:

  - Develop your service locally and test it to ensure it works as expected. For Swift, use the `swift build` command to compile your service. For machine learning models, use Python scripts that load the model and run test prompts against it.


- Cross-Compile for Target Environment:

  - If your service needs to run on a different operating system than the one you build on (e.g., building on macOS for a Linux server), you can cross-compile it. For Swift, the fully static Linux SDK lets you specify Linux as the target and statically link the necessary libraries, producing a binary that runs on the server without extra runtime dependencies. Python-based model code is not cross-compiled; instead, install matching versions of Python and your ML libraries on the target machine.

- Deploy to Target Environment:

  - Copy the compiled binary or model to your target environment. For Swift, copy the binary to the Linux server. For machine learning models, copy the model weights, along with the code that loads them, to the server that will host the model.

- Run and Test on Target Environment:

  - Start your service on the target machine and confirm it is running correctly. For Swift, start the service from the terminal and send requests to it. For machine learning models, wrap the model in a web server framework such as Flask or FastAPI so it can be queried over HTTP, then test it by sending HTTP requests.
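The setup step can be sanity-checked with a short script before you go further. This is a minimal sketch, assuming a Python-based model stack: it reports whether PyTorch (`torch`) or TensorFlow (`tensorflow`) is installed without importing them. `check_environment`, `CANDIDATE_PACKAGES`, and the `MIN_PYTHON` threshold are hypothetical names and values chosen for illustration.

```python
import importlib.util
import sys

# Libraries named in the setup step; you typically need only one of them.
CANDIDATE_PACKAGES = ("torch", "tensorflow")
# Assumption: adjust to the minimum version your chosen libraries support.
MIN_PYTHON = (3, 8)


def check_environment(packages=CANDIDATE_PACKAGES, min_python=MIN_PYTHON):
    """Report which packages are importable, without actually importing them."""
    if sys.version_info < min_python:
        found = ".".join(map(str, sys.version_info[:3]))
        raise RuntimeError(f"Python {min_python[0]}.{min_python[1]}+ required, found {found}")
    # find_spec returns None when a top-level package is not installed.
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    status = check_environment()
    for name, installed in status.items():
        print(f"{name}: {'installed' if installed else 'missing'}")
    if not any(status.values()):
        print("Install PyTorch or TensorFlow before continuing.")
```

Running the same script on the target server is a quick way to confirm the deployment machine matches your development setup.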
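The serve-and-test step for a model can be sketched with Python's standard library. The answer names Flask or FastAPI; this sketch swaps in `http.server` so it runs with no extra packages. `generate` is a hypothetical placeholder that only echoes the prompt; in a real deployment it would call your loaded model. The `/generate` route and the `prompt`/`completion` JSON fields are likewise assumptions for illustration.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; echoes the prompt."""
    return f"echo: {prompt}"


class GenerateHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Only the hypothetical /generate route is served.
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            prompt = json.loads(self.rfile.read(length))["prompt"]
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "expected JSON body with a 'prompt' field")
            return
        payload = json.dumps({"completion": generate(prompt)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):  # silence per-request logging
        pass


def start_server(host: str = "127.0.0.1", port: int = 0) -> ThreadingHTTPServer:
    """Serve on a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer((host, port), GenerateHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def query(server: ThreadingHTTPServer, prompt: str) -> str:
    """Send one test request, as you would against the deployed service."""
    host, port = server.server_address[:2]
    req = urllib.request.Request(
        f"http://{host}:{port}/generate",
        data=json.dumps({"prompt": prompt}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())["completion"]


if __name__ == "__main__":
    srv = start_server()
    print(query(srv, "Hello"))  # prints "echo: Hello"
    srv.shutdown()
    srv.server_close()
```

Binding to `127.0.0.1` keeps the sketch reachable only from the same machine; to test a deployed server you would point the request at that server's address instead of starting a local instance.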

For more detailed steps on cross-compiling and deploying a Swift service, you can refer to the session "What’s new in Swift" at the 09:20 mark.

For building a service using Swift on the server, you can refer to the session "Explore the Swift on Server ecosystem" at the 02:25 mark.

If you want to optimize and run machine learning models on Apple silicon, you can refer to the session "Bring your machine learning and AI models to Apple silicon" at the 01:00 mark.

Relevant Sessions:

Explore the Swift on Server ecosystem

Swift is a great language for writing your server applications, and powers critical services across Apple’s cloud products. We’ll explore tooling, delve into the Swift server package ecosystem, and demonstrate how to interact with databases and add observability to applications.

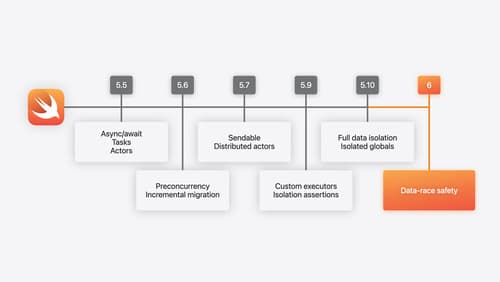

What’s new in Swift

Join us for an update on Swift. We’ll briefly go through a history of Swift over the past decade, and show you how the community has grown through workgroups, expanded the package ecosystem, and increased platform support. We’ll introduce you to a new language mode that achieves data-race safety by default, and a language subset that lets you run Swift on highly constrained systems. We’ll also explore some language updates including noncopyable types, typed throws, and improved C++ interoperability.

Bring your machine learning and AI models to Apple silicon

Learn how to optimize your machine learning and AI models to leverage the power of Apple silicon. Review model conversion workflows to prepare your models for on-device deployment. Understand model compression techniques that are compatible with Apple silicon, and at what stages in your model deployment workflow you can apply them. We’ll also explore the tradeoffs between storage size, latency, power usage and accuracy.
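The storage-size side of this compression tradeoff can be estimated with simple arithmetic: weight storage is roughly parameter count × bits per weight ÷ 8 bytes. This is a back-of-envelope sketch, assuming weights dominate storage; it ignores activations, the KV cache, and file-format overhead, and the 7-billion-parameter model is an illustrative size, not one taken from the session.

```python
def weight_storage_gib(num_params: int, bits_per_weight: float) -> float:
    """Approximate storage for model weights alone, in GiB (2**30 bytes).

    Ignores activations, KV cache, and file-format overhead.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    params = 7_000_000_000  # illustrative 7B-parameter model (assumption)
    for bits in (32, 16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_storage_gib(params, bits):.1f} GiB")
```

For the illustrative 7B model this gives roughly 26.1, 13.0, 6.5, and 3.3 GiB at 32, 16, 8, and 4 bits per weight, which is why lower-precision weights often decide whether a model fits on a given machine at all.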