How to use Llama on device?

Asked on 2024-07-25


To run Llama on device, you can follow these steps, as outlined in the WWDC24 sessions:

- Model Preparation and Integration:

  - Convert your model into the Core ML format, which is optimized for execution on Apple silicon. This lets your app take advantage of Apple silicon's unified memory and its CPU, GPU, and Neural Engine for low-latency, efficient compute. For more details, see the session Bring your machine learning and AI models to Apple silicon.

- Using ExecuTorch:

  - Build your app, then choose the model to use along with its corresponding tokenizer. For example, you can format your queries with the Llama 2 prompt template. The model then runs locally on your device through ExecuTorch, PyTorch's on-device inference runtime. For a practical demonstration, see the session Train your machine learning and AI models on Apple GPUs.

- Running Models on Device:

  - You can run a wide array of models on Apple devices, including large language models such as Llama. Getting a model ready to run in your app takes a few steps. For more information, see the session Explore machine learning on Apple platforms.

- Core ML Integration:

  - Core ML makes it easy to integrate and use your model in your app. It is tightly integrated with Xcode and offers a unified API for on-device inference across a wide range of machine learning and AI model types. For a detailed guide, see the session Deploy machine learning and AI models on-device with Core ML.
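The Llama 2 prompt template mentioned in the ExecuTorch step wraps each user turn in `[INST] ... [/INST]` markers. It folds an optional system prompt into the first turn inside `<<SYS>>` tags. The sketch below builds that string in Python, following the chat format Meta published for Llama 2. `format_llama2_prompt` is a hypothetical helper, not an ExecuTorch or Core ML API, and the demo app shown in the session may already apply the template for you.

```python
# Special markers from the Llama 2 chat format published by Meta.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"
BOS, EOS = "<s>", "</s>"


def format_llama2_prompt(user_message, system_prompt=None, history=()):
    """Build a Llama 2 chat prompt string (hypothetical helper).

    history: sequence of (user, assistant) pairs from earlier turns.
    BOS/EOS are written as literal text here for readability; many
    tokenizers add them as special token IDs instead, so make sure
    they are not inserted twice.
    """
    turns = list(history) + [(user_message, None)]
    parts = []
    for i, (user, assistant) in enumerate(turns):
        user = user.strip()
        # The system prompt is folded into the first user turn.
        if i == 0 and system_prompt:
            user = f"{B_SYS}{system_prompt.strip()}{E_SYS}{user}"
        if assistant is None:
            # Final turn: left open so the model writes the reply.
            parts.append(f"{BOS}{B_INST} {user} {E_INST}")
        else:
            # Completed turn: closed with EOS.
            parts.append(f"{BOS}{B_INST} {user} {E_INST} {assistant.strip()} {EOS}")
    return "".join(parts)


if __name__ == "__main__":
    print(format_llama2_prompt(
        "What is Core ML?",
        system_prompt="You are a helpful assistant.",
    ))
```

Earlier turns are closed with `</s>`. The last turn is left open after `[/INST]` so the model generates the assistant's reply. The resulting string is what you would pass to the tokenizer you chose alongside the model.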

By following these steps, you can deploy and run Llama efficiently on your Apple device.

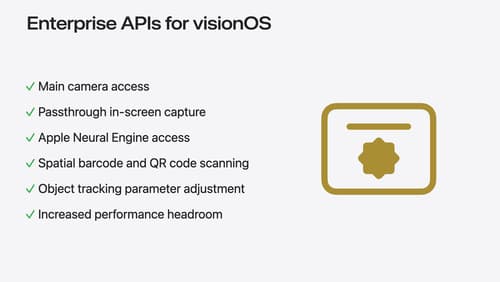

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

Deploy machine learning and AI models on-device with Core ML

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching, which can be used together to create compelling and private on-device experiences.
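Memory is often the first constraint when bringing a model the size of Llama onto a device, which is why memory optimizations like those this session describes matter. The rough back-of-envelope sketch below assumes that weight storage scales linearly with bits per weight. It counts weights only, and `weight_memory_gib` is a hypothetical helper, not a Core ML API.

```python
def weight_memory_gib(num_params, bits_per_weight):
    """Rough storage needed for model weights alone, in GiB.

    Ignores activations, the KV cache, and quantization metadata such as
    per-block scales, so a real on-device footprint will be higher.
    """
    return num_params * bits_per_weight / 8 / 2**30


if __name__ == "__main__":
    # Assumption: 7 billion parameters, a round figure near Llama 2 7B.
    params = 7_000_000_000
    for label, bits in [("float16", 16), ("int8", 8), ("int4", 4)]:
        print(f"{label:>8}: {weight_memory_gib(params, bits):5.2f} GiB")
```

Under these assumptions, the weights take about 13.04 GiB at float16 and about 3.26 GiB at 4 bits per weight. This fourfold reduction is the main reason low-bit weight formats are attractive for on-device LLMs.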

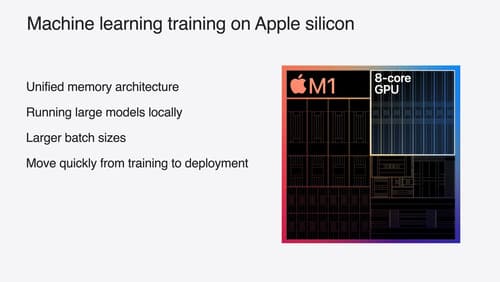

Train your machine learning and AI models on Apple GPUs

Learn how to train your models on Apple silicon with Metal for PyTorch, JAX, and TensorFlow. Take advantage of new attention operations and quantization support for improved transformer model performance on your devices.