How to do object tracking in visionOS?

Asked on 2024-07-26


To do object tracking in visionOS, you can follow these steps:

- Create a Reference Object: Use the Create ML app to create a reference object. This involves configuring a training session with your USDZ assets, training the ML model locally on your Mac, and saving the resulting reference object. The process is detailed in the session "Explore object tracking for visionOS".

- Load the Reference Object: Load the reference object at runtime, either from a file URL or from a bundle, using the new ARKit API shown in the session "Create enhanced spatial computing experiences with ARKit".

- Set Up Object Tracking Configuration: Optionally configure object tracking parameters such as the maximum number of objects to track, the tracking rate for static and dynamic objects, and the detection rate. These parameters are part of the enterprise APIs and are covered in the session "Introducing enterprise APIs for visionOS".

- Run the Object Tracking Provider: Run the object tracking provider on an ARKit session. Once the data provider enters the running state, start processing incoming tracking results. Results are delivered as object anchors, which include the position and orientation of each tracked item. This is explained in the session "Create enhanced spatial computing experiences with ARKit".

- Anchor Virtual Content: Use the object anchors to attach virtual content to the tracked real-world items, as described in the session "Explore object tracking for visionOS".
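The loading and running steps above can be sketched in Swift roughly as follows. This is a minimal sketch, not the sessions' own sample code: the function name `trackObject` and the parameter `referenceObjectURL` are hypothetical, and it assumes visionOS 2 or later, a reference object file already exported from Create ML, and an app that is allowed to run ARKit data providers. It uses the default tracking configuration and only prints each anchor's position where a real app would move its content.

```swift
import ARKit
import Foundation

/// Hypothetical helper: loads one reference object, runs object tracking,
/// and logs anchor updates until the surrounding task is cancelled.
func trackObject(referenceObjectURL: URL) async {
    // Object tracking is not available everywhere (for example, in the simulator).
    guard ObjectTrackingProvider.isSupported else {
        print("Object tracking is not supported in this environment.")
        return
    }

    do {
        // Load the reference object produced by Create ML from a file URL.
        let referenceObject = try await ReferenceObject(from: referenceObjectURL)

        // Create the data provider and run it on an ARKit session.
        let provider = ObjectTrackingProvider(referenceObjects: [referenceObject])
        let session = ARKitSession()
        try await session.run([provider])

        // Tracking results arrive as object anchor updates.
        for await update in provider.anchorUpdates {
            let anchor = update.anchor
            switch update.event {
            case .added, .updated:
                // The last column of the transform holds the anchor's position
                // relative to the app's origin.
                let position = anchor.originFromAnchorTransform.columns.3
                print("Anchor \(anchor.id) tracked: \(anchor.isTracked), position: \(position.x), \(position.y), \(position.z)")
            case .removed:
                print("Anchor \(anchor.id) removed")
            }
        }
    } catch {
        print("Object tracking failed: \(error)")
    }
}
```

A typical call site would start this from a `Task` when the immersive space opens, for example `Task { await trackObject(referenceObjectURL: url) }`, and cancel that task when the space closes. To anchor virtual content, an app would apply `originFromAnchorTransform` to its own entity in the `.added` and `.updated` cases instead of printing.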

By following these steps, you can implement object tracking in your visionOS app and attach interactive content to real-world objects.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.

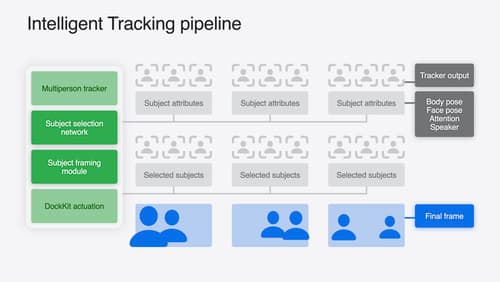

What’s new in DockKit

Discover how intelligent tracking in DockKit allows for smoother transitions between subjects. We will cover what intelligent tracking is, how it uses an ML model to select and track subjects, and how you can use it in your app.

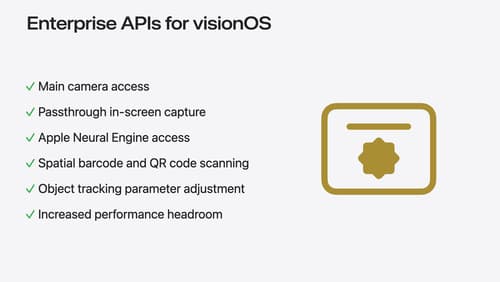

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.