How to build models for deployment on iPhones

Asked on 2024-07-31

To build models for deployment on iPhones, you can follow a structured workflow that involves three main phases: training, preparation, and integration. Here’s a detailed breakdown of each phase:

Training:

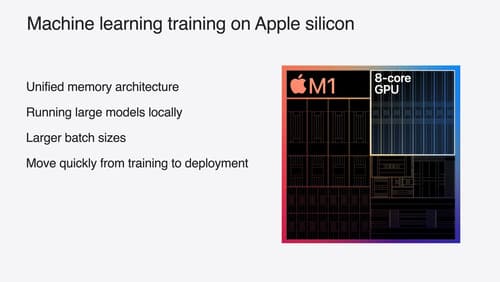

- Define Model Architecture and Train: Start by defining the model architecture and training it on appropriate training data. On a Mac, you can take advantage of Apple silicon and its unified memory architecture to train models with popular libraries such as PyTorch, TensorFlow, and JAX. For more details, see the session “Train your machine learning and AI models on Apple GPUs”.
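As a rough illustration of the training phase, the sketch below trains a tiny PyTorch model on the Apple GPU through the Metal (`mps`) backend when it is available, and falls back to the CPU otherwise. The model, the synthetic data, and the names `select_device` and `train_tiny_model` are hypothetical and only stand in for a real architecture and dataset; it assumes PyTorch is installed.

```python
import importlib.util


def select_device() -> str:
    """Return "mps" when PyTorch can use the Apple GPU, otherwise "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed, so there is nothing to accelerate
    import torch

    return "mps" if torch.backends.mps.is_available() else "cpu"


def train_tiny_model(steps: int = 100):
    """Train a toy regression model (hypothetical) and return (model, final loss)."""
    import torch
    from torch import nn

    device = torch.device(select_device())

    # Placeholder architecture: replace with the real model definition.
    model = nn.Sequential(nn.Linear(4, 16), nn.ReLU(), nn.Linear(16, 1)).to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.05)
    loss_fn = nn.MSELoss()

    # Synthetic training data: the target is the sum of the four inputs.
    x = torch.randn(256, 4, device=device)
    y = x.sum(dim=1, keepdim=True)

    last_loss = float("nan")
    for _ in range(steps):
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        last_loss = loss.item()
    return model, last_loss
```

Calling `train_tiny_model()` on a Mac with Apple silicon should place the model and tensors on the `mps` device; on other machines the same code runs on the CPU.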

Preparation:

- Convert and Optimize: Once the model is trained, convert it to the Core ML format using Core ML Tools (the `coremltools` Python package). This step also optimizes the model for Apple hardware using compression techniques such as quantization, palettization, and pruning. Core ML Tools provides utilities to convert and optimize models for use with Apple frameworks, so that they execute efficiently on Apple silicon. For more information, see the session “Bring your machine learning and AI models to Apple silicon”.
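A minimal sketch of the preparation phase, assuming a trained PyTorch model and `coremltools` 7 or later: it traces the model, converts it to an ML program, optionally applies 8-bit linear weight quantization, and saves the result. The function names, the input name `"input"`, and the package path are hypothetical choices for illustration, and quantization is only one of the available compression options.

```python
def ensure_mlpackage_suffix(path: str) -> str:
    """ML programs are saved as .mlpackage bundles, so append the suffix if missing."""
    return path if path.endswith(".mlpackage") else path + ".mlpackage"


def convert_to_coreml(torch_model, example_input, package_path: str, quantize: bool = True):
    """Convert a PyTorch model to Core ML and save it (sketch, not a full pipeline)."""
    import torch
    import coremltools as ct
    import coremltools.optimize.coreml as cto

    # Trace on the CPU in inference mode so the converter sees a static graph.
    torch_model = torch_model.cpu().eval()
    example_input = example_input.cpu()
    traced = torch.jit.trace(torch_model, example_input)

    mlmodel = ct.convert(
        traced,
        inputs=[ct.TensorType(name="input", shape=tuple(example_input.shape))],
        convert_to="mlprogram",
    )

    if quantize:
        # Post-training weight quantization; coremltools may leave very small
        # weight tensors uncompressed under its default settings.
        op_config = cto.OpLinearQuantizerConfig(mode="linear_symmetric")
        config = cto.OptimizationConfig(global_config=op_config)
        mlmodel = cto.linear_quantize_weights(mlmodel, config=config)

    mlmodel.save(ensure_mlpackage_suffix(package_path))
    return mlmodel
```

For example, `convert_to_coreml(model, torch.zeros(1, 4), "TinyModel")` would write a `TinyModel.mlpackage` bundle that can be added to an Xcode project. Whether quantization is acceptable depends on the accuracy the model retains afterwards, so it is worth re-evaluating the compressed model.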

Integration:

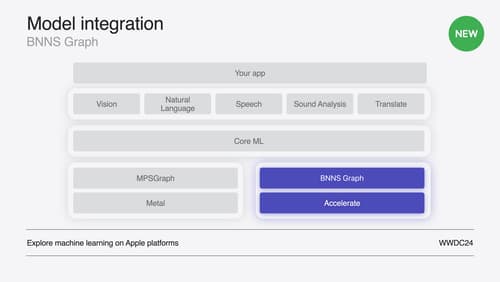

- Integrate with Apple Frameworks: Finally, write code that uses Apple frameworks to load and execute the prepared model within your app. Core ML is the primary framework for deploying models on Apple devices, and it automatically segments models across the CPU, GPU, and Neural Engine to maximize hardware utilization. For a detailed look at this phase, watch the session “Deploy machine learning and AI models on-device with Core ML”.
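The in-app integration itself is written against Core ML inside the app, but before that step it can help to check the converted model on a Mac. The sketch below is one possible pre-integration check using the `coremltools` Python API: it loads a saved model with all compute units enabled and runs a single prediction, and a small helper compares two output vectors. The function names, the package path, and the feature name are hypothetical, and prediction through `coremltools` only works on macOS.

```python
from typing import Sequence


def max_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest element-wise absolute difference between two equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    return max((abs(x - y) for x, y in zip(a, b)), default=0.0)


def predict_on_mac(package_path: str, features: dict):
    """Load a saved Core ML model and run one prediction (macOS only)."""
    import coremltools as ct  # imported lazily because prediction requires macOS

    # ComputeUnit.ALL lets Core ML choose among the CPU, GPU, and Neural Engine.
    model = ct.models.MLModel(package_path, compute_units=ct.ComputeUnit.ALL)
    return model.predict(features)


# Hypothetical usage on a Mac, assuming a model saved as TinyModel.mlpackage
# with a single input named "input":
#   import numpy as np
#   outputs = predict_on_mac(
#       "TinyModel.mlpackage",
#       {"input": np.zeros((1, 4), dtype=np.float32)},
#   )
```

Comparing the Core ML outputs with the original framework's outputs via `max_abs_diff` gives a quick signal of whether conversion or compression changed the model's behavior more than expected.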

Relevant Sessions:

- Explore machine learning on Apple platforms

- Support real-time ML inference on the CPU

- Train your machine learning and AI models on Apple GPUs

- Bring your machine learning and AI models to Apple silicon

- Deploy machine learning and AI models on-device with Core ML

By following these steps and utilizing the resources provided in the sessions, you can effectively build and deploy machine learning models on iPhones.

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU and share how it provides real-time guarantees such as no runtime memory allocation and single-threaded running for audio or signal processing models.

Train your machine learning and AI models on Apple GPUs

Learn how to train your models on Apple Silicon with Metal for PyTorch, JAX and TensorFlow. Take advantage of new attention operations and quantization support for improved transformer model performance on your devices.

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.