What’s new in AVFoundation?

Asked on 2024-07-31


What's New in AVFoundation

1. Spatial Media Integration

- Recording Spatial Video: AVFoundation now lets apps record spatial video on iPhone 15 Pro, which uses its wide and ultra wide cameras as a stereo pair. Recording follows the same data flow as 2D video: an AVCaptureSession routes data from an AVCaptureDeviceInput to an AVCaptureMovieFileOutput, which writes the movie to disk. The additional steps are selecting a device format that supports spatial video capture and enabling spatial video capture on the movie file output.

- Playback of Spatial Media: AVFoundation can now detect and load spatial media assets. Alongside this, PhotosPicker and PhotoKit can filter for and present spatial assets.
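The recording and detection steps above can be sketched in Swift. This is a minimal sketch, assuming the iOS 18 SDK and an iPhone 15 Pro class device; `makeSpatialVideoCaptureSession`, `hasStereoMultiviewVideo`, and `SpatialCaptureError` are hypothetical names. It relies on `isSpatialVideoCaptureSupported` on the device format and `isSpatialVideoCaptureSupported` / `isSpatialVideoCaptureEnabled` on `AVCaptureMovieFileOutput`. For detection it treats a track with the `.containsStereoMultiviewVideo` media characteristic as a sign of spatial (MV-HEVC) video, which is a simplification.

```swift
import AVFoundation

// Hypothetical error type for this sketch.
enum SpatialCaptureError: Error {
    case cameraUnavailable
    case spatialFormatUnavailable
    case cannotAddInputOrOutput
}

// Builds a session that records spatial video to disk (assumes iOS 18).
func makeSpatialVideoCaptureSession() throws -> (AVCaptureSession, AVCaptureMovieFileOutput) {
    // Spatial video pairs the wide and ultra wide cameras, exposed as the dual wide camera.
    guard let camera = AVCaptureDevice.default(.builtInDualWideCamera, for: .video, position: .back) else {
        throw SpatialCaptureError.cameraUnavailable
    }
    // Find a device format that can capture spatial video.
    guard let spatialFormat = camera.formats.first(where: { $0.isSpatialVideoCaptureSupported }) else {
        throw SpatialCaptureError.spatialFormatUnavailable
    }

    // Same data flow as 2D recording: device input -> session -> movie file output.
    let session = AVCaptureSession()
    let input = try AVCaptureDeviceInput(device: camera)
    let output = AVCaptureMovieFileOutput()
    guard session.canAddInput(input), session.canAddOutput(output) else {
        throw SpatialCaptureError.cannotAddInputOrOutput
    }
    session.addInput(input)
    session.addOutput(output)

    // Set the format after adding the input so the session preset does not override it.
    try camera.lockForConfiguration()
    camera.activeFormat = spatialFormat
    camera.unlockForConfiguration()

    // Extra step versus 2D: opt the movie file output into spatial capture.
    if output.isSpatialVideoCaptureSupported {
        output.isSpatialVideoCaptureEnabled = true
    }
    return (session, output)
}

// Reports whether an asset has a stereo multiview (MV-HEVC) video track.
func hasStereoMultiviewVideo(_ asset: AVAsset) async throws -> Bool {
    let tracks = try await asset.loadTracks(withMediaCharacteristic: .containsStereoMultiviewVideo)
    return !tracks.isEmpty
}
```

From here, recording works as in 2D: call `startRunning()` on the session off the main thread, then `startRecording(to:recordingDelegate:)` on the returned output.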

2. Media Performance Metrics

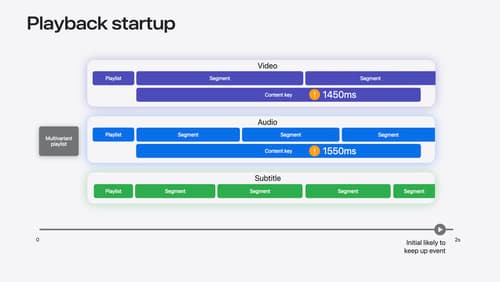

- New Metrics System: In iOS 18, AVFoundation introduces a new way to gather client-side media performance metrics, delivered as events (AVMetricEvent subclasses). These events help triage issues such as slow playback start and stalling. Previously, this information was scattered across access logs, error logs, and various AVPlayer notifications.
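As a rough sketch of subscribing to these events, assume the iOS 18 SDK, where `AVPlayerItem.metrics(forType:)` returns an async sequence of one `AVMetricEvent` subclass. The function name `monitorPlaybackMetrics` is hypothetical, and the event properties it reads (`timeTaken` on the likely-to-keep-up event, `date` and `mediaTime` on `AVMetricEvent`) are assumptions worth checking against the SDK headers.

```swift
import AVFoundation

// Streams startup and stall metric events for one HLS player item (assumes iOS 18).
// Runs until the surrounding task is cancelled or the sequences finish.
func monitorPlaybackMetrics(for item: AVPlayerItem) async {
    // Each call returns an async sequence of a single AVMetricEvent subclass.
    let likelyToKeepUpEvents = item.metrics(forType: AVMetricPlayerItemLikelyToKeepUpEvent.self)
    let stallEvents = item.metrics(forType: AVMetricPlayerItemStallEvent.self)

    // Consume both sequences concurrently: a for-await loop keeps waiting for new
    // events, so a second loop placed after the first would not start in time.
    await withTaskGroup(of: Void.self) { group in
        group.addTask {
            for await event in likelyToKeepUpEvents {
                // timeTaken: how long the item took to become likely to keep up.
                print("Likely to keep up after \(event.timeTaken) s (at \(event.date))")
            }
        }
        group.addTask {
            for await event in stallEvents {
                print("Stall at media time \(event.mediaTime.seconds) s (at \(event.date))")
            }
        }
    }
}
```

Run it in a `Task` created alongside playback and cancel that task when the item is torn down; in a real app, the `print` calls would forward events to an analytics backend.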

3. Multiview Video Playback in visionOS

- Multiview Architecture: In visionOS 2, AVFoundation supports multiview video playback, letting people watch several video streams at once, such as different camera angles of the same event. It integrates with AVKit and RealityKit to provide a high-quality, customizable viewing experience.
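On the AVFoundation side, multiview playback of related streams needs several players whose rate and current time stay in sync. A minimal sketch, assuming the visionOS 2 / iOS 18 SDK: each `AVPlayer`'s `playbackCoordinator` joins a shared `AVPlaybackCoordinationMedium`. `makeCoordinatedPlayers` and the stream URLs are hypothetical, and the AVKit and RealityKit presentation layers are not shown.

```swift
import AVFoundation

// Creates one player per stream and links them through a shared coordination medium,
// so rate and time changes on one player are mirrored on the others (assumes visionOS 2 / iOS 18).
func makeCoordinatedPlayers(for urls: [URL]) throws -> (players: [AVPlayer], medium: AVPlaybackCoordinationMedium) {
    let medium = AVPlaybackCoordinationMedium()
    let players = urls.map { AVPlayer(url: $0) }
    for player in players {
        // Each player's coordinator joins the same medium.
        try player.playbackCoordinator.coordinate(using: medium)
    }
    // Return the medium as well so the caller can hold a reference to it.
    return (players, medium)
}
```

With the players coordinated, calling `play()` on any one of them should start the whole group together.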

Relevant Sessions

- Build compelling spatial photo and video experiences

- Discover media performance metrics in AVFoundation

- Explore multiview video playback in visionOS

Chapter Markers

Build compelling spatial photo and video experiences

- 0:00 Introduction

- 1:07 Types of stereoscopic experiences

- 4:13 Tour of the new APIs

- 13:14 Deep dive into spatial media formats

Discover media performance metrics in AVFoundation

- 0:00 Introduction

- 1:33 What are events?

- 5:36 Subscribing to events

Explore multiview video playback in visionOS

- 0:00 Introduction

- 1:55 Introducing multiview

- 4:59 Design a multiview experience

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Discover Swift enhancements in the Vision framework

The Vision framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.

Discover media performance metrics in AVFoundation

Discover how you can monitor, analyze, and improve user experience with the new media performance APIs. Explore how to monitor AVPlayer performance for HLS assets using different AVMetricEvents, and learn how to use these metrics to understand and triage player performance issues.