What is Object Capture?

Asked on 2024-07-31


Object Capture is an Apple technology for creating 3D models of real-world objects with an iPhone or iPad. You photograph an object from many different angles, and those images are then processed with photogrammetry to reconstruct a detailed 3D model. Object Capture works best on movable objects in a controlled indoor environment, where you have room to walk all the way around the object and capture every angle.
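The reconstruction step can be sketched with RealityKit's `PhotogrammetrySession`. This is a minimal sketch, not Apple's sample code. It assumes a folder of images has already been captured (for example, by an Object Capture session on iPhone or iPad) and that it runs on a device where `PhotogrammetrySession.isSupported` is true. The helper names `progressLine` and `reconstructModel`, as well as the folder and output URLs, are hypothetical.

```swift
import Foundation
import RealityKit

/// Hypothetical helper: formats a progress fraction (0.0...1.0) for logging.
func progressLine(fraction: Double) -> String {
    String(format: "Progress: %.0f%%", fraction * 100)
}

/// Hypothetical helper: reconstructs a USDZ model from a folder of images.
/// Assumption: `imagesFolder` holds photos of one object taken from many angles.
func reconstructModel(imagesFolder: URL, outputModel: URL) async throws {
    // Photogrammetry only runs on supported hardware.
    guard PhotogrammetrySession.isSupported else {
        print("Photogrammetry is not supported on this device.")
        return
    }

    var configuration = PhotogrammetrySession.Configuration()
    configuration.sampleOrdering = .unordered   // photos are not a sequential video
    configuration.featureSensitivity = .normal

    let session = try PhotogrammetrySession(input: imagesFolder,
                                            configuration: configuration)

    // Request a single USDZ model at reduced detail.
    try session.process(requests: [
        .modelFile(url: outputModel, detail: .reduced)
    ])

    // Consume status messages until processing finishes.
    for try await output in session.outputs {
        switch output {
        case .requestProgress(_, let fractionComplete):
            print(progressLine(fraction: fractionComplete))
        case .requestComplete(_, let result):
            print("Request complete: \(result)")
        case .requestError(_, let error):
            throw error
        case .processingComplete:
            return
        default:
            break  // ignore other informational outputs
        }
    }
}
```

A caller would invoke it from an async context, for example `try await reconstructModel(imagesFolder: imagesURL, outputModel: modelURL)`, where both URLs are placeholders. The `.reduced` detail level is chosen here only because it keeps the output small; other detail levels trade file size for fidelity.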

Apple has also introduced an area mode for Object Capture. It supports capturing uneven outdoor terrain, objects you can't walk all the way around, and complex surfaces. The resulting models are well suited to 3D environments or artistic projects on Apple Vision Pro.

For more details, see the session "Discover area mode for Object Capture."

Relevant Sessions

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on visionOS. Discover improvements in hand tracking and plane detection that can make your spatial experiences more intuitive.

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.

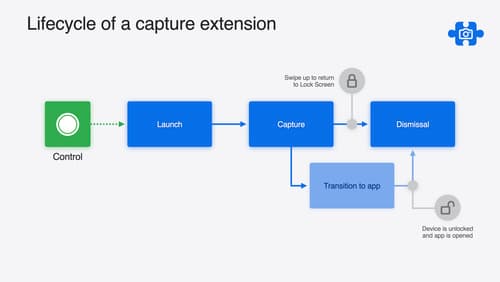

Build a great Lock Screen camera capture experience

Find out how the LockedCameraCapture API can help you bring your capture application’s most useful information directly to the Lock Screen. Examine the API’s features and functionality, learn how to get started creating a capture extension, and find out how that extension behaves when the device is locked.