How do I create an HLS live stream on iOS?

Asked on 2024-07-27


To create an HLS live stream on iOS, you can follow these general steps:

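- Set Up Your Streaming Server: You need a web server that delivers HLS (HTTP Live Streaming) content over ordinary HTTP. Either the server segments your incoming video into small chunks and generates a playlist file that points to them, or, as in the steps below, the app produces the segments and playlist and the server simply hosts them.

- Capture Video: Use AVFoundation to capture video from the device's camera. Create an `AVCaptureSession` and configure it with a camera input and an `AVCaptureVideoDataOutput`, which delivers each frame as a sample buffer while capture is running. (`AVCaptureMovieFileOutput` writes a finished movie file, which does not suit a live stream.)

- Encode Video: Encode the captured video as H.264 (HLS also supports HEVC). You can use `AVAssetWriter` and `AVAssetWriterInput` to encode the video.

- Segment Video: Split the encoded video into short segments; Apple's HLS Authoring Specification recommends a 6-second target duration. On iOS 14 and later, `AVAssetWriter` can do this itself: set its `outputFileTypeProfile` to `.mpeg4AppleHLS` and its `preferredOutputSegmentInterval` to the segment length, and it hands each fragmented MPEG-4 segment to its delegate. (`AVAssetExportSession` exports whole files and does not produce HLS segments.) Alternatively, the server can do the segmenting.

- Create Playlist: Create an M3U8 playlist file that lists the video segments. The HLS player reads this file to find and stream the segments. For a live stream, keep appending new segments, advance `EXT-X-MEDIA-SEQUENCE` as old segments leave the window, and omit `EXT-X-ENDLIST` until the broadcast ends.

- Upload Segments and Playlist: Upload each new segment, then the updated playlist, to your streaming server.

- Play the Stream: Use `AVPlayer` to play the HLS stream in your iOS app. You can initialize `AVPlayer` with the URL of the M3U8 playlist.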
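The Capture Video step above can be sketched as follows. This is a minimal example, assuming a back wide-angle camera, a 720p preset, and that the app has already obtained camera permission (`NSCameraUsageDescription`); `CameraCapture` and its `onVideoSample` callback are hypothetical names, not an Apple API. Audio capture (an `AVCaptureAudioDataOutput`) is left out for brevity.

```swift
import AVFoundation

/// Hypothetical helper: runs a capture session and hands every video frame to a callback.
final class CameraCapture: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {
    enum CaptureError: Error { case noCamera, cannotAddInput, cannotAddOutput }

    let session = AVCaptureSession()
    private let videoOutput = AVCaptureVideoDataOutput()
    private let sessionQueue = DispatchQueue(label: "camera.session")
    private let frameQueue = DispatchQueue(label: "camera.frames")

    /// Called on `frameQueue` for each captured frame, e.g. to feed an encoder.
    var onVideoSample: ((CMSampleBuffer) -> Void)?

    /// Adds the camera input and a sample-buffer output to the session.
    func configure() throws {
        session.beginConfiguration()
        defer { session.commitConfiguration() }
        session.sessionPreset = .hd1280x720

        guard let camera = AVCaptureDevice.default(.builtInWideAngleCamera,
                                                   for: .video, position: .back) else {
            throw CaptureError.noCamera
        }
        let input = try AVCaptureDeviceInput(device: camera)
        guard session.canAddInput(input) else { throw CaptureError.cannotAddInput }
        session.addInput(input)

        // For live capture, drop frames that arrive late instead of queueing them.
        videoOutput.alwaysDiscardsLateVideoFrames = true
        videoOutput.setSampleBufferDelegate(self, queue: frameQueue)
        guard session.canAddOutput(videoOutput) else { throw CaptureError.cannotAddOutput }
        session.addOutput(videoOutput)
    }

    /// startRunning() blocks until capture starts, so keep it off the main thread.
    func start() {
        sessionQueue.async { self.session.startRunning() }
    }

    func captureOutput(_ output: AVCaptureOutput,
                       didOutput sampleBuffer: CMSampleBuffer,
                       from connection: AVCaptureConnection) {
        onVideoSample?(sampleBuffer)
    }
}
```

Keeping a separate queue for `startRunning()` and for frame delivery means a slow session start never delays frames that are already arriving.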
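For the Encode Video and Segment Video steps, a sketch along these lines uses the segment output that `AVAssetWriter` gained in iOS 14. The class name `HLSSegmentWriter`, the `onSegment` callback, the 6-second interval, and the 1280x720 size are assumptions for illustration; starting the writer at the first frame's timestamp is one reasonable choice, not the only one.

```swift
import AVFoundation
import UniformTypeIdentifiers

/// Hypothetical helper: encodes frames as H.264 and lets AVAssetWriter cut them
/// into fragmented MPEG-4 HLS segments, which arrive through its delegate.
final class HLSSegmentWriter: NSObject, AVAssetWriterDelegate {
    let writer: AVAssetWriter
    private let videoInput: AVAssetWriterInput
    private var started = false

    /// Receives each segment's bytes, its type (.initialization or .separable),
    /// and its reported duration in seconds, if the report includes one.
    var onSegment: ((Data, AVAssetSegmentType, Double?) -> Void)?

    init(segmentDuration: Double = 6, width: Int = 1280, height: Int = 720) {
        // Delivering segments to a delegate requires init(contentType:), not an output URL.
        let writer = AVAssetWriter(contentType: .mpeg4Movie)
        writer.outputFileTypeProfile = .mpeg4AppleHLS
        writer.preferredOutputSegmentInterval = CMTime(seconds: segmentDuration,
                                                       preferredTimescale: 600)
        let settings: [String: Any] = [
            AVVideoCodecKey: AVVideoCodecType.h264,
            AVVideoWidthKey: width,
            AVVideoHeightKey: height,
            // Segments begin at keyframes, so cap the keyframe spacing at the segment length.
            AVVideoCompressionPropertiesKey: [AVVideoMaxKeyFrameIntervalDurationKey: segmentDuration],
        ]
        let input = AVAssetWriterInput(mediaType: .video, outputSettings: settings)
        input.expectsMediaDataInRealTime = true  // frames arrive live from the camera
        if writer.canAdd(input) { writer.add(input) }

        self.writer = writer
        self.videoInput = input
        super.init()
        writer.delegate = self
    }

    /// Feed each captured frame here.
    func append(_ sampleBuffer: CMSampleBuffer) {
        if !started {
            let start = CMSampleBufferGetPresentationTimeStamp(sampleBuffer)
            // Segment boundaries are measured from the first frame's timestamp.
            writer.initialSegmentStartTime = start
            guard writer.startWriting() else { return }
            writer.startSession(atSourceTime: start)
            started = true
        }
        if videoInput.isReadyForMoreMediaData {
            videoInput.append(sampleBuffer)
        }
    }

    /// Flushes the final segment when the broadcast ends.
    func finish(completion: @escaping @Sendable () -> Void) {
        guard started else { return completion() }
        videoInput.markAsFinished()
        writer.finishWriting(completionHandler: completion)
    }

    func assetWriter(_ writer: AVAssetWriter, didOutputSegmentData segmentData: Data,
                     segmentType: AVAssetSegmentType, segmentReport: AVAssetSegmentReport?) {
        let duration = segmentReport?.trackReports.first?.duration.seconds
        onSegment?(segmentData, segmentType, duration)
    }
}
```

Calling `append(_:)` from the camera's sample-buffer callback feeds the writer. The first delegate callback carries the `.initialization` segment, which the playlist references with `EXT-X-MAP`; each later `.separable` segment becomes one playlist entry.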
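For the Create Playlist step, the following Foundation-only sketch renders a sliding-window live media playlist for fMP4 segments. `HLSSegment`, `LivePlaylist`, the file names, the 6-second target duration, and the five-segment window are hypothetical choices; the tags themselves (`EXT-X-TARGETDURATION`, `EXT-X-MEDIA-SEQUENCE`, `EXT-X-MAP`, `EXTINF`, `EXT-X-ENDLIST`) come from the HLS specification, RFC 8216.

```swift
import Foundation

/// One media segment listed in the playlist (hypothetical type for this sketch).
struct HLSSegment {
    let uri: String       // path relative to the playlist, e.g. "segment12.m4s"
    let duration: Double  // seconds
}

/// A sliding-window live media playlist for fMP4 segments.
struct LivePlaylist {
    let initSegmentURI: String
    /// Fixed for the life of the stream; each EXTINF, rounded to the
    /// nearest integer, must not exceed it.
    let targetDuration: Int
    let windowSize: Int
    private(set) var segments: [HLSSegment] = []
    private(set) var mediaSequence = 0
    private(set) var hasEnded = false

    init(initSegmentURI: String, targetDuration: Int = 6, windowSize: Int = 5) {
        self.initSegmentURI = initSegmentURI
        self.targetDuration = targetDuration
        self.windowSize = windowSize
    }

    /// Adds a new segment and drops the oldest once the window is full.
    mutating func append(_ segment: HLSSegment) {
        segments.append(segment)
        if segments.count > windowSize {
            segments.removeFirst()
            mediaSequence += 1  // sequence number of the first segment still listed
        }
    }

    /// Marks the broadcast as finished so players stop reloading the playlist.
    mutating func end() { hasEnded = true }

    func render() -> String {
        var lines = [
            "#EXTM3U",
            "#EXT-X-VERSION:7",  // EXT-X-MAP in a media playlist needs version 6 or later
            "#EXT-X-TARGETDURATION:\(targetDuration)",
            "#EXT-X-MEDIA-SEQUENCE:\(mediaSequence)",
            "#EXT-X-MAP:URI=\"\(initSegmentURI)\"",  // the initialization segment
        ]
        for segment in segments {
            lines.append(String(format: "#EXTINF:%.3f,", segment.duration))
            lines.append(segment.uri)
        }
        if hasEnded { lines.append("#EXT-X-ENDLIST") }
        return lines.joined(separator: "\n") + "\n"
    }
}
```

Players keep reloading a playlist that lacks `EXT-X-ENDLIST`, so each call to `render()` after `append(_:)` produces the next version to upload.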
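How the Upload Segments and Playlist step works depends on your server; HLS itself does not define an upload protocol. The sketch below assumes a hypothetical ingest endpoint that accepts HTTP `PUT` requests; `makeUploadRequest` and `upload` are illustrative names.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

/// Builds a PUT request for one HLS file at a hypothetical ingest endpoint.
func makeUploadRequest(baseURL: URL, fileName: String) -> URLRequest {
    var request = URLRequest(url: baseURL.appendingPathComponent(fileName))
    request.httpMethod = "PUT"
    // RFC 8216 names this Content-Type for playlists; "video/mp4" is a common
    // choice for fMP4 segments (an assumption about what the server expects).
    let type = fileName.hasSuffix(".m3u8") ? "application/vnd.apple.mpegurl" : "video/mp4"
    request.setValue(type, forHTTPHeaderField: "Content-Type")
    return request
}

/// Uploads one file and reports nil on a 2xx response, otherwise an error.
func upload(_ data: Data, as fileName: String, to baseURL: URL,
            completion: @escaping (Error?) -> Void) {
    let request = makeUploadRequest(baseURL: baseURL, fileName: fileName)
    let task = URLSession.shared.uploadTask(with: request, from: data) { _, response, error in
        if let error = error {
            completion(error)
            return
        }
        guard let http = response as? HTTPURLResponse, (200..<300).contains(http.statusCode) else {
            completion(URLError(.badServerResponse))
            return
        }
        completion(nil)
    }
    task.resume()
}
```

Upload each new segment before the playlist that lists it, so a player reloading the playlist never requests a segment that is not there yet.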
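For the Play the Stream step, a minimal sketch: `makeLivePlayer` wraps the playlist URL in an `AVPlayerItem`, and on iOS the player can be shown with `AVPlayerViewController`. The function names and the example URL are hypothetical.

```swift
import AVFoundation
#if canImport(UIKit)
import AVKit
import UIKit
#endif

/// Creates a player for an HLS playlist URL (live or on demand).
func makeLivePlayer(playlistURL: URL) -> AVPlayer {
    let item = AVPlayerItem(url: playlistURL)
    return AVPlayer(playerItem: item)
}

#if canImport(UIKit)
/// Presents the system playback UI and starts playback once it is on screen.
@MainActor
func presentLiveStream(_ playlistURL: URL, from presenter: UIViewController) {
    let controller = AVPlayerViewController()
    controller.player = makeLivePlayer(playlistURL: playlistURL)
    presenter.present(controller, animated: true) {
        controller.player?.play()
    }
}
#endif
```

For example, `presentLiveStream(URL(string: "https://example.com/live/index.m3u8")!, from: self)` inside a view controller.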

For more detailed information on capturing and encoding video, you can refer to the session Build compelling spatial photo and video experiences (00:05:21) from WWDC 2024, which covers the basics of using AVFoundation for video capture and encoding.

Additionally, if you are interested in enhancing your HLS stream with interstitials (ads or other auxiliary content), you can check out the session Enhance ad experiences with HLS interstitials (00:00:57) for more information on how to insert interstitials into your HLS stream.
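As a rough illustration of what that session covers, an interstitial is scheduled in the media playlist with an `EXT-X-DATERANGE` tag whose `CLASS` is `com.apple.hls.interstitial`. The sketch below builds such a tag; the ID, date, and asset URL are hypothetical, and Apple's HLS documentation lists further attributes. RFC 8216 also requires a playlist that contains `EXT-X-DATERANGE` to contain at least one `EXT-X-PROGRAM-DATE-TIME` tag.

```swift
import Foundation

/// Builds an EXT-X-DATERANGE tag that schedules an HLS interstitial.
/// All values are caller-supplied; none are defaults from Apple.
func interstitialTag(id: String, start: Date, assetURI: String,
                     resumeOffset: Double? = nil) -> String {
    let formatter = ISO8601DateFormatter()
    formatter.formatOptions = [.withInternetDateTime, .withFractionalSeconds]
    var attributes = [
        "ID=\"\(id)\"",
        "CLASS=\"com.apple.hls.interstitial\"",
        "START-DATE=\"\(formatter.string(from: start))\"",
        // URI of the interstitial's own HLS asset, such as an ad.
        "X-ASSET-URI=\"\(assetURI)\"",
    ]
    if let offset = resumeOffset {
        // Where primary playback resumes, in seconds after START-DATE.
        attributes.append("X-RESUME-OFFSET=\(offset)")
    }
    return "#EXT-X-DATERANGE:" + attributes.joined(separator: ",")
}
```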

If you need more specific details or code examples, please let me know!

Relevant Sessions:

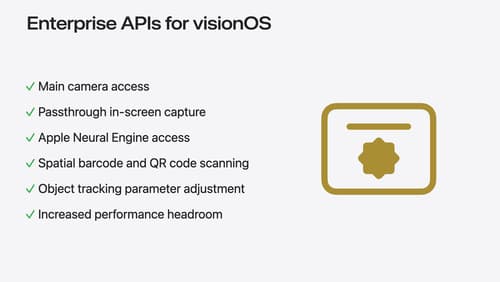

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

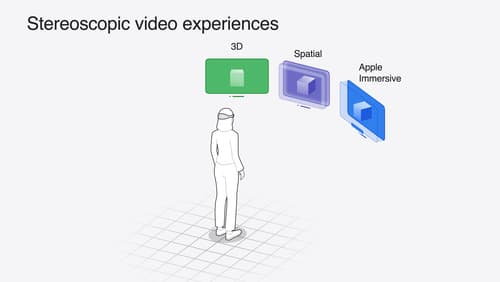

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.

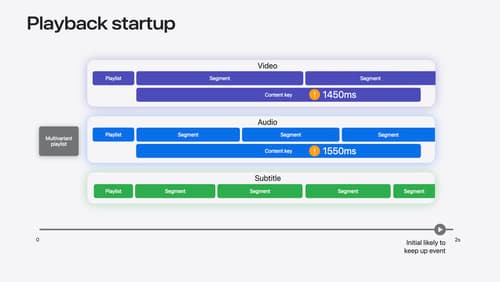

Discover media performance metrics in AVFoundation

Discover how you can monitor, analyze, and improve user experience with the new media performance APIs. Explore how to monitor AVPlayer performance for HLS assets using different AVMetricEvents, and learn how to use these metrics to understand and triage player performance issues.