What new features are in the visionOS 2 SDK?

Asked on 2024-07-31

The visionOS 2 SDK introduces several new features for developing spatial computing applications. Here are some of the key updates:

- Hand Positioning: Developers can now decide if the user's hands appear in front of or behind the content, offering more creative control over the app experience.

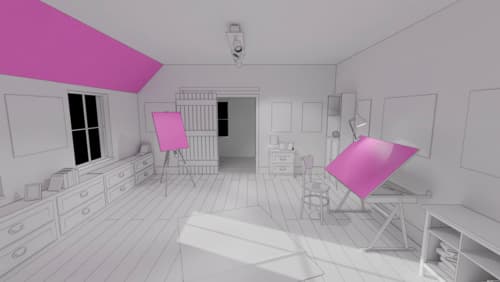

- Enhanced Scene Understanding: Scene understanding capabilities have been significantly extended. Planes can now be detected in all orientations, so objects can be anchored to a wider variety of surfaces. Additionally, room anchors describe the user's surroundings on a per-room basis, and user movement across rooms can be detected.

- Object Tracking API: A new object tracking API allows developers to attach content to individual objects found around the user. This enables attaching virtual content, like instructions, to physical objects for new dimensions of interactivity.

- Known Object Tracking: Enhanced known object tracking through parameter adjustment lets apps tune how they detect and track specific reference objects within their viewing area. The configurable parameters include the maximum number of objects tracked and the tracking rate for static and dynamic objects, so tracking can be optimized for different use cases.
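
The scene understanding and object tracking features above can be sketched with ARKit's data providers. This is a minimal, hedged sketch rather than the SDK's canonical usage: it assumes visionOS 2, an open immersive space, world-sensing permission, and a reference object file at a URL you supply. The function names (`monitorPlanes`, `startTracking`, and so on) and the numeric values are illustrative. The tracking configuration properties are given as presented in the enterprise APIs session, and the `trackingConfiguration` initializer is expected to require an enterprise entitlement, so check both against the current documentation.

```swift
import ARKit
import Foundation

// Planes in every orientation: `.slanted` joins `.horizontal` and `.vertical`.
func monitorPlanes(_ planes: PlaneDetectionProvider) async {
    for await update in planes.anchorUpdates {
        print("Plane \(update.event): \(update.anchor.alignment), \(update.anchor.classification)")
    }
}

// Room anchors: report whenever the room the user is currently in changes or updates.
func monitorRooms(_ rooms: RoomTrackingProvider) async {
    for await update in rooms.anchorUpdates where update.anchor.isCurrentRoom {
        print("Current room anchor: \(update.anchor.id)")
    }
}

// Object anchors: the transform can be used to place content on the physical object.
func monitorObjects(_ objects: ObjectTrackingProvider) async {
    for await update in objects.anchorUpdates {
        let anchor = update.anchor
        print("Object \(anchor.id) tracked: \(anchor.isTracked)")
        _ = anchor.originFromAnchorTransform // pose of the object in world space
    }
}

// Enterprise-only sketch: tune tracking parameters (values are placeholders).
func makeTunedObjectProvider(for referenceObjects: [ReferenceObject]) -> ObjectTrackingProvider {
    var config = ObjectTrackingProvider.TrackingConfiguration()
    config.maximumTrackableInstances = 15     // cap on objects tracked at once
    config.detectionRate = 15.0               // how often new objects are searched for
    config.stationaryObjectTrackingRate = 15.0
    config.movingObjectTrackingRate = 15.0
    return ObjectTrackingProvider(referenceObjects: referenceObjects,
                                  trackingConfiguration: config)
}

// Hypothetical entry point: runs all three providers in one session.
func startTracking(referenceObjectURL: URL) async throws {
    let session = ARKitSession()
    let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical, .slanted])
    let rooms = RoomTrackingProvider()
    let referenceObject = try await ReferenceObject(from: referenceObjectURL)
    let objects = ObjectTrackingProvider(referenceObjects: [referenceObject])

    try await session.run([planes, rooms, objects])

    // Each update stream is unbounded, so consume them concurrently.
    async let planeLoop: Void = monitorPlanes(planes)
    async let roomLoop: Void = monitorRooms(rooms)
    async let objectLoop: Void = monitorObjects(objects)
    _ = await (planeLoop, roomLoop, objectLoop)

    session.stop()
}
```

In an app, `startTracking(referenceObjectURL:)` would typically be called from a `Task` once the immersive space is open, and the print statements replaced with code that updates RealityKit entities from each anchor's transform.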

For more details, you can refer to the Platforms State of the Union session.

Relevant Sessions

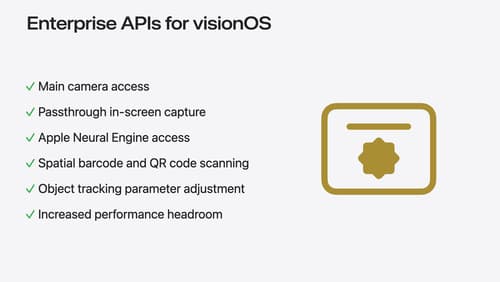

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.