What's new with Siri?

Asked on 2024-07-31


At WWDC 2024, Apple introduced several updates to Siri, driven by Apple Intelligence and new large language models. Here are the key highlights:

- Natural Language Processing: Siri can now understand and respond more naturally, even if you stumble over your words, thanks to advances in large language models (Bring your app to Siri).

- Contextual Awareness: Siri is now more contextually relevant and personal. It can understand what you're looking at on your screen and take appropriate actions (Bring your app to Siri).

- Semantic Search: Siri can perform semantic searches, meaning it understands what your query is about rather than only matching its literal words. For example, searching for "pets" will bring up related content like cats, dogs, and even snakes (Bring your app to Siri).

- App Integration: Siri can now invoke items from your app's menus and access text displayed in any app that uses the standard text systems, so users can reference and act on text visible on the screen (Platforms State of the Union).

-

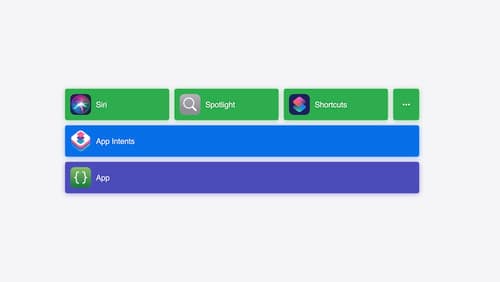

App Intents and SiriKit: Apple has enhanced the app intents framework and SiriKit, making it easier for developers to integrate their apps with Siri. New APIs called app intent domains have been introduced, which are collections of app intent-based APIs designed for specific functionalities like books, camera, or spreadsheets (Bring your app to Siri).

-

Spotlight API: Siri can now search data from your app using a new Spotlight API, enabling app entities to be included in its index. This allows for deeper and more natural access to your app's data and capabilities (Platforms State of the Union).
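To make the Spotlight API bullet more concrete, here is a minimal sketch of indexing app entities. It assumes the iOS 18 App Intents additions `IndexedEntity` and `CSSearchableIndex.indexAppEntities(_:priority:)` and a deployment target of iOS 18 or later; please check the exact signatures in Apple's documentation. `NoteEntity`, `NoteQuery`, `sampleNotes`, and `indexNotesInSpotlight()` are hypothetical names for illustration only, and the sketch relies on the protocol's default Spotlight attributes rather than customizing them.

```swift
import AppIntents
import CoreSpotlight
import Foundation

// Hypothetical entity representing a note in your app.
// Conforming to IndexedEntity (which refines AppEntity) makes it eligible for the Spotlight index.
struct NoteEntity: IndexedEntity {
    static let typeDisplayRepresentation: TypeDisplayRepresentation = "Note"
    static let defaultQuery = NoteQuery()

    let id: UUID
    let title: String

    // How the note is shown to people in system UI.
    var displayRepresentation: DisplayRepresentation {
        DisplayRepresentation(title: "\(title)")
    }
}

// Resolves identifiers (for example, from a Spotlight result) back into entities.
struct NoteQuery: EntityQuery {
    func entities(for identifiers: [NoteEntity.ID]) async throws -> [NoteEntity] {
        sampleNotes.filter { identifiers.contains($0.id) }
    }
}

// Hypothetical in-memory data standing in for the app's real store.
let sampleNotes: [NoteEntity] = [
    NoteEntity(id: UUID(), title: "Grocery list"),
    NoteEntity(id: UUID(), title: "Trip ideas"),
]

// Hypothetical helper: donate the entities to the default Spotlight index.
func indexNotesInSpotlight() async throws {
    try await CSSearchableIndex.default().indexAppEntities(sampleNotes)
}
```

In a real app you would call something like `try await indexNotesInSpotlight()` whenever the underlying data changes, so the index stays in sync with what the user can actually open.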

For a detailed overview, watch the session "Bring your app to Siri", starting at its "What's new with Siri" chapter.

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Bring your app to Siri

Learn how to use App Intents to expose your app’s functionality to Siri. Understand which intents are already available for your use, and how to create custom intents to integrate actions from your app into the system. We’ll also cover what metadata to provide, making your entities searchable via Spotlight, annotating onscreen references, and much more.

Bring your app’s core features to users with App Intents

Learn the principles of the App Intents framework, like intents, entities, and queries, and how you can harness them to expose your app’s most important functionality right where people need it most. Find out how to build deep integration between your app and the many system features built on top of App Intents, including Siri, controls and widgets, Apple Pencil, Shortcuts, the Action button, and more. Get tips on how to build your App Intents integrations efficiently to create the best experiences in every surface while still sharing code and core functionality.
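The three building blocks named above (intents, entities, and queries) can be sketched in a few lines. This is a minimal illustration, not Apple's sample code: `BookEntity`, `BookQuery`, `library`, and `OpenBookIntent` are hypothetical names, and the in-memory `library` array stands in for a real data store. It uses the long-standing `AppIntent`, `AppEntity`, and `EntityQuery` protocols from the App Intents framework.

```swift
import AppIntents
import Foundation

// Hypothetical entity: a book in a reading app.
struct BookEntity: AppEntity {
    static let typeDisplayRepresentation: TypeDisplayRepresentation = "Book"
    static let defaultQuery = BookQuery()

    let id: UUID
    let title: String

    var displayRepresentation: DisplayRepresentation {
        DisplayRepresentation(title: "\(title)")
    }
}

// Hypothetical in-memory library standing in for the app's real data.
let library: [BookEntity] = [
    BookEntity(id: UUID(), title: "Moby-Dick"),
    BookEntity(id: UUID(), title: "Middlemarch"),
]

// The query lets the system turn identifiers back into entities
// and offer suggestions when the user has not picked a specific book.
struct BookQuery: EntityQuery {
    func entities(for identifiers: [BookEntity.ID]) async throws -> [BookEntity] {
        library.filter { identifiers.contains($0.id) }
    }

    func suggestedEntities() async throws -> [BookEntity] {
        library
    }
}

// The intent describes one action the system can run on the app's behalf.
struct OpenBookIntent: AppIntent {
    static let title: LocalizedStringResource = "Open Book"
    static let openAppWhenRun: Bool = true

    @Parameter(title: "Book")
    var book: BookEntity

    @MainActor
    func perform() async throws -> some IntentResult {
        // Hypothetical: navigate the app's UI to `book` here.
        return .result()
    }
}
```

Keeping the entity and query separate from the intent means the same `BookEntity` can be reused by other intents, widgets, or Spotlight indexing without duplicating lookup logic.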