Can I access an LLM via the API?

Asked on 2024-07-31


Yes. Apple provides APIs and frameworks for deploying and running machine learning and AI models, including transformer-based models such as LLMs, on Apple devices. These WWDC sessions cover the topic:

- Deploy machine learning and AI models on-device with Core ML:
  - This session covers how to deploy and run machine learning and AI models efficiently on-device with Core ML. It introduces features such as MLTensor, stateful models, and multifunction models that simplify model integration and improve inference efficiency.

- Explore machine learning on Apple platforms:
  - This session surveys Apple's ML-powered APIs and tools such as Create ML for customizing models. It also covers deploying models on-device with Core ML and introduces frameworks such as Metal Performance Shaders (MPS) Graph and BNNS Graph for finer control over how ML tasks execute.

- Bring your machine learning and AI models to Apple silicon:
  - This session explains how to convert and optimize your models for Apple frameworks, taking advantage of Apple silicon's unified memory, CPU, GPU, and Neural Engine for efficient on-device ML workloads.
-

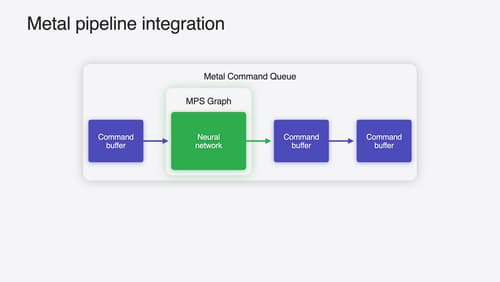

- Accelerate machine learning with Metal:
  - This session focuses on running transformer models more efficiently and introduces new MPS Graph features for executing ML models on the GPU.

Together, these sessions cover how to convert, optimize, and deploy machine learning models on Apple devices with Apple's APIs and frameworks.

Accelerate machine learning with Metal

Learn how to accelerate your machine learning transformer models with new features in Metal Performance Shaders Graph. We’ll also cover how to improve your model’s compute bandwidth and quality, and visualize it in the all-new MPSGraph viewer.

Deploy machine learning and AI models on-device with Core ML

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching, which can be used together to create compelling and private on-device experiences.

Bring your machine learning and AI models to Apple silicon

Learn how to optimize your machine learning and AI models to leverage the power of Apple silicon. Review model conversion workflows to prepare your models for on-device deployment. Understand model compression techniques that are compatible with Apple silicon, and at what stages in your model deployment workflow you can apply them. We’ll also explore the tradeoffs between storage size, latency, power usage, and accuracy.
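To make the storage-size side of that tradeoff concrete, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 7-billion-parameter language model and counts weight bytes only (parameters × bits per weight ÷ 8). It ignores quantization scales, palettization lookup tables, activations, and file-format overhead, so actual Core ML model sizes will differ.

```python
# Rough estimate of weight storage for a model at different bit widths.
# Assumption: size = parameter_count * bits_per_weight / 8. This ignores
# quantization scales, palettization lookup tables, and file-format overhead.


def weight_storage_gb(parameter_count: int, bits_per_weight: int) -> float:
    """Return approximate weight storage in gigabytes (10**9 bytes)."""
    total_bytes = parameter_count * bits_per_weight / 8
    return total_bytes / 10**9


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7B-parameter language model
    for label, bits in [("float16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>8}: ~{weight_storage_gb(params, bits):.1f} GB")
```

Running it prints about 14.0 GB, 7.0 GB, and 3.5 GB. Halving the bits per weight halves the weight footprint, but how much accuracy you give up depends on the model and the compression technique, which is the tradeoff the session examines.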