How do I use Audio Toolbox?

Asked on 2024-07-31


To use Audio Toolbox audio units, see the WWDC 2024 session "Enhance your spatial computing app with RealityKit audio". It walks through configuring an audio unit and using it within RealityKit. The key steps are:

- Instantiate the Audio Unit: Create an instance of the audio unit you want to use.

- Prepare Configuration: Set up the configuration for the audio generator controller.

- Create Output Format: Define the format for the output of the audio unit and set it on the audio unit's output bus.

- Allocate Render Resources: Allocate the necessary render resources for the audio unit.

- Capture Render Block: Capture the audio unit's internal render block.

- Configure Audio Generator Controller: Use the audio generator configuration to set up the audio generator controller.

- Call Render Block: Call the audio unit's internal render block on the audio data provided in the audio generator's callback.
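
The steps above can be sketched in Swift roughly as follows. This is a minimal sketch under stated assumptions, not the session's code: `attachGenerator`, `GeneratorSetupError`, and `assumedSampleRate` are hypothetical names, the audio unit is assumed to be instantiated already (the first step), and the RealityKit calls (`AudioGeneratorConfiguration(layoutTag:)`, `Entity.prepareAudio(configuration:_:)`) and the four-parameter callback shape are written from memory and should be checked against the current RealityKit documentation.

```swift
import AVFAudio
import AudioToolbox
import RealityKit

// Hypothetical error type for this sketch.
struct GeneratorSetupError: Error {}

// Assumption: the generator runs at 48 kHz; confirm the actual rate in the RealityKit docs.
let assumedSampleRate: Double = 48_000

/// Hypothetical helper: connects an already-instantiated audio unit to a RealityKit entity.
@MainActor
func attachGenerator(_ audioUnit: AUAudioUnit, to entity: Entity) throws -> AudioGeneratorController {
    // Prepare the configuration for the audio generator controller (mono layout assumed).
    let configuration = AudioGeneratorConfiguration(layoutTag: kAudioChannelLayoutTag_Mono)

    // Create the output format and set it on the audio unit's output bus.
    guard let format = AVAudioFormat(standardFormatWithSampleRate: assumedSampleRate, channels: 1) else {
        throw GeneratorSetupError()
    }
    try audioUnit.outputBusses[0].setFormat(format)

    // Allocate render resources before any rendering happens.
    try audioUnit.allocateRenderResources()

    // Capture the internal render block once, outside the audio callback.
    let renderBlock = audioUnit.internalRenderBlock

    // Configure the audio generator controller; its callback forwards to the render block.
    // Assumption: the callback receives (isSilence, timestamp, frameCount, outputData).
    return try entity.prepareAudio(configuration: configuration) { _, timestamp, frameCount, outputData in
        var flags = AudioUnitRenderActionFlags()
        // Output bus 0, no real-time events, no input pull block (generator only).
        return renderBlock(&flags, timestamp, frameCount, 0, outputData, nil, nil)
    }
}
```

Capturing the render block before the callback keeps property access out of the callback, which runs on the real-time audio thread. The caller would keep a reference to the returned controller for as long as the audio should keep rendering.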

For a more detailed walkthrough, watch the session "Enhance your spatial computing app with RealityKit audio", starting at 09:37.

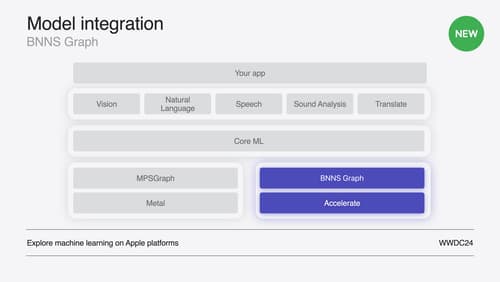

Additionally, if you are interested in real-time processing and machine learning with audio units, the session "Support real-time ML inference on the CPU" covers how to create an audio unit project that adopts BNNSGraph for real-time audio processing. You can start with the chapter "Real-time processing" (06:47).

Relevant Sessions:

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU and share how it provides real-time guarantees such as no runtime memory allocation and single-threaded running for audio or signal processing models.

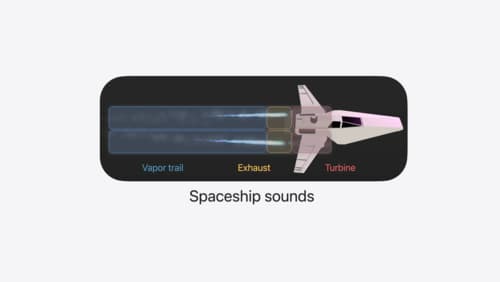

Enhance your spatial computing app with RealityKit audio

Elevate your spatial computing experience using RealityKit audio. Discover how spatial audio can make your 3D immersive experiences come to life. From ambient audio, reverb, to real-time procedural audio that can add character to your 3D content, learn how RealityKit audio APIs can help make your app more engaging.

Compose interactive 3D content in Reality Composer Pro

Discover how the Timeline view in Reality Composer Pro can bring your 3D content to life. Learn how to create an animated story in which characters and objects interact with each other and the world around them using inverse kinematics, blend shapes, and skeletal poses. We’ll also show you how to use built-in and custom actions, sequence your actions, apply triggers, and implement natural movements.