scan images

Asked on 2024-07-31

For scanning images, Apple's session Discover Swift enhancements in the Vision framework covers several relevant improvements. Key points:

- Barcode Scanning: You can configure the barcode detection request to look only for the symbologies you need, such as EAN-13, the format commonly used on grocery products. Limiting the search to relevant barcode types improves performance.

- Concurrency for Image Processing: To process many images efficiently, use Swift concurrency to analyze a batch in parallel. For example, a task group can process images concurrently while capping the number of tasks in flight, which keeps memory usage under control.

- Saliency Image Request: To crop images to their main subjects, use `GenerateObjectnessBasedSaliencyImageRequest`. This request identifies the main subjects in an image so you can build focused crops around them.

- Image Aesthetic Scores: The new `CalculateImageAestheticsScoresRequest` assesses image quality and helps find memorable photos by analyzing factors like blur and exposure. It can also help filter out utility images, such as screenshots or photos of receipts, which are rarely memorable.
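The barcode and aesthetics points can be sketched as follows. This is a minimal sketch, assuming the Swift-only Vision API that ships with iOS 18 and macOS 15 (`DetectBarcodesRequest` with its `symbologies` property, `CalculateImageAestheticsScoresRequest`, `perform(on:)` with a file URL, and the `overallScore`/`isUtility` fields of the aesthetics observation); verify these names against the current Vision documentation. The function names and the `isMemorable` keep-or-discard policy, including its 0.0 threshold, are hypothetical illustrations, not something the session prescribes. The Vision calls sit behind `#if canImport(Vision)` so the pure filtering logic also compiles on other platforms.

```swift
import Foundation
#if canImport(Vision)
import Vision
#endif

/// Hypothetical policy: keep a photo only if Vision did not flag it as a utility
/// image (screenshot, receipt, ...) and its overall score clears a threshold.
/// The 0.0 default is an arbitrary assumption, not an Apple recommendation.
func isMemorable(overallScore: Float, isUtility: Bool, threshold: Float = 0.0) -> Bool {
    !isUtility && overallScore >= threshold
}

#if canImport(Vision)
/// Hypothetical helper: returns the payloads of EAN-13 barcodes found in an image file.
@available(iOS 18.0, macOS 15.0, *)
func scanGroceryBarcodes(in url: URL) async throws -> [String] {
    // Restrict detection to EAN-13 so Vision does not search for other symbologies.
    var request = DetectBarcodesRequest()
    request.symbologies = [.ean13]
    let observations = try await request.perform(on: url)
    // payloadString is optional; drop barcodes whose payload could not be decoded as text.
    return observations.compactMap { $0.payloadString }
}

/// Hypothetical helper: scores one photo and applies the isMemorable policy above.
@available(iOS 18.0, macOS 15.0, *)
func shouldKeepPhoto(at url: URL) async throws -> Bool {
    let request = CalculateImageAestheticsScoresRequest()
    let observation = try await request.perform(on: url)
    return isMemorable(overallScore: observation.overallScore,
                       isUtility: observation.isUtility)
}
#endif
```

Keeping the decision in a plain function makes the threshold easy to tune or test without running Vision.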
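The concurrency point (a task group with a cap on concurrent tasks) can be sketched as a generic helper that keeps at most `maxConcurrent` child tasks running and starts a new one only when another finishes. `mapConcurrently` is a hypothetical name, and this is one common throttling pattern rather than the session's exact code; in an app, `operation` would wrap a Vision request for a single image URL.

```swift
/// Hypothetical helper: applies `operation` to every input with at most
/// `maxConcurrent` child tasks in flight, returning results in input order.
func mapConcurrently<Input: Sendable, Output: Sendable>(
    _ inputs: [Input],
    maxConcurrent: Int,
    operation: @escaping @Sendable (Input) async -> Output
) async -> [Output] {
    precondition(maxConcurrent > 0, "maxConcurrent must be positive")

    return await withTaskGroup(of: (Int, Output).self, returning: [Output].self) { group in
        var results = [Output?](repeating: nil, count: inputs.count)
        var nextIndex = 0

        // Seed the group with the first `maxConcurrent` inputs.
        while nextIndex < min(maxConcurrent, inputs.count) {
            let index = nextIndex
            let input = inputs[index]
            group.addTask {
                let output = await operation(input)
                return (index, output)  // tag the result with its input position
            }
            nextIndex += 1
        }

        // Each time a child finishes, store its result and start the next input,
        // so no more than `maxConcurrent` children ever run at once.
        while let finished = await group.next() {
            results[finished.0] = finished.1
            if nextIndex < inputs.count {
                let index = nextIndex
                let input = inputs[index]
                group.addTask {
                    let output = await operation(input)
                    return (index, output)
                }
                nextIndex += 1
            }
        }

        // Every slot was filled exactly once, so force-unwrapping is safe here.
        return results.map { $0! }
    }
}
```

Because each task returns its index with its output, results come back in input order even though tasks finish in any order. Choose `maxConcurrent` based on how much memory one image needs while it is being analyzed.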
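For the saliency point, the step that usually needs care is turning the detected subjects into a crop rectangle. The sketch below assumes the salient objects' bounding boxes have already been converted to `CGRect`s normalized to 0...1 with a lower-left origin (Vision's usual convention); `cropRect(covering:imageWidth:imageHeight:)` is a hypothetical helper, not part of Vision. It takes the union of all boxes, scales it to pixels, and flips the y-axis to the top-left origin that `CGImage.cropping(to:)` uses.

```swift
import Foundation

/// Hypothetical helper: returns a pixel-space crop rectangle (top-left origin)
/// covering every salient box, or nil when there are no boxes.
/// Assumes the boxes are normalized to 0...1 with a lower-left origin.
func cropRect(covering normalizedBoxes: [CGRect], imageWidth: Int, imageHeight: Int) -> CGRect? {
    guard !normalizedBoxes.isEmpty else { return nil }

    // Clamp a normalized coordinate into 0...1 and work in Double from here on.
    func clamp(_ value: CGFloat) -> Double { min(max(Double(value), 0), 1) }

    // Union of all boxes in normalized space.
    let minX = normalizedBoxes.map { clamp($0.minX) }.min()!
    let maxX = normalizedBoxes.map { clamp($0.maxX) }.max()!
    let minY = normalizedBoxes.map { clamp($0.minY) }.min()!
    let maxY = normalizedBoxes.map { clamp($0.maxY) }.max()!

    let width = Double(imageWidth), height = Double(imageHeight)

    // Scale to pixels, rounding outward so the crop never cuts into a subject,
    // and flip y: the normalized top edge (maxY) becomes the smallest pixel row.
    let left = (minX * width).rounded(.down)
    let right = (maxX * width).rounded(.up)
    let top = ((1 - maxY) * height).rounded(.down)
    let bottom = ((1 - minY) * height).rounded(.up)

    return CGRect(x: CGFloat(left), y: CGFloat(top),
                  width: CGFloat(right - left), height: CGFloat(bottom - top))
}
```

If you want some margin around the subjects, expand the normalized union before scaling rather than after, so the clamping still keeps the crop inside the image.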

For more detail, see these chapters of the session Discover Swift enhancements in the Vision framework (start times in minutes:seconds):

- Introduction: 0:00

- New Vision API: 1:07

- Get started with Vision: 1:47

- Optimize with Swift Concurrency: 8:59

- Update an existing Vision app: 11:05

- What’s new in Vision?: 13:46

Additionally, if you are working with HDR images, the session Use HDR for dynamic image experiences in your app covers the full HDR pipeline, including reading, editing, displaying, and writing HDR images. This session provides strategies for handling HDR images to ensure they look great across different displays and environments.

For more information on these topics, you can explore the respective sessions and their chapters.

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.

Discover Swift enhancements in the Vision framework

The Vision Framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.

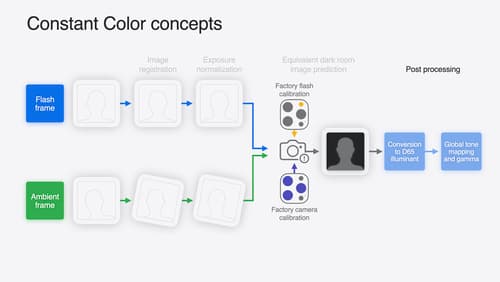

Keep colors consistent across captures

Meet the Constant Color API and find out how it can help people use your app to determine precise colors. You’ll learn how to adopt the API, explore its scientific and marketing potential, and discover best practices for making the most of the technology.