How do I embed location information?

Asked on 2024-07-31

To embed location information as anchors in your app, you can use the spatial tracking session API (`SpatialTrackingSession`) in RealityKit. A spatial tracking session gives your app access to the 3D location of anchors once the person using the app has authorized the anchor capabilities you ask to track. The steps are:

- Create a Spatial Tracking Session: First, create a spatial tracking session.

- Configure for Plane Anchor Tracking: Set up a configuration for plane anchor tracking.

- Run the Session: Run the session with the configuration. This prompts for authorization to access plane-anchored transforms.

- Add an Anchor: Create a floor anchor entity to track by targeting a plane with horizontal alignment and floor classification.

- Add the Floor Anchor to the Reality View: Add the floor anchor to the reality view content in the immersive space.
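The steps above can be sketched roughly as follows. This is a minimal sketch, assuming visionOS 2 and a view that is presented inside an immersive space. The view name `FloorAnchoredView`, the sphere marker, and the `minimumBounds` value are illustrative assumptions, not part of the original answer.

```swift
import SwiftUI
import RealityKit

// Hypothetical view name; present it from an ImmersiveSpace scene.
struct FloorAnchoredView: View {
    // Step 1: create the spatial tracking session and keep it alive with the view.
    @State private var session = SpatialTrackingSession()

    var body: some View {
        RealityView { content in
            // Step 4: target a horizontal plane classified as floor.
            // The minimum bounds value is an assumption chosen for illustration.
            let floorAnchor = AnchorEntity(
                .plane(.horizontal, classification: .floor, minimumBounds: [0.01, 0.01])
            )

            // Illustrative content so the anchor has something visible attached.
            let marker = ModelEntity(
                mesh: .generateSphere(radius: 0.05),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            floorAnchor.addChild(marker)

            // Step 5: add the floor anchor to the reality view content.
            content.add(floorAnchor)
        }
        .task {
            // Step 2: configure the session for plane anchor tracking.
            let configuration = SpatialTrackingSession.Configuration(tracking: [.plane])

            // Step 3: run the session; this is what prompts for authorization.
            if let unavailable = await session.run(configuration) {
                if unavailable.anchor.contains(.plane) {
                    // Plane tracking was not authorized or is unavailable.
                    print("Plane anchor tracking is unavailable")
                }
            }
        }
    }
}
```

If plane tracking is reported as unavailable, the anchor entity can still position its children, but the app does not get access to the anchor's transform.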

For more detailed information, you can refer to the session Dive deep into volumes and immersive spaces (26:35).

Additionally, if you are working with object tracking, you can use the following steps:

- Provide a 3D Model: Provide a 3D model representing the real-world object you want to track. The model is used to create a reference object with machine learning in Create ML.

- Create an Anchoring Component: Use RealityKit APIs to create your anchoring component at runtime.

- Augment with Virtual Content: Once the object is tracked, you can augment it with virtual content, such as labels or interactive elements.
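As a rough illustration of tracking an object and attaching content to it, the sketch below uses the ARKit route (`ObjectTrackingProvider`) rather than the RealityKit anchoring component named in the steps above, since the session description mentions both options. It assumes visionOS 2 and that a reference object file already exists. The function name `trackObject`, the `referenceObjectURL` parameter, and the `root` entity are hypothetical names introduced for illustration.

```swift
import ARKit
import RealityKit

// Hypothetical helper: `referenceObjectURL` points at an existing reference object file,
// and `root` is an entity that is already part of your RealityView content.
@MainActor
func trackObject(referenceObjectURL: URL, root: Entity, session: ARKitSession) async throws {
    // Load the reference object that describes the real-world item.
    let referenceObject = try await ReferenceObject(from: referenceObjectURL)

    // Ask ARKit to track that object.
    let provider = ObjectTrackingProvider(referenceObjects: [referenceObject])
    try await session.run([provider])

    // One placeholder entity per tracked anchor; attach labels or other content to it.
    var attachments: [UUID: Entity] = [:]

    for await update in provider.anchorUpdates {
        let anchor = update.anchor
        switch update.event {
        case .added:
            let attachment = Entity()
            attachment.transform = Transform(matrix: anchor.originFromAnchorTransform)
            root.addChild(attachment)
            attachments[anchor.id] = attachment
        case .updated:
            // Follow the object and hide the content while tracking is lost.
            attachments[anchor.id]?.transform = Transform(matrix: anchor.originFromAnchorTransform)
            attachments[anchor.id]?.isEnabled = anchor.isTracked
        case .removed:
            attachments[anchor.id]?.removeFromParent()
            attachments[anchor.id] = nil
        }
    }
}
```

The loop runs for as long as the provider delivers updates, so it would typically be started from a `Task` or a `.task` modifier and cancelled when the immersive space closes.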

For more details on object tracking, you can refer to the session Explore object tracking for visionOS (09:28).

Relevant Sessions

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection, which can make your spatial experiences more intuitive.

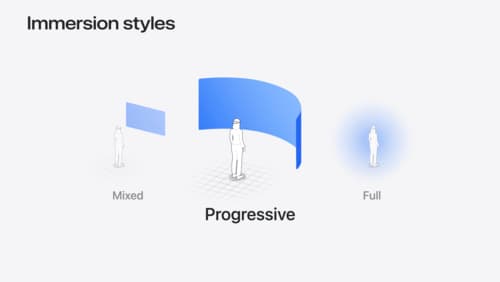

Dive deep into volumes and immersive spaces

Discover powerful new ways to customize volumes and immersive spaces in visionOS. Learn to fine-tune how volumes resize and respond to people moving around them. Make volumes and immersive spaces interact through the power of coordinate conversions. Find out how to make your app react when people adjust immersion with the Digital Crown, and use a surrounding effect to dynamically customize the passthrough tint in your immersive space experience.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.