What's the difference between ARKit and RealityKit?

Asked on 2024-07-31

The primary difference between ARKit and RealityKit is their focus: ARKit senses and tracks the real world, while RealityKit simulates and renders 3D content.

ARKit:

- Purpose: ARKit is primarily designed for augmented reality (AR) experiences. It provides a suite of tools and APIs to help developers create AR applications by understanding the real-world environment and integrating virtual content into it.

- Capabilities: ARKit includes features like scene understanding, room tracking, plane detection, object tracking, and hand tracking. These capabilities allow ARKit to detect and interact with the physical world, making it ideal for applications that require precise tracking and interaction with real-world objects.

- Use Cases: Examples include apps that need to detect hand gestures, track objects, or understand the layout of a room to place virtual objects accurately.
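As a rough illustration of the plane detection capability mentioned above, here is a minimal sketch that assumes the visionOS flavor of ARKit (`ARKitSession` with a `PlaneDetectionProvider`). The function name `monitorPlanes` is hypothetical and not part of any Apple API. The sketch also assumes the app has an open immersive space and has declared the world-sensing usage description, since plane data is not delivered otherwise.

```swift
import ARKit

// Hypothetical helper (not from the original answer): runs plane detection
// and logs each anchor update. Assumes visionOS with an open immersive space.
func monitorPlanes() async {
    // Plane detection is unavailable in some environments, such as the simulator.
    guard PlaneDetectionProvider.isSupported else {
        print("Plane detection is not supported here.")
        return
    }

    let session = ARKitSession()
    let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical])

    do {
        // Starting the session may prompt the user for world-sensing permission.
        try await session.run([planes])
    } catch {
        print("Could not start the ARKit session: \(error)")
        return
    }

    // Each update carries the anchor and whether it was added, updated, or removed.
    for await update in planes.anchorUpdates {
        let anchor = update.anchor
        switch update.event {
        case .added, .updated:
            print("Plane \(anchor.id): \(anchor.classification), \(anchor.alignment)")
        case .removed:
            print("Plane \(anchor.id) removed")
        }
    }
}
```

Note that ARKit only reports what it observes here. Drawing anything on the detected planes would be a job for a rendering framework such as RealityKit.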

RealityKit:

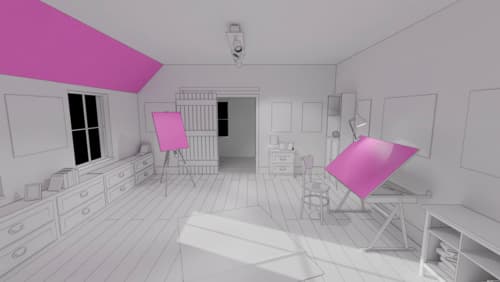

- Purpose: RealityKit is a high-performance 3D simulation and rendering framework. It is designed to create immersive spatial experiences by blending 3D content seamlessly with the real world.

- Capabilities: RealityKit offers advanced rendering capabilities, including support for realistic materials, virtual lighting, and dynamic models and textures. It also includes tools for creating complex animations, such as blend shapes and inverse kinematics. RealityKit is used by tools such as Reality Composer Pro and powers visionOS.

- Use Cases: It is ideal for creating rich, interactive 3D content and spatial computing apps, such as games or interactive simulations that require high-quality rendering and complex animations.
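To make the rendering side concrete, here is a minimal sketch of placing a 3D model with a metallic material in a scene. It assumes a SwiftUI app on a platform where `RealityView` is available (visionOS, or recent iOS and macOS releases). The view name `SphereView` and the specific size, color, and position are illustrative choices, not taken from the original answer.

```swift
import SwiftUI
import RealityKit

// Hypothetical view name; shows a single sphere rendered by RealityKit.
struct SphereView: View {
    var body: some View {
        RealityView { content in
            // Generate a sphere mesh with a 10 cm radius.
            let mesh = MeshResource.generateSphere(radius: 0.1)

            // A simple physically based material: blue, fairly smooth, metallic.
            let material = SimpleMaterial(color: .blue, roughness: 0.2, isMetallic: true)

            // Combine mesh and material into an entity and place it
            // half a meter along the negative z-axis.
            let sphere = ModelEntity(mesh: mesh, materials: [material])
            sphere.position = [0, 0, -0.5]

            content.add(sphere)
        }
    }
}
```

No ARKit call appears in this sketch: RealityKit handles the mesh, material, and rendering on its own, and ARKit is only needed when the app wants tracking data about the surroundings.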

For more detail on RealityKit, see the session "Discover RealityKit APIs for iOS, macOS, and visionOS".

Relevant Sessions

What’s new in USD and MaterialX

Explore updates to Universal Scene Description and MaterialX support on Apple platforms. Discover how these technologies provide a foundation for 3D content creation and delivery, and learn how they can help streamline your workflows for creating great spatial experiences. Learn about USD and MaterialX support in RealityKit and Storm, advancements in our system-provided tooling, and more.

Build a spatial drawing app with RealityKit

Harness the power of RealityKit through the process of building a spatial drawing app. As you create an eye-catching spatial experience that integrates RealityKit with ARKit and SwiftUI, you’ll explore how resources work in RealityKit and how to use features like low-level mesh and texture APIs to achieve fast updates of the users’ brush strokes.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.