Capture video input on Mac

Asked on 2024-07-31


To capture video input on a Mac, you can use the AVFoundation framework. Here's a basic outline of the steps involved:

- Create an AV capture session (`AVCaptureSession`): the session coordinates the flow of data from the input (the camera) to the output (a movie file).
- Get an AV capture device (`AVCaptureDevice`): use the system's default video camera.
- Add an input to the session: an `AVCaptureDeviceInput` wraps the capture device.
- Add an output to the session: an `AVCaptureMovieFileOutput` writes the captured video to disk.
- Commit the configuration, start running the session, and then start recording to a file URL.
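On macOS, capture only works after the user grants camera access, and the app needs an `NSCameraUsageDescription` entry in its Info.plist (plus the camera entitlement if it is sandboxed). A minimal sketch of checking and requesting access with `AVCaptureDevice.authorizationStatus(for:)` and `AVCaptureDevice.requestAccess(for:completionHandler:)`, assuming you configure and start the session only after the completion reports `true`. The function names `cameraAccessDecision` and `ensureCameraAccess` are hypothetical:

```swift
import AVFoundation

/// Hypothetical helper: true = capture may proceed, false = access is blocked,
/// nil = the user hasn't been asked yet.
func cameraAccessDecision(for status: AVAuthorizationStatus) -> Bool? {
    switch status {
    case .authorized: return true
    case .notDetermined: return nil
    case .denied, .restricted: return false
    @unknown default: return false
    }
}

/// Hypothetical helper: calls `completion` with whether the camera may be used,
/// showing the system permission prompt only when the status is undetermined.
func ensureCameraAccess(completion: @escaping @Sendable (Bool) -> Void) {
    let status = AVCaptureDevice.authorizationStatus(for: .video)
    if let decided = cameraAccessDecision(for: status) {
        completion(decided)
    } else {
        // The handler may be called on an arbitrary queue.
        AVCaptureDevice.requestAccess(for: .video, completionHandler: completion)
    }
}
```

Keeping the status-to-decision mapping in a pure function makes that logic testable without triggering the permission prompt.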

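Here's a snippet of code that implements the outlined steps. It is wrapped in a class so that `self` can act as the recording delegate:

```swift
import AVFoundation

// The recording delegate must be an NSObject that conforms to
// AVCaptureFileOutputRecordingDelegate, so the steps live in a class.
final class MovieRecorder: NSObject, AVCaptureFileOutputRecordingDelegate {
    let captureSession = AVCaptureSession()
    let output = AVCaptureMovieFileOutput()

    // outputURL example: URL(fileURLWithPath: "path/to/output.mov")
    func startRecording(to outputURL: URL) throws {
        // Use the system's default video camera.
        guard let captureDevice = AVCaptureDevice.default(for: .video) else { return }
        let input = try AVCaptureDeviceInput(device: captureDevice)

        // Batch the session changes and apply them together.
        captureSession.beginConfiguration()
        if captureSession.canAddInput(input) { captureSession.addInput(input) }
        if captureSession.canAddOutput(output) { captureSession.addOutput(output) }
        captureSession.commitConfiguration()

        // startRunning() blocks until the session is running; avoid calling it on the main thread.
        captureSession.startRunning()
        output.startRecording(to: outputURL, recordingDelegate: self)
    }

    // Called when the movie file is finished; error is non-nil if recording failed.
    func fileOutput(_ output: AVCaptureFileOutput,
                    didFinishRecordingTo outputFileURL: URL,
                    from connections: [AVCaptureConnection],
                    error: Error?) {
        print("Finished recording to \(outputFileURL.path), error: \(String(describing: error))")
    }
}
```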
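The output location must be a writable file URL, and Apple's documentation for `startRecording(to:recordingDelegate:)` notes that recording fails if a file already exists at that URL, so a fixed placeholder such as `path/to/output.mov` only works once. A minimal sketch of a hypothetical helper, `makeUniqueMovieURL`, that builds a fresh `.mov` URL in the temporary directory:

```swift
import Foundation

/// Hypothetical helper: returns a unique .mov file URL inside `directory`
/// (the temporary directory by default).
func makeUniqueMovieURL(in directory: URL = FileManager.default.temporaryDirectory) -> URL {
    // A random UUID file name makes a clash with an existing file practically impossible.
    directory
        .appendingPathComponent(UUID().uuidString)
        .appendingPathExtension("mov")
}
```

Pass the result as the `outputURL` given to `startRecording(to:recordingDelegate:)`, and move the finished file somewhere permanent in the delegate callback if you need to keep it.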

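To extend this code to record spatial video, you need to make three changes. Note that spatial video capture is an iOS feature (the WWDC 2024 session demonstrates it on iPhone 15 Pro); the dual wide camera it relies on isn't available on a Mac.

- Change the AV capture device: use the back built-in dual wide camera (`.builtInDualWideCamera`).
- Pick a supported video format: iterate through the device's formats and select one whose `isSpatialVideoCaptureSupported` property is `true`.
- Enable spatial video recording on the movie file output (`isSpatialVideoCaptureEnabled`).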

Here's an example of how to modify the code for spatial video:

```swift
// Change the AV capture device: the back dual wide camera (iPhone only).
guard let captureDevice = AVCaptureDevice.default(.builtInDualWideCamera,
                                                  for: .video,
                                                  position: .back) else { return }

// Pick a supported video format: the first one that can capture spatial video.
for format in captureDevice.formats where format.isSpatialVideoCaptureSupported {
    try captureDevice.lockForConfiguration()
    captureDevice.activeFormat = format
    captureDevice.unlockForConfiguration()
    break
}

// Enable spatial video recording, once the output reports that it's supported.
if output.isSpatialVideoCaptureSupported {
    output.isSpatialVideoCaptureEnabled = true
}
```
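The format loop picks the first format whose `isSpatialVideoCaptureSupported` is `true` and silently does nothing if none qualifies. A minimal sketch of the same search written with `first(where:)`, returning `nil` so the caller can report that the device can't record spatial video. To keep it testable without a camera, it works over a hypothetical protocol, `SpatialFormatCandidate`, and a test double, `FakeFormat`, instead of `AVCaptureDevice.Format`:

```swift
/// Hypothetical stand-in for the one property of AVCaptureDevice.Format the loop reads.
protocol SpatialFormatCandidate {
    var isSpatialVideoCaptureSupported: Bool { get }
}

/// Returns the first spatial-capable format, or nil if the device has none.
func firstSpatialFormat<F: SpatialFormatCandidate>(in formats: [F]) -> F? {
    formats.first(where: { $0.isSpatialVideoCaptureSupported })
}

/// Hypothetical test double used in place of real camera formats.
struct FakeFormat: SpatialFormatCandidate {
    let id: Int
    let isSpatialVideoCaptureSupported: Bool
}
```

Assuming an iOS 18 SDK, declaring `extension AVCaptureDevice.Format: SpatialFormatCandidate {}` should let the same function run on `captureDevice.formats`, since the real type already exposes that property.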

For more details, see the WWDC 2024 session "Build compelling spatial photo and video experiences".

Relevant Sessions

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.

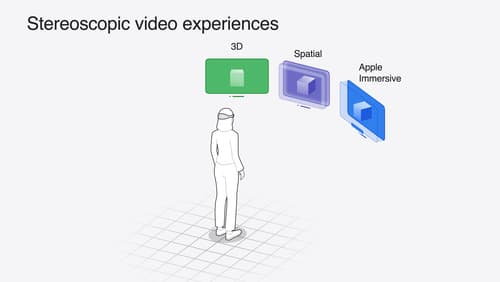

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.

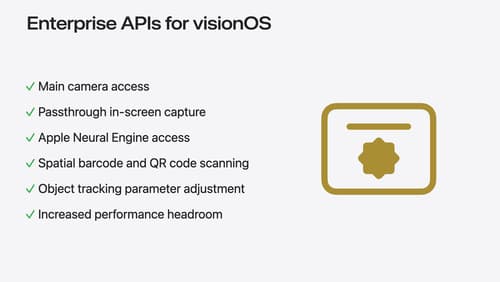

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.