How can I leverage on-device transcriptions of podcasts in my own app?

Asked on 2024-07-31


To transcribe podcast audio on device in your own app, you can use Apple's ML-powered APIs, which include speech-to-text conversion. The following sessions are relevant starting points:

- Explore Machine Learning on Apple Platforms:

  - This session introduces various ML-powered APIs that can help you segment and understand natural language, convert speech to text, and more. You can find more details in the chapter on ML-powered APIs.

  - Explore machine learning on Apple platforms (03:55)

- Meet the Translation API:

  - This session is about translating text, not transcribing audio. It becomes relevant once you have a transcript: it covers how to translate text in your app and how language assets are managed on device.

  - Meet the Translation API (00:00)

- Using Translation APIs:

  - This part of the session gives an overview of adding translation features to your app. The Translation framework works on text, so it does not produce transcripts itself, but it can be applied to transcript text after speech-to-text conversion.

  - Meet the Translation API (02:10)

Together, these sessions show which of Apple's machine learning frameworks and APIs to look at for on-device speech-to-text and for working with the resulting transcript text.
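As a starting point for the speech-to-text part, here is a minimal sketch using the Speech framework's `SFSpeechRecognizer` with `requiresOnDeviceRecognition` set. It is not taken from the sessions above. The `EpisodeTranscriber` class, the `formatTimestamp` helper, and the `episodeURL` parameter are hypothetical names for illustration, and the sketch assumes the episode is already a local audio file, that your Info.plist contains `NSSpeechRecognitionUsageDescription`, and that the chosen locale supports on-device recognition on the user's device.

```swift
import Foundation
#if canImport(Speech)
import Speech
#endif

/// Hypothetical helper: formats an offset in seconds as "mm:ss".
func formatTimestamp(_ seconds: Double) -> String {
    let total = Int(seconds.rounded(.down))
    func pad(_ value: Int) -> String { value < 10 ? "0\(value)" : "\(value)" }
    return "\(pad(total / 60)):\(pad(total % 60))"
}

#if canImport(Speech)
enum TranscriptionError: Error {
    case notAuthorized
    case onDeviceUnavailable
}

/// Hypothetical wrapper that keeps the recognizer and task alive while
/// a local audio file is transcribed on device.
final class EpisodeTranscriber {
    private var recognizer: SFSpeechRecognizer?
    private var task: SFSpeechRecognitionTask?

    func transcribe(episodeURL: URL,
                    locale: Locale = Locale(identifier: "en-US"),
                    completion: @escaping (Result<[String], Error>) -> Void) {
        // Ask the user for permission before any recognition starts.
        SFSpeechRecognizer.requestAuthorization { status in
            guard status == .authorized else {
                completion(.failure(TranscriptionError.notAuthorized))
                return
            }
            // Bail out if this locale cannot be recognized on device.
            guard let recognizer = SFSpeechRecognizer(locale: locale),
                  recognizer.isAvailable,
                  recognizer.supportsOnDeviceRecognition else {
                completion(.failure(TranscriptionError.onDeviceUnavailable))
                return
            }
            self.recognizer = recognizer

            let request = SFSpeechURLRecognitionRequest(url: episodeURL)
            request.requiresOnDeviceRecognition = true  // audio stays on the device
            request.shouldReportPartialResults = false  // only deliver the final result

            self.task = recognizer.recognitionTask(with: request) { result, error in
                if let error = error {
                    completion(.failure(error))
                    return
                }
                guard let result = result, result.isFinal else { return }
                // One line per recognized segment, prefixed with its start time.
                let lines = result.bestTranscription.segments.map { segment in
                    "[\(formatTimestamp(segment.timestamp))] \(segment.substring)"
                }
                completion(.success(lines))
            }
        }
    }
}
#endif
```

You would call `EpisodeTranscriber().transcribe(episodeURL:)` with the file URL of a downloaded episode and keep the transcriber instance alive until the completion handler fires. The completion handler may be called on a background queue, so hop to the main queue before updating UI. The resulting lines are plain text, which is what the Translation framework mentioned above operates on.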

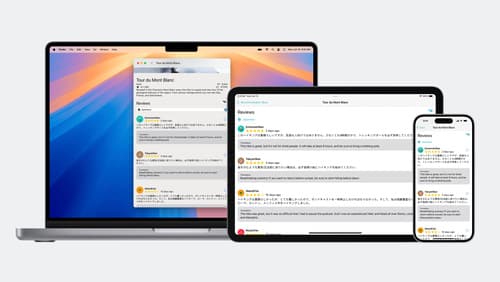

Meet the Translation API

Discover how you can translate text across different languages in your app using the new Translation framework. We’ll show you how to quickly display translations in the system UI, and how to translate larger batches of text for your app’s UI.

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.