How do I transform an entity with gestures?

Asked on 2024-07-29


To transform an entity with gestures in visionOS, you can use a variety of system gestures such as pinch and hold, pinch and drag, and combinations of gestures. Here are the steps and considerations based on the content from the WWDC sessions:

System Gestures:

- Pinch and Hold: Pinching and holding activates an object. While you hold the pinch, moving your hand controls the object's direction and force. For example, the game "What the Golf" uses this gesture to let players aim the ball and set the force of the shot (Explore game input in visionOS).

- Combination Gestures: You can combine gestures like magnify and rotate to allow seamless transitions between multiple gestures. This is useful for actions like resizing and rotating objects simultaneously (Explore game input in visionOS).
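The combination idea can be sketched with SwiftUI and RealityKit. This is a minimal sketch, not code from the session: it assumes a visionOS `RealityView` containing a single box, combines `MagnifyGesture` and `RotateGesture3D` with `simultaneously(with:)`, and targets the result at any entity. The names `ScaleAndRotateView`, `scaled(_:by:)`, and `floatQuaternion(_:)` are hypothetical.

```swift
import SwiftUI
import RealityKit
import Spatial
import simd

/// Hypothetical helper: uniformly scales a starting scale by a
/// MagnifyGesture magnification factor.
func scaled(_ start: SIMD3<Float>, by magnification: CGFloat) -> SIMD3<Float> {
    start * Float(magnification)
}

/// Hypothetical helper: converts the double-precision quaternion of a
/// Rotation3D into the single-precision quaternion Entity.orientation uses.
func floatQuaternion(_ q: simd_quatd) -> simd_quatf {
    simd_quatf(ix: Float(q.imag.x), iy: Float(q.imag.y),
               iz: Float(q.imag.z), r: Float(q.real))
}

struct ScaleAndRotateView: View {
    // Pose captured when the gesture starts, so each update is applied
    // relative to it instead of compounding frame over frame.
    @State private var startScale: SIMD3<Float>?
    @State private var startOrientation: simd_quatf?

    var body: some View {
        RealityView { content in
            let box = ModelEntity(
                mesh: .generateBox(size: 0.2),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)])
            // Both components are required for the entity to receive gestures.
            box.components.set(InputTargetComponent())
            box.generateCollisionShapes(recursive: false)
            content.add(box)
        }
        .gesture(
            MagnifyGesture()
                .simultaneously(with: RotateGesture3D())
                .targetedToAnyEntity()
                .onChanged { value in
                    let entity = value.entity
                    let baseScale = startScale ?? entity.scale
                    let baseOrientation = startOrientation ?? entity.orientation
                    startScale = baseScale
                    startOrientation = baseOrientation

                    // Either half of the simultaneous gesture can be nil
                    // while only one of them is active.
                    if let magnify = value.gestureValue.first {
                        entity.scale = scaled(baseScale, by: magnify.magnification)
                    }
                    if let rotate = value.gestureValue.second {
                        // The rotation is reported in SwiftUI's gesture space;
                        // depending on your scene you may need to remap its axis.
                        let delta = floatQuaternion(rotate.rotation.quaternion)
                        entity.orientation = delta * baseOrientation
                    }
                }
                .onEnded { _ in
                    startScale = nil
                    startOrientation = nil
                }
        )
    }
}
```

Capturing the starting scale and orientation on the first update, and clearing them in `onEnded`, keeps each update relative to the pose at the start of the gesture, so resizing and rotating can flow into each other without the transform drifting.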

Implementation:

- Input Target and Collision Components: Make sure every entity you want to be tappable has both an InputTargetComponent and a CollisionComponent. Then attach a gesture to the RealityView that contains your entities and target it at any entity in that view (for example, with targetedToAnyEntity()), so every such entity can receive it (Explore game input in visionOS).

- Gesture Handlers: Once a gesture is detected, respond to it in the gesture handlers. For Unity-based games, use the Unity input system to detect and respond to gestures (Explore game input in visionOS).
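Here is a minimal sketch of the RealityKit setup described above, assuming a visionOS app that shows a single sphere in a `RealityView`. The helper `makeInteractive(_:radius:)` and the view name `TapAndDragView` are hypothetical. The tap handler only prints the entity's name; the drag handler moves the entity to follow the pinching hand, which is one common way to implement pinch and drag.

```swift
import SwiftUI
import RealityKit

/// Hypothetical helper: adds the two components an entity needs before
/// RealityView gestures can target it.
func makeInteractive(_ entity: Entity, radius: Float) {
    entity.components.set(InputTargetComponent())
    entity.components.set(CollisionComponent(shapes: [.generateSphere(radius: radius)]))
}

struct TapAndDragView: View {
    var body: some View {
        RealityView { content in
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)])
            sphere.name = "sphere"
            makeInteractive(sphere, radius: 0.1)
            content.add(sphere)
        }
        // Tap: targetedToAnyEntity() lets the gesture hit any entity in the
        // view that has InputTargetComponent and CollisionComponent.
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    print("Tapped \(value.entity.name)")
                }
        )
        // Pinch and drag: convert the hand's 3D location from SwiftUI's local
        // space into the entity's parent space and move the entity there.
        .gesture(
            DragGesture()
                .targetedToAnyEntity()
                .onChanged { value in
                    guard let parent = value.entity.parent else { return }
                    value.entity.position = value.convert(
                        value.location3D, from: .local, to: parent)
                }
        )
    }
}
```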

Custom Gestures:

- If system gestures do not cover all your needs, you can define custom gestures. Ensure they are easy for players to learn and remember, and provide appropriate feedback with visual effects and sounds (Explore game input in visionOS).
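On visionOS, one way to build a custom gesture is to read hand-joint positions through ARKit hand tracking and test them yourself. The sketch below assumes a hypothetical thumb-to-middle-finger pinch and a 1.5 cm touch threshold; both are illustrative choices, not values from the session, and the function names are hypothetical. Hand tracking only delivers data while your app has an open immersive space and the user has granted hand-tracking permission.

```swift
import ARKit
import simd

/// Hypothetical threshold: fingertips closer than 1.5 cm count as touching.
let touchThreshold: Float = 0.015

/// Pure distance check, kept separate so it can be tested without a device.
func fingertipsTouching(_ a: SIMD3<Float>, _ b: SIMD3<Float>,
                        threshold: Float = touchThreshold) -> Bool {
    simd_distance(a, b) < threshold
}

/// World-space position of a joint, or nil if the joint isn't tracked.
func worldPosition(of name: HandSkeleton.JointName, in hand: HandAnchor) -> SIMD3<Float>? {
    guard let joint = hand.handSkeleton?.joint(name), joint.isTracked else { return nil }
    let transform = hand.originFromAnchorTransform * joint.anchorFromJointTransform
    return SIMD3<Float>(transform.columns.3.x, transform.columns.3.y, transform.columns.3.z)
}

/// Runs hand tracking and calls onPinch when the thumb tip touches the
/// middle fingertip (a hypothetical custom gesture).
func detectMiddleFingerPinch(onPinch: (HandAnchor.Chirality) -> Void) async throws {
    let session = ARKitSession()
    let handTracking = HandTrackingProvider()
    try await session.run([handTracking])

    for await update in handTracking.anchorUpdates {
        let hand = update.anchor
        guard hand.isTracked,
              let thumb = worldPosition(of: .thumbTip, in: hand),
              let middle = worldPosition(of: .middleFingerTip, in: hand) else { continue }
        // Fires on every update while the fingertips touch; a real game would
        // debounce this and add the visual and sound feedback recommended above.
        if fingertipsTouching(thumb, middle) {
            onPinch(hand.chirality)
        }
    }
}
```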

-

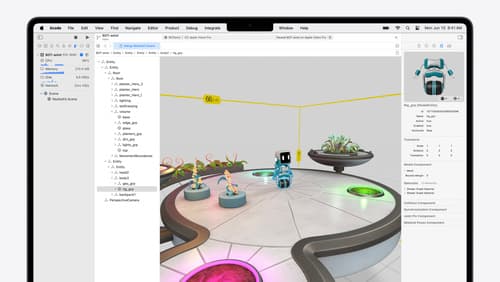

RealityKit Debugger:

- Use the RealityKit debugger to troubleshoot and fix any issues with transformations. For example, if scaling an entity affects its descendants unintentionally, you can adjust the hierarchy to fix the problem (Break into the RealityKit debugger).
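The debugger helps you find which entity's transform is wrong; the fix itself is ordinary RealityKit code. This sketch assumes a hypothetical hierarchy in which a label entity is a child of a model the player scales. Because a child's transform is relative to its parent, the label inherits the model's scale. Re-parenting it with `setParent(_:preservingWorldTransform:)` makes it a sibling that keeps its world position but no longer follows the model's scale. The function names are hypothetical.

```swift
import RealityKit
import simd

/// Builds a hypothetical hierarchy: root -> model -> label.
func makeHierarchy() -> (root: Entity, model: Entity, label: Entity) {
    let root = Entity()
    let model = Entity()
    let label = Entity()
    root.addChild(model)
    model.addChild(label)
    return (root, model, label)
}

/// Moves the label under a new, unscaled parent. preservingWorldTransform
/// keeps it where it currently appears instead of snapping to new coordinates.
func detachFromScaledParent(_ label: Entity, newParent: Entity) {
    label.setParent(newParent, preservingWorldTransform: true)
}

/// Compares vectors with a small tolerance, since world-space values come
/// from matrix math and may not be bit-exact.
func approxEqual(_ a: SIMD3<Float>, _ b: SIMD3<Float>, tolerance: Float = 1e-4) -> Bool {
    simd_distance(a, b) < tolerance
}
```

Before the fix, setting the model's scale to 2 doubles the label's world scale; after detaching, the same change leaves the label at its original size.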

For more detailed information, refer to the sessions cited above, Explore game input in visionOS and Break into the RealityKit debugger, which cover using gestures to transform entities and troubleshooting transformation issues.

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.

Compose interactive 3D content in Reality Composer Pro

Discover how the Timeline view in Reality Composer Pro can bring your 3D content to life. Learn how to create an animated story in which characters and objects interact with each other and the world around them using inverse kinematics, blend shapes, and skeletal poses. We’ll also show you how to use built-in and custom actions, sequence your actions, apply triggers, and implement natural movements.