How do I cr

Asked on 2024-08-01


It looks like your question was cut off. Based on the context provided, here is a summary of related topics covered at WWDC:

Sessions Mentioned

- What’s new in Create ML

- Explore object tracking for visionOS

- What’s new in Quick Look for visionOS

- Optimize for the spatial web

- Create custom environments for your immersive apps in visionOS

Key Topics

Create ML

- App Enhancements: The Create ML app on Mac lets you create models for predicting content in images, videos, or tabular data, and for detecting objects, sounds, human actions, and activities. (What’s new in Create ML)

- Object Tracking: A new template for building machine learning models to track the spatial location and orientation of objects. (What’s new in Create ML)

visionOS

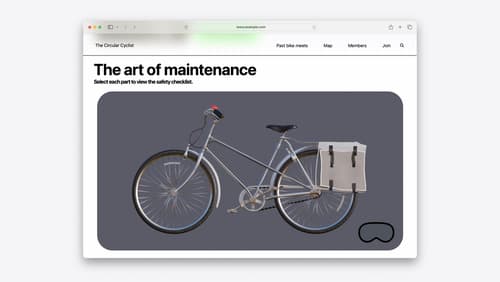

- Object Tracking: You can create immersive experiences by anchoring virtual content to reference objects using Reality Composer Pro and RealityKit APIs. (Explore object tracking for visionOS)

- 3D Preview Enhancements: Quick Look now supports snapping 3D models to horizontal surfaces like tables or floors, making it easier to place and manipulate models. (What’s new in Quick Look for visionOS)

Spatial Web

- 3D Asset Generation: You can create 3D captures using your phone and apps like Reality Composer, and convert models into the required format using built-in tools in Preview on Mac. (Optimize for the spatial web)

Custom Environments

- Export Workflow: You can export 3D scenes from tools like Blender to Reality Composer Pro for further manipulation and integration into immersive apps. (Create custom environments for your immersive apps in visionOS)

If you have a more specific question or need details on a particular topic, feel free to ask!

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object using Reality Composer Pro, RealityKit, or ARKit APIs.

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.
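
As a rough illustration of the text-to-speech use of WebSpeech mentioned above, here is a minimal TypeScript sketch. The helper name `speakText` and its default language are hypothetical and do not come from the session; the sketch relies only on the standard `speechSynthesis` and `SpeechSynthesisUtterance` browser globals, and returns `false` when they are not available.

```typescript
// Hypothetical helper (not from the session): speak text with the Web Speech API.
// Returns true if an utterance was queued, false if speech synthesis is
// unavailable in this environment or the text is empty.
function speakText(text: string, lang: string = "en-US"): boolean {
  // Look the globals up dynamically so the code also loads outside a browser.
  const g = globalThis as any;
  const synth = g.speechSynthesis;
  const Utterance = g.SpeechSynthesisUtterance;

  if (!synth || typeof Utterance !== "function" || text.trim() === "") {
    return false;
  }

  const utterance = new Utterance(text);
  utterance.lang = lang; // BCP 47 language tag, assumed default "en-US"
  synth.speak(utterance); // queues the utterance for playback
  return true;
}
```

A page might call `speakText("Welcome")` from a button's click handler, since some browsers may only allow speech after a user gesture.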