How does this system work?

Asked on 2024-08-01

The system described here follows RealityKit's entity-component-system (ECS) pattern. Here's a breakdown of how it works:

Entities and Components: Entities hold data in components, while systems hold the logic. On each scene update, a system acts only on the entities that have the specific components it queries for.
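To make that division of labor concrete, here is a minimal plain-Swift model of the pattern. It is not RealityKit code: `Entity`, `Component`, `RotationComponent`, `SpinComponent`, and `SpinSystem` are hypothetical stand-ins so the sketch runs without RealityKit. In RealityKit itself you would conform structs to `Component`, store them in `entity.components`, and put the behavior in a type conforming to `System` whose `update(context:)` iterates the results of an `EntityQuery`.

```swift
// Plain-Swift model of the ECS pattern. All type names are hypothetical;
// RealityKit's real counterparts are `Component`, `Entity.components`,
// `System`, and `EntityQuery`.

protocol Component {}

// Components: plain data, no behavior.
struct RotationComponent: Component {
    var degrees: Double = 0
}

struct SpinComponent: Component {
    var degreesPerSecond: Double
}

// An entity is an identity plus at most one component of each type.
final class Entity {
    private var components: [ObjectIdentifier: any Component] = [:]

    subscript<T: Component>(_ type: T.Type) -> T? {
        get { components[ObjectIdentifier(type)] as? T }
        set { components[ObjectIdentifier(type)] = newValue }
    }
}

// A system owns the behavior and only touches entities that
// have every component it needs (its "query").
struct SpinSystem {
    func update(_ entities: [Entity], deltaTime: Double) {
        for entity in entities {
            guard let spin = entity[SpinComponent.self],
                  var rotation = entity[RotationComponent.self] else { continue }
            rotation.degrees += spin.degreesPerSecond * deltaTime
            entity[RotationComponent.self] = rotation // components are values: write back
        }
    }
}

let spinner = Entity()
spinner[RotationComponent.self] = RotationComponent()
spinner[SpinComponent.self] = SpinComponent(degreesPerSecond: 90)

let statue = Entity()
statue[RotationComponent.self] = RotationComponent() // no SpinComponent, so the system skips it

SpinSystem().update([spinner, statue], deltaTime: 0.5)
print(spinner[RotationComponent.self]?.degrees ?? -1) // 45.0
print(statue[RotationComponent.self]?.degrees ?? -1)  // 0.0
```

Because `statue` lacks a `SpinComponent`, the system never touches it: adding or removing components is how an entity opts in or out of a system.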

Teleportation System Example:

- The teleportation system stores its data in a control center component.

- On every update, the system decrements a countdown value stored in that component.

- When the countdown reaches zero, it finds all entities that have a teleporter component and picks one at random as the spawn point for a new robot.

- The countdown is then reset, and the cycle repeats until the club is at capacity.
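The teleportation loop described above can be sketched as a per-frame function. This is an assumption-laden sketch, not the session's code: `ControlCenterComponent`, `Teleporter`, `TeleportationSystem`, the `spawnInterval` and `capacity` fields, and the frame timing are all hypothetical. In a real RealityKit app this logic would live inside a `System`'s `update(context:)`, using `context.deltaTime` as the elapsed time and an entity query to find the teleporters.

```swift
// Plain-Swift sketch of the teleportation system's per-frame logic.
// All names are hypothetical stand-ins for the components described above.

struct ControlCenterComponent {
    var countdown: Double       // seconds left until the next spawn
    var spawnInterval: Double   // value the countdown resets to after a spawn
    var robotCount = 0
    var capacity: Int           // stop spawning once the club is full
}

struct Teleporter {
    let name: String
}

struct TeleportationSystem {
    /// Called once per frame. Returns the teleporter chosen for a new robot, or nil.
    func update<G: RandomNumberGenerator>(
        controlCenter: inout ControlCenterComponent,
        teleporters: [Teleporter],
        deltaTime: Double,
        using generator: inout G
    ) -> Teleporter? {
        // The club is at capacity: nothing left to do.
        guard controlCenter.robotCount < controlCenter.capacity else { return nil }

        controlCenter.countdown -= deltaTime
        guard controlCenter.countdown <= 0,
              let teleporter = teleporters.randomElement(using: &generator) else { return nil }

        // Spawn at the randomly chosen teleporter, then reset the countdown.
        controlCenter.robotCount += 1
        controlCenter.countdown = controlCenter.spawnInterval
        return teleporter
    }
}

var controlCenter = ControlCenterComponent(countdown: 1, spawnInterval: 1, capacity: 2)
let teleporters = [Teleporter(name: "north"), Teleporter(name: "south")]
var generator = SystemRandomNumberGenerator()
var spawnedAt: [String] = []

// Simulate ten frames of 0.5 s each.
for _ in 0..<10 {
    if let teleporter = TeleportationSystem().update(
        controlCenter: &controlCenter, teleporters: teleporters,
        deltaTime: 0.5, using: &generator) {
        spawnedAt.append(teleporter.name)
    }
}
print(spawnedAt.count) // 2
```

Spawns happen at frames 2 and 4, after which the capacity check stops the loop. Passing the random number generator in as a parameter is a design choice that lets a test substitute its own deterministic generator.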

Debugging with RealityKit:

- If an entity's components are misconfigured or missing, a system may skip that entity or behave in unexpected ways.

- The RealityKit debugger captures the app state at the moment you hit pause, allowing you to inspect and modify components.

- For example, if a countdown value never changes, the system may be modifying a copy of the component without saving it back to the entity.
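A common cause of a "stuck" countdown is Swift value semantics: RealityKit components are structs, so reading `entity.components[SomeComponent.self]` gives you a copy. The plain-Swift stand-in below (hypothetical `Entity` and `CountdownComponent` types, no RealityKit) reproduces the bug and the fix. In RealityKit the fix is the same idea: assign the modified copy back with `entity.components[SomeComponent.self] = component` or `entity.components.set(component)`.

```swift
// Plain-Swift illustration of the missing write-back bug.
// `Entity` and `CountdownComponent` are hypothetical stand-ins.

struct CountdownComponent {
    var remaining: Double
}

final class Entity {
    var countdown = CountdownComponent(remaining: 5)
}

// Buggy: `component` is a copy of the struct, so the change
// is discarded when the function returns.
func tickWithoutWriteBack(_ entity: Entity, deltaTime: Double) {
    var component = entity.countdown
    component.remaining -= deltaTime
}

// Fixed: store the modified copy back on the entity.
func tickWithWriteBack(_ entity: Entity, deltaTime: Double) {
    var component = entity.countdown
    component.remaining -= deltaTime
    entity.countdown = component
}

let robot = Entity()
tickWithoutWriteBack(robot, deltaTime: 1)
print(robot.countdown.remaining) // 5.0
tickWithWriteBack(robot, deltaTime: 1)
print(robot.countdown.remaining) // 4.0
```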

Audio System in RealityKit:

- A custom system can read a value such as throttle and map it to an audio property such as gain, measured in decibels.

- Audio playback is spatial by default, and you can configure it by adding a `SpatialAudioComponent`, `AmbientAudioComponent`, or `ChannelAudioComponent` to an entity.
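The throttle-to-decibels mapping can be as simple as a clamped linear interpolation in decibels. This sketch assumes a throttle in 0...1 and an output range of -30 dB to 0 dB; both the range and the linear curve are illustrative assumptions rather than values from the session, and `ThrottleToGain` is a hypothetical name. In a RealityKit app you might apply the result each frame to an `AudioPlaybackController`'s `gain` property, which is expressed in decibels.

```swift
// Hypothetical throttle-to-gain mapping; the dB range is an assumption.
struct ThrottleToGain {
    var minimumGain: Double = -30   // dB at zero throttle (assumed)
    var maximumGain: Double = 0     // dB at full throttle (assumed)

    /// Clamps throttle to 0...1, then interpolates linearly in decibels.
    func gain(forThrottle throttle: Double) -> Double {
        let t = min(max(throttle, 0), 1)
        return minimumGain + (maximumGain - minimumGain) * t
    }
}

let mapping = ThrottleToGain()
print(mapping.gain(forThrottle: 0))    // -30.0
print(mapping.gain(forThrottle: 0.5))  // -15.0
print(mapping.gain(forThrottle: 2))    // 0.0 (clamped)
```

Interpolating in decibels rather than in linear amplitude tends to give a more even-sounding ramp, because loudness perception is roughly logarithmic.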

For more detailed information, you can refer to the following sessions:

- Break into the RealityKit debugger (00:07:34)

- Enhance your spatial computing app with RealityKit audio (00:07:17)

Relevant Sessions

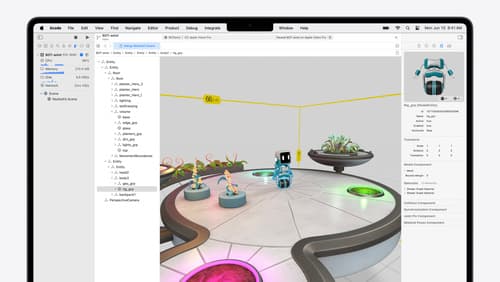

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.

Say hello to the next generation of CarPlay design system

Explore the design system at the heart of the next generation of CarPlay that allows each automaker to express the character and brand of its vehicles. Learn how gauges, layouts, dynamic content, and more are deeply customizable and adaptable, allowing you to express your own design philosophy and create an iconic, tailored look. This session is intended for automakers, system developers, and anyone designing a system that supports the next generation of CarPlay.

Extend your app’s controls across the system

Bring your app’s controls to Control Center, the Lock Screen, and beyond. Learn how you can use WidgetKit to extend your app’s controls to the system experience. We’ll cover how to build a control, tailor its appearance, and make it configurable.