What APIs does Apple AI have?

Asked on 2024-08-01

Apple provides a range of APIs and frameworks for integrating AI and machine learning into your apps. The key ones covered in the sessions listed at the end of this answer are:

- Vision Framework: Offers capabilities for visual intelligence, including text extraction, face detection, and body pose recognition. It now includes a new Swift API with Swift 6 support and adds hand pose detection to body pose requests.

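- Natural Language Processing: APIs for understanding and processing text, such as identifying its language and splitting it into words.

- Sound Analysis: APIs for analyzing audio, for example classifying the sounds in a recording.

- Speech Understanding: APIs for recognizing speech and converting it to text.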

- Create ML: Allows you to train or fine-tune models using additional data.

- Core ML: For deploying machine learning models on Apple devices.

- MLX: An open-source tool designed by Apple machine learning researchers for other researchers, built on a unified memory model for efficient operations across CPU and GPU.

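- Apple Neural Engine: Dedicated on-device hardware for accelerating machine learning rather than a framework in itself. The visionOS enterprise APIs give apps access to it and also offer enhanced object tracking.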

- App Intents Toolbox: Helps the system understand what your app can do and take actions in it on behalf of the user.
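To make the Natural Language Processing entry above more concrete, here is a minimal Swift sketch. It assumes that entry refers to Apple's `NaturalLanguage` framework, which the answer does not name. The helper functions `dominantLanguage(of:)` and `words(in:)` are hypothetical names introduced for illustration. Treat it as a starting point rather than a recommended integration.

```swift
import NaturalLanguage

// Hypothetical helper: guess the dominant language of a string.
// Returns nil when the recognizer cannot decide.
func dominantLanguage(of text: String) -> NLLanguage? {
    let recognizer = NLLanguageRecognizer()
    recognizer.processString(text)
    return recognizer.dominantLanguage
}

// Hypothetical helper: split a string into word tokens.
func words(in text: String) -> [String] {
    let tokenizer = NLTokenizer(unit: .word)
    tokenizer.string = text
    // tokens(for:) returns the ranges of each token in the string.
    let ranges = tokenizer.tokens(for: text.startIndex..<text.endIndex)
    return ranges.map { String(text[$0]) }
}

let sample = "Core ML deploys machine learning models on Apple devices."
print(dominantLanguage(of: sample)?.rawValue ?? "unknown")
print(words(in: sample))
```

Both helpers run entirely on device and need no model of your own, which is why the higher-level frameworks are usually the first thing to try before training a custom model with Create ML.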

For more detail, refer to the sessions listed below. They cover these APIs and frameworks in depth, including how to integrate them into your apps and make use of Apple's AI and machine learning capabilities.

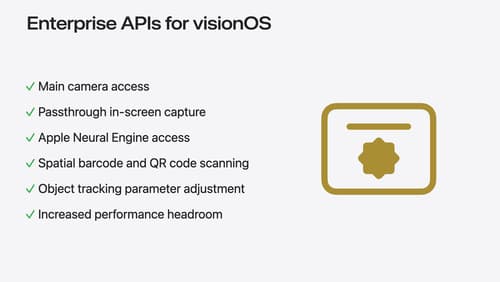

- Introducing enterprise APIs for visionOS: Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

- Platforms State of the Union: Discover the newest advancements on Apple platforms.

- Explore machine learning on Apple platforms: Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.