How do I use a RealityView?

Asked on 2024-08-01


To use a RealityView in visionOS, you can follow these steps:

-

Create a New Project: Start by creating a new Xcode project using the VisionOS app template. This will automatically create a default scene that you can open in Reality Composer Pro.

-

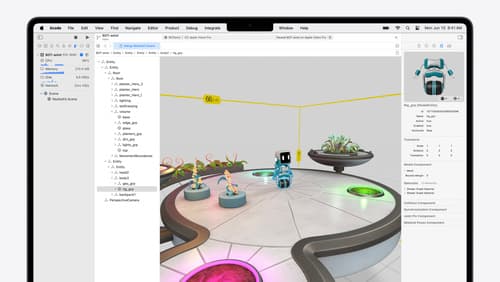

Edit in Reality Composer Pro: Open the default scene in Reality Composer Pro. You can delete the default sphere and create an empty transform entity. Add an anchoring component to this entity, which will serve as the container for your object anchor.

-

Add Virtual Content: You can add various virtual content to your scene using Reality Composer Pro. For example, you can add a video dock preset or other 3D models to your scene. The inspector in Reality Composer Pro allows you to edit properties such as position and rotation of these entities.

-

Implement Object Tracking: To facilitate object tracking, you can introduce a new target named object and associate it with your anchoring component. You can use the RealityKit API to check the entity's

isAnchoredstate and decide what to display in both cases. -

Add SwiftUI Elements: With RealityView attachments, you can place SwiftUI elements on RealityKit anchor entities. Define the SwiftUI elements in the attachments section under RealityView, and then in the scene setup, find this UI entity and add it as a child node.
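The first three steps end with a scene in Reality Composer Pro; loading it into a RealityView can look roughly like the sketch below. It assumes the template defaults: a local Swift package named `RealityKitContent` that exposes `realityKitContentBundle`, and a scene named `"Scene"`. If you renamed either, adjust accordingly. `SceneView` is a hypothetical name.

```swift
import SwiftUI
import RealityKit
import RealityKitContent  // Template-generated package (name assumed).

struct SceneView: View {
    var body: some View {
        RealityView { content in
            // The make closure is async, so the scene can be loaded with await.
            // "Scene" is assumed to be the template's default scene name.
            if let scene = try? await Entity(named: "Scene", in: realityKitContentBundle) {
                content.add(scene)
            }
        }
    }
}
```

You would present `SceneView` from a `WindowGroup` or an `ImmersiveSpace` in your app's scene declaration.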
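For the object tracking step, a possible way to react to `isAnchored` is sketched below. It reads the initial value from `Entity.isAnchored` and then subscribes to `SceneEvents.AnchoredStateChanged` to show or hide content as tracking is gained or lost. This is only one option. The entity names `"ObjectAnchor"` (the empty transform entity with the anchoring component) and `"AnchoredContent"` (a child holding your virtual content) are hypothetical, and the anchoring target and reference object are assumed to be configured in Reality Composer Pro already.

```swift
import SwiftUI
import RealityKit
import RealityKitContent  // Template-generated package (name assumed).

struct ObjectAnchorStatusView: View {
    // Keep the subscription alive for as long as the view exists.
    @State private var anchorSubscription: EventSubscription?

    var body: some View {
        RealityView { content in
            guard let scene = try? await Entity(named: "Scene", in: realityKitContentBundle) else { return }
            content.add(scene)

            // Hypothetical entity names set in Reality Composer Pro.
            guard let anchor = scene.findEntity(named: "ObjectAnchor"),
                  let anchoredContent = anchor.findEntity(named: "AnchoredContent") else { return }

            // Initial state: show the content only if the anchor is already tracked.
            anchoredContent.isEnabled = anchor.isAnchored

            // Update visibility whenever this anchor gains or loses its anchored state.
            anchorSubscription = content.subscribe(
                to: SceneEvents.AnchoredStateChanged.self,
                on: anchor
            ) { event in
                anchoredContent.isEnabled = event.isAnchored
            }
        }
    }
}
```

Instead of hiding the content, the same handler could swap in a placeholder or an instruction such as "Look at the object" while `isAnchored` is false.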
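The attachments step can be sketched as follows. The SwiftUI view is declared in the `attachments` closure with an ID. In the make closure, `attachments.entity(for:)` returns the entity RealityKit created for it, which is then added as a child of the anchor. The anchor name `"ObjectAnchor"`, the ID `"objectLabel"`, and the 10 cm offset are illustrative assumptions.

```swift
import SwiftUI
import RealityKit
import RealityKitContent  // Template-generated package (name assumed).

struct AnchorLabelView: View {
    var body: some View {
        RealityView { content, attachments in
            guard let scene = try? await Entity(named: "Scene", in: realityKitContentBundle) else { return }
            content.add(scene)

            // Find the entity generated for the SwiftUI attachment and parent it
            // to the (hypothetically named) anchor entity.
            if let anchor = scene.findEntity(named: "ObjectAnchor"),
               let label = attachments.entity(for: "objectLabel") {
                label.position = [0, 0.1, 0]  // 0.1 m above the anchor's origin
                anchor.addChild(label)
            }
        } attachments: {
            // SwiftUI content to place in the RealityKit scene.
            Attachment(id: "objectLabel") {
                Text("Tracked object")
                    .padding()
                    .glassBackgroundEffect()
            }
        }
    }
}
```

Because the label is a child of the anchor, it follows the anchor's transform once the anchor is tracked.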

For more detailed steps and examples, you can refer to the following sessions from WWDC 2024:

- Explore object tracking for visionOS (09:38)

- Enhance the immersion of media viewing in custom environments (04:02)

These sessions provide comprehensive guidance on using Reality Composer Pro and RealityKit APIs to create and manage immersive experiences in visionOS.

Discover RealityKit APIs for iOS, macOS and visionOS

Learn how new cross-platform APIs in RealityKit can help you build immersive apps for iOS, macOS, and visionOS. Check out the new hover effects, lights and shadows, and portal crossing features, and view them in action through real examples.

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.

Enhance the immersion of media viewing in custom environments

Extend your media viewing experience using Reality Composer Pro components like Docking Region, Reverb, and Virtual Environment Probe. Find out how to further enhance immersion using Reflections, Tint Surroundings Effect, SharePlay, and the Immersive Environment Picker.