How do I get real-time hand tracking data from visionOS?

Asked on 2024-07-29


To get real-time hand tracking data in visionOS, use ARKit's hand tracking provider (`HandTrackingProvider`). Here are the steps and relevant details:

- Hand Tracking Provider: visionOS can track a person's hands and fingers. You can either poll the hand tracking provider for the latest hand anchors or receive hand anchors asynchronously as they become available. In visionOS 2, the provider delivers hand data at display rate, which minimizes latency.

- Hand Anchors: Use hand anchors to detect gestures and to anchor virtual content to the user's hands, with either ARKit or RealityKit.

- Hands Prediction API: To minimize the delay in hand tracking results, ARKit can predict the hand anchors expected at a near-future time. This is particularly useful if your app renders with Compositor Services or RealityKit.

- Setting Up in RealityKit: In RealityKit, anchor entities affix a RealityKit entity to an anchor tracked by ARKit. For example, you can create an anchor entity for a specific location on a hand, such as the thumb tip or the index fingertip.
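The provider and hand-anchor steps above can be sketched in Swift as follows. This is a minimal sketch, not Apple sample code: `HandTrackingModel`, `isPinching`, and its 1.5 cm threshold are hypothetical names and values chosen for illustration. It assumes the app has an open immersive space (ARKit only delivers hand data while one is open) and declares `NSHandsTrackingUsageDescription` in its Info.plist. The ARKit parts are wrapped in `#if os(visionOS)` so the pure distance check compiles on any platform.

```swift
#if os(visionOS)
import ARKit
import simd
#endif

/// Hypothetical gesture check: true when the thumb tip and index fingertip
/// (meters, same coordinate space) are closer than `threshold`.
func isPinching(thumbTip: SIMD3<Float>, indexTip: SIMD3<Float>, threshold: Float = 0.015) -> Bool {
    let delta = thumbTip - indexTip
    return (delta * delta).sum().squareRoot() < threshold
}

#if os(visionOS)
/// Minimal hand-tracking loop; start it after an ImmersiveSpace is open.
@MainActor
final class HandTrackingModel {
    private let session = ARKitSession()
    private let handTracking = HandTrackingProvider()

    /// Option 1: receive hand anchors asynchronously as ARKit updates them.
    func run() async {
        guard HandTrackingProvider.isSupported else { return }
        do {
            try await session.run([handTracking])
        } catch {
            print("ARKit session failed to start: \(error)")
            return
        }
        for await update in handTracking.anchorUpdates {
            let hand = update.anchor
            guard hand.isTracked,
                  let thumb = worldPosition(of: .thumbTip, in: hand),
                  let index = worldPosition(of: .indexFingerTip, in: hand) else { continue }
            print("\(hand.chirality) hand pinching: \(isPinching(thumbTip: thumb, indexTip: index))")
        }
    }

    /// Option 2: poll the most recent anchors, e.g. once per rendered frame.
    func latestHands() -> (left: HandAnchor?, right: HandAnchor?) {
        let latest = handTracking.latestAnchors
        return (latest.leftHand, latest.rightHand)
    }

    /// Joint transforms are relative to the hand anchor, so combine them with
    /// the anchor's origin transform to get a world-space position.
    private func worldPosition(of name: HandSkeleton.JointName, in hand: HandAnchor) -> SIMD3<Float>? {
        guard let skeleton = hand.handSkeleton else { return nil }
        let joint = skeleton.joint(name)
        guard joint.isTracked else { return nil }
        let world = hand.originFromAnchorTransform * joint.anchorFromJointTransform
        return SIMD3(world.columns.3.x, world.columns.3.y, world.columns.3.z)
    }
}
#endif
```

Streaming (`anchorUpdates`) suits event-style logic such as gesture detection, while polling (`latestAnchors`) fits a render loop that only needs the newest pose each frame.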
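The hands prediction idea described above can be sketched with the visionOS 2 `HandTrackingProvider.handAnchors(at:)` query, assuming a provider that is already running in an `ARKitSession`. The helper name `predictedHands` is hypothetical, and the source of `targetTime` (for example, the predicted presentation time of the frame a Compositor Services renderer is drawing, converted to seconds) is an assumption to verify against the ARKit documentation.

```swift
#if os(visionOS)
import ARKit

/// Hypothetical helper: returns the tracked hands ARKit predicts for `targetTime`.
/// `targetTime` is assumed to be the frame's expected presentation time, in
/// seconds, on the same clock ARKit uses for anchor timestamps.
@available(visionOS 2.0, *)
func predictedHands(from provider: HandTrackingProvider, at targetTime: TimeInterval) -> [HandAnchor] {
    let predicted = provider.handAnchors(at: targetTime)
    // Each side is optional; a hand that is out of view may come back nil.
    return [predicted.leftHand, predicted.rightHand]
        .compactMap { $0 }
        .filter { $0.isTracked }
}
#endif
```

Querying at the frame's presentation time rather than the current time is what hides tracking latency: the returned poses are where ARKit expects the hands to be when the frame reaches the display.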
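The RealityKit setup described above might look like this in a SwiftUI `RealityView` shown inside an `ImmersiveSpace`. This is a minimal sketch assuming visionOS 2, where `SpatialTrackingSession` is available; `HandAnchoredView` and the sphere markers are hypothetical. The anchor entities follow the left thumb tip and index fingertip. Running the spatial tracking session with `.hand` tracking is what lets the app read those entities' transforms (for example, to measure a pinch), rather than only display content attached to them.

```swift
#if os(visionOS)
import SwiftUI
import RealityKit

@available(visionOS 2.0, *)
struct HandAnchoredView: View {
    // Keep the session alive for as long as the view exists.
    @State private var session = SpatialTrackingSession()

    var body: some View {
        RealityView { content in
            // Request hand tracking so anchor entity transforms are readable by the app.
            let configuration = SpatialTrackingSession.Configuration(tracking: [.hand])
            _ = await session.run(configuration)

            // One anchor entity per hand location of interest.
            let thumbTip = AnchorEntity(.hand(.left, location: .thumbTip))
            let indexTip = AnchorEntity(.hand(.left, location: .indexFingerTip))

            for anchor in [thumbTip, indexTip] {
                // A small sphere so the tracked location is visible.
                let marker = ModelEntity(
                    mesh: .generateSphere(radius: 0.005),
                    materials: [SimpleMaterial(color: .cyan, isMetallic: false)]
                )
                anchor.addChild(marker)
                content.add(anchor)
            }
        }
    }
}
#endif
```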

For more detailed information, you can refer to the following sessions and chapters:

- Create enhanced spatial computing experiences with ARKit (Hand tracking)

- Build a spatial drawing app with RealityKit (Set up spatial tracking)

These sessions provide detailed guidance on implementing and using hand tracking in visionOS.

Discover RealityKit APIs for iOS, macOS and visionOS

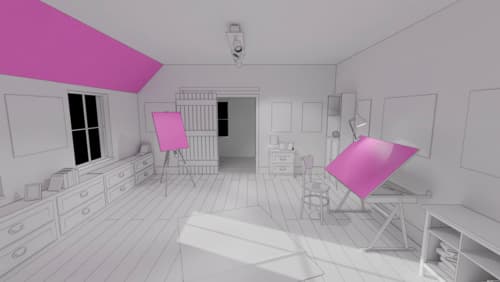

Learn how new cross-platform APIs in RealityKit can help you build immersive apps for iOS, macOS, and visionOS. Check out the new hover effects, lights and shadows, and portal crossing features, and view them in action through real examples.

Build immersive web experiences with WebXR

Discover how WebXR empowers you to add fully immersive experiences to your website in visionOS. Find out how to build WebXR experiences that take full advantage of the input capabilities of visionOS, and learn how you can use Simulator to test WebXR experiences on macOS.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.