What are big new APIs announced that can be used in an iPhone app?

Asked on 2024-08-01

Here are some of the big new APIs announced at WWDC 2024 that can be used in an iPhone app:

- Apple Intelligence: A new personal intelligence system that brings powerful generative models to iOS, iPadOS, and macOS. It can understand and generate language and images, and it helps users take actions with rich awareness of their personal context. It is deeply integrated into features and apps across the system (Platforms State of the Union).

- Controls API: This new API lets developers create controls that toggle settings, execute actions, or deep link to specific experiences. These controls can be added to Control Center or assigned to the Action button on the iPhone 15 Pro (Platforms State of the Union).

- RealityKit 4: RealityKit has been updated to support iOS, iPadOS, and macOS, making it easier to build 3D and spatial experiences across these platforms. It adds new capabilities for rendering 3D models in various styles and integrates with Reality Composer Pro (Platforms State of the Union).

- Vision Framework: The Vision framework has been enhanced with a new Swift API, hand pose detection within body pose requests, and aesthetic score requests. This makes it easier to integrate visual intelligence capabilities into apps (Explore machine learning on Apple platforms).

- Translation API: A new framework for language translation lets apps perform direct language-to-language translation, either with a simple built-in UI or through an API that translates text and lets the app display the output in any UI (Explore machine learning on Apple platforms).
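To make the Translation item more concrete, here is a minimal sketch of the programmatic route, assuming iOS 18 and the SwiftUI `translationTask` modifier from the Translation framework. The view name, sample text, and language pair are hypothetical and chosen only for illustration.

```swift
import SwiftUI
import Translation

// Hypothetical view: translates a fixed German string to English on demand.
struct TranslatedGreetingView: View {
    private let sourceText = "Guten Morgen"   // sample input (assumption)
    @State private var translatedText = ""
    // A non-nil configuration triggers the translation task below.
    @State private var configuration: TranslationSession.Configuration?

    var body: some View {
        VStack(spacing: 12) {
            Text(sourceText)
            Text(translatedText)
            Button("Translate") {
                if configuration == nil {
                    configuration = TranslationSession.Configuration(
                        source: Locale.Language(identifier: "de"),
                        target: Locale.Language(identifier: "en")
                    )
                } else {
                    // Re-run the task with the same language pair.
                    configuration?.invalidate()
                }
            }
        }
        .translationTask(configuration) { session in
            do {
                // The session translates the string; the app decides how to show it.
                let response = try await session.translate(sourceText)
                translatedText = response.targetText
            } catch {
                translatedText = "Translation unavailable"
            }
        }
    }
}
```

For the built-in UI route, the `translationPresentation(isPresented:text:)` modifier presents the system translation sheet instead, so the app does not need to manage a session itself.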

These APIs provide powerful new capabilities for iPhone apps, enabling developers to create more intelligent, customizable, and immersive experiences.
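As an illustration of the Controls API item above, the following is a minimal sketch of a button-style control, assuming iOS 18 with WidgetKit and App Intents. The intent, the control name, and the `kind` identifier are hypothetical placeholders, not anything named in the sessions.

```swift
import AppIntents
import SwiftUI
import WidgetKit

// Hypothetical intent: the action the control runs when the user taps it.
struct StartWorkoutIntent: AppIntent {
    static let title: LocalizedStringResource = "Start Workout"

    func perform() async throws -> some IntentResult {
        // App-specific work would go here (assumption: nothing to return).
        return .result()
    }
}

// Hypothetical control that executes the intent above.
struct StartWorkoutControl: ControlWidget {
    var body: some ControlWidgetConfiguration {
        // The kind string is a made-up identifier; it should be unique per control.
        StaticControlConfiguration(kind: "com.example.myapp.start-workout") {
            ControlWidgetButton(action: StartWorkoutIntent()) {
                Label("Start Workout", systemImage: "figure.run")
            }
        }
    }
}
```

Controls live in a widget extension, so a control like this would be listed in that extension's `WidgetBundle` alongside any widgets. A toggle-style control follows the same pattern using `ControlWidgetToggle`.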

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.

Platforms State of the Union

Discover the newest advancements on Apple platforms.

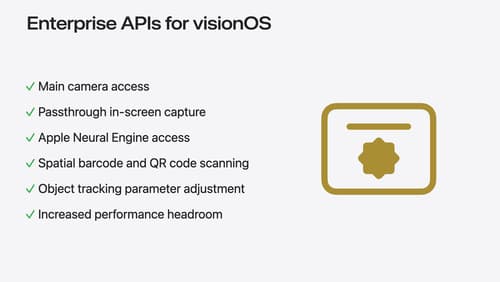

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.