How do I fit a RealityKit Entity to the bounds of the view?

Asked on 2024-08-01

To fit a RealityKit Entity to the bounds of the view, use the RealityKit debugger to inspect your entities' transformations, then adjust those transformations in your code. Here are some steps and tips based on WWDC sessions:

- Inspect the Entity: Use the RealityKit debugger to inspect your entity and its transformations. This can help you understand how the entity is currently positioned and scaled within the scene.
  - Break into the RealityKit debugger (00:01:48)

- Adjust Transformations: Ensure that the transformations (position, orientation, and scale) of the entity are set correctly. You might need to adjust these transformations to fit the entity within the bounds of the view.
  - Break into the RealityKit debugger (00:03:46)

- Hierarchy Considerations: Be mindful of the transformation hierarchies. The final placement of an entity is a combination of its own transformations and those of all its ancestors. Adjusting the hierarchy might be necessary to achieve the desired fit.
  - Break into the RealityKit debugger (00:04:44)

- Use Anchor Entities: If your app uses an immersive space, you can anchor RealityKit content to the world using anchor entities. This can help in setting up the correct positioning and scaling.
  - Build a spatial drawing app with RealityKit (00:05:07)

Together, these steps help you find out why an entity overflows or underfills the view and correct its transform so that it fits.
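
The scale adjustment and the hierarchy point above come down to a little arithmetic, sketched here in plain Swift so it runs without RealityKit. The helper names `fitScale` and `localScale` are hypothetical and not part of any Apple API. In a real app the inputs would likely come from `Entity.visualBounds(relativeTo:)` and, on visionOS, from the view's frame converted into scene space; that wiring is an assumption and is not shown or tested here.

```swift
// Hypothetical helper (not a RealityKit API): the uniform scale factor that
// makes a box of size `extents` fit inside a box of size `target` while
// preserving the entity's proportions.
func fitScale(extents: SIMD3<Float>, target: SIMD3<Float>) -> Float {
    var scale = Float.greatestFiniteMagnitude
    // Skip degenerate axes (for example, a flat plane has zero depth).
    for axis in 0..<3 where extents[axis] > 0 {
        scale = min(scale, target[axis] / extents[axis])
    }
    // If every axis was degenerate, leave the entity unscaled.
    return scale == .greatestFiniteMagnitude ? 1 : scale
}

// Hypothetical helper: an entity's final scale is its own scale multiplied by
// the scales of its ancestors, so divide out the parent's accumulated scale
// to get the local value to assign.
func localScale(forWorldScale world: Float, parentWorldScale: Float) -> Float {
    return world / parentWorldScale
}

// Example values (assumed): an entity measuring 2 m x 1 m x 0.5 m in scene
// space, and a view volume of 1 m on each side.
let entityExtents = SIMD3<Float>(2, 1, 0.5)
let viewExtents = SIMD3<Float>(1, 1, 1)

let factor = fitScale(extents: entityExtents, target: viewExtents)
print(factor) // prints 0.5

// If the entity's parent is already scaled by 2, the local scale is smaller.
print(localScale(forWorldScale: factor, parentWorldScale: 2)) // prints 0.25
```

If the bounds you measure already include the entity's current scale, multiply that current scale by the factor rather than replacing it. Taking the minimum ratio across axes keeps the whole entity inside the view; taking the maximum instead would fill the view and let the entity overflow on the other axes.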

Build a spatial drawing app with RealityKit

Harness the power of RealityKit through the process of building a spatial drawing app. As you create an eye-catching spatial experience that integrates RealityKit with ARKit and SwiftUI, you’ll explore how resources work in RealityKit and how to use features like low-level mesh and texture APIs to achieve fast updates of the users’ brush strokes.

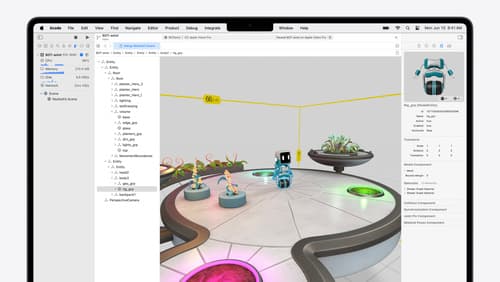

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.