What’s the format of the file to train a multi-image recognition neural network?

Asked on 2024-08-02

The file format for training a multi-image recognition neural network depends on the tools you use and on what you need the model to do. Based on the WWDC sessions, these are the key points:

- Data Formats: Common data formats for training models include 32-bit and 16-bit floating point numbers. These formats are useful for updating parameters during training. After training, quantization can reduce memory requirements by representing values as 8-bit integers, which shrinks the model and improves throughput (Train your machine learning and AI models on Apple GPUs).

- Annotations and Data Sources: When building image-based models in the Create ML app, verify that your annotations match your expectations. You can explore your data sources and visualize the annotations to confirm they are correct before you start training (What’s new in Create ML).

- 3D Models for Object Tracking: Object tracking requires a 3D model that represents the real-world object. This 3D asset needs to be in the USDZ file format for the best results (Explore object tracking for visionOS).

- Core ML Format: After training your model, you can convert it to the Core ML format using Core ML tools. This format is optimized for Apple hardware and supports various compression techniques (Explore machine learning on Apple platforms).
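
To make the quantization point above concrete, here is a minimal Python sketch of symmetric linear quantization, one common way to store floating point weights as 8-bit integers. It is an illustration only, not the method from the session or the Core ML tools API; the function names `quantize_int8` and `dequantize_int8` and the sample weights are hypothetical.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers using one shared scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # The largest magnitude maps to 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric int8 range.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, used only to exercise the two functions.
weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize_int8(quantized, scale)

for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

Each value now needs one byte instead of four, at the cost of a rounding error of at most half the scale factor per value. Production tools typically choose scales per tensor or per channel rather than for a whole list at once.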

Relevant Sessions

- Train your machine learning and AI models on Apple GPUs

- What’s new in Create ML

- Explore object tracking for visionOS

- Explore machine learning on Apple platforms

These sessions provide detailed insights into the data formats, tools, and workflows for training multi-image recognition neural networks on Apple platforms.
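
As an illustration of the annotation check described above, the following Python sketch counts labels and flags suspicious bounding boxes before training. It assumes an object detection annotation file laid out as a JSON array of entries with `image`, `annotations`, `label`, and `coordinates` (`x`, `y`, `width`, `height`) fields, which is the layout Create ML documents for object detection; verify the field names against the current documentation. The sample data and the helper name `summarize_annotations` are hypothetical.

```python
import json
from collections import Counter

# Hypothetical sample in the assumed layout; a real project would read a file.
SAMPLE = """
[
  {"image": "photo1.jpg", "annotations": [
    {"label": "cat", "coordinates": {"x": 120, "y": 90, "width": 80, "height": 60}},
    {"label": "dog", "coordinates": {"x": 300, "y": 200, "width": 120, "height": 90}}
  ]},
  {"image": "photo2.jpg", "annotations": [
    {"label": "cat", "coordinates": {"x": 64, "y": 64, "width": 40, "height": 40}},
    {"label": "dog", "coordinates": {"x": 10, "y": 10, "width": 0, "height": 40}}
  ]}
]
"""


def summarize_annotations(entries):
    """Return (label counts, list of problem descriptions)."""
    counts = Counter()
    problems = []
    for entry in entries:
        image = entry.get("image")
        if not image:
            problems.append("entry without an image name")
            continue
        for annotation in entry.get("annotations", []):
            label = annotation.get("label")
            box = annotation.get("coordinates", {})
            if not label:
                problems.append(f"{image}: annotation without a label")
            elif box.get("width", 0) <= 0 or box.get("height", 0) <= 0:
                problems.append(f"{image}: box for {label!r} has no area")
            else:
                counts[label] += 1
    return counts, problems


counts, problems = summarize_annotations(json.loads(SAMPLE))
print("labels:", dict(counts))
print("problems:", problems)
```

A strongly unbalanced label count or a non-empty problem list is a signal to revisit the data before training, which complements the visual inspection the Create ML app offers.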

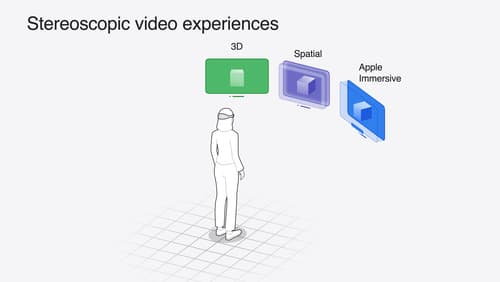

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.