How to make Dynamic Island

Asked on 2024-08-02

Building a feature similar to Dynamic Island combines UI design with interaction principles. The provided WWDC sessions do not cover how Dynamic Island itself is implemented, but several of the concepts and APIs they discuss can help you build dynamic, interactive UI elements.

Here are some relevant sessions and concepts that might help you:

- Discover RealityKit APIs for iOS, macOS, and visionOS
  - This session covers various APIs that can be used to create dynamic and interactive elements in your app. For example, you can use force effects and joints to add physics-based interactions, and dynamic lights to enhance the visual appeal of your UI.
  - Discover RealityKit APIs for iOS, macOS, and visionOS (09:05)

- Build a spatial drawing app with RealityKit
  - This session discusses how to build a spatial user interface, which can be useful for creating interactive and dynamic UI elements. It also covers how to generate 3D models from 2D vector content, which can be useful for creating custom UI components.
  - Build a spatial drawing app with RealityKit (05:42)

- Dive deep into volumes and immersive spaces
  - This session explores how to create immersive spaces and dynamic scaling, which can be useful for creating UI elements that adapt to different contexts and user interactions.
  - Dive deep into volumes and immersive spaces (16:52)

- Optimize your 3D assets for spatial computing
  - This session provides insights into optimizing 3D assets, which can be useful if your dynamic UI elements involve 3D models. It also covers the setup of sky domes and image-based lighting, which can enhance the visual quality of your UI.
  - Optimize your 3D assets for spatial computing (15:07)

By combining these concepts and APIs, you can create dynamic and interactive UI elements that enhance the user experience, similar to the Dynamic Island feature.
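
As one way to picture the "dynamic and interactive UI element" idea, here is a minimal SwiftUI sketch of a pill-shaped view that expands on tap. It is not Apple's Dynamic Island: third-party apps reach the system feature through ActivityKit Live Activities, which the sessions above do not cover. This sketch only imitates the look inside your own view hierarchy. `IslandState`, `IslandView`, the sizes, and the label text are all hypothetical names and values chosen for illustration.

```swift
import Foundation
#if canImport(SwiftUI)
import SwiftUI
#endif

/// Hypothetical presentation states for an island-style overlay.
enum IslandState {
    case compact, expanded

    /// The opposite state; used to flip the view on tap.
    var toggled: IslandState { self == .compact ? .expanded : .compact }

    /// Illustrative sizes in points (assumed values, not Apple's metrics).
    var width: Double { self == .compact ? 120 : 340 }
    var height: Double { self == .compact ? 36 : 84 }
}

#if canImport(SwiftUI)
/// A black pill that springs between its compact and expanded sizes.
struct IslandView: View {
    @State private var state: IslandState = .compact

    var body: some View {
        // A corner radius of half the height keeps the ends fully rounded.
        RoundedRectangle(cornerRadius: CGFloat(state.height / 2), style: .continuous)
            .fill(Color.black)
            .frame(width: CGFloat(state.width), height: CGFloat(state.height))
            .overlay(
                // Placeholder content; a real view would show app-specific status.
                Text(state == .compact ? "Timer" : "Timer: 04:32 remaining")
                    .font(.footnote)
                    .foregroundColor(.white)
            )
            .onTapGesture {
                // Animate the size change with a spring so it feels elastic.
                withAnimation(.spring()) {
                    state = state.toggled
                }
            }
    }
}
#endif
```

Placing `IslandView()` as the top layer of a `ZStack`, aligned to the top of the screen, would approximate the on-screen position. Keeping the sizes in a plain enum separates the state logic from the view, so it can be checked without rendering anything.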

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.

Optimize your 3D assets for spatial computing

Dive into an end-to-end workflow for optimized 3D asset creation. Discover best practices for optimizing meshes, materials, and textures in your digital content creation tool. Learn how to harness shader graph, baking, and material instances to enhance your 3D scene while optimizing performance. Take advantage of native tools to work more effectively with your assets and improve your app’s performance.

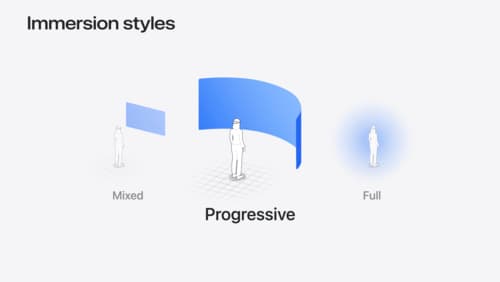

Dive deep into volumes and immersive spaces

Discover powerful new ways to customize volumes and immersive spaces in visionOS. Learn to fine-tune how volumes resize and respond to people moving around them. Make volumes and immersive spaces interact through the power of coordinate conversions. Find out how to make your app react when people adjust immersion with the Digital Crown, and use a surrounding effect to dynamically customize the passthrough tint in your immersive space experience.