What is Combine?

Asked on 2024-08-02


Combine is not mentioned in the provided context. If you have any other questions about the content presented by Apple at WWDC, feel free to ask! Here are some of the sessions mentioned in the context:

- Bring your machine learning and AI models to Apple silicon

- Deploy machine learning and AI models on-device with Core ML

- Support real-time ML inference on the CPU

- Demystify explicitly built modules

If you need information on any specific topic or session, let me know!

Demystify explicitly built modules

Explore how builds are changing in Xcode 16 with explicitly built modules. Discover how modules are used to build your code, how explicitly built modules improve transparency in compilation tasks, and how you can optimize your build by sharing modules across targets.

Bring your machine learning and AI models to Apple silicon

Learn how to optimize your machine learning and AI models to leverage the power of Apple silicon. Review model conversion workflows to prepare your models for on-device deployment. Understand model compression techniques that are compatible with Apple silicon, and at what stages in your model deployment workflow you can apply them. We’ll also explore the tradeoffs between storage size, latency, power usage and accuracy.

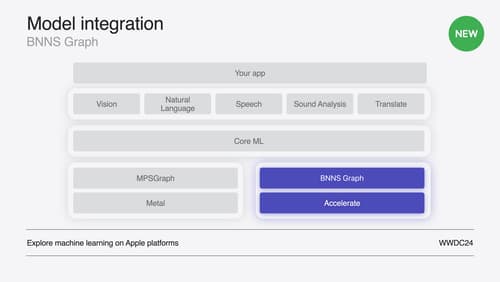

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU, and share how it provides real-time guarantees, such as no runtime memory allocation and single-threaded execution, for audio or signal processing models.