How can you get a text description of voice recordings?

Asked on 2024-08-02


To get a text transcription of speech on the web, you can use the Web Speech API. This proposed web standard provides a speech recognition interface that lets a page respond to voice input in real time. In the implementation discussed in the session referenced below, speech is processed locally, so no audio needs to leave the device. Here's a brief overview of how it works:

- Voice Input: Users can use voice input for any text field by tapping the microphone icon that appears with the keyboard.

- Speech Recognition: The Web Speech API lets you register a handler for result events. Each event carries a result list containing all of the snippets the recognizer has picked up so far, and you can extract the transcript from those results.
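The result-handling flow described above can be sketched in TypeScript. This is a minimal sketch under stated assumptions, not a definitive implementation. The interfaces and the helpers extractTranscript and startRecognition are hypothetical names introduced here. They model only the Web Speech API members the sketch uses: the continuous, interimResults, lang, and onresult properties and the start() method. The sketch assumes the browser exposes the constructor as SpeechRecognition or as the WebKit-prefixed webkitSpeechRecognition, and the "en-US" language setting is an arbitrary choice.

```typescript
// Hypothetical structural types modeling only the Web Speech API members used below.
interface TranscriptAlternative {
  transcript: string;
  confidence: number;
}

// One recognized snippet: a list of alternatives, best guess first.
interface RecognitionResult {
  readonly length: number;
  [index: number]: TranscriptAlternative;
}

// Every snippet the recognizer has picked up so far.
interface RecognitionResultList {
  readonly length: number;
  [index: number]: RecognitionResult;
}

interface RecognizerLike {
  continuous: boolean;
  interimResults: boolean;
  lang: string;
  onresult: ((event: { results: RecognitionResultList }) => void) | null;
  start(): void;
}

// Join the top alternative of each snippet into one transcript string.
function extractTranscript(results: RecognitionResultList): string {
  let text = "";
  for (let i = 0; i < results.length; i++) {
    text += results[i][0].transcript;
  }
  return text;
}

// Create a recognizer, register a result handler, and start listening.
function startRecognition(
  Recognizer: new () => RecognizerLike,
  onTranscript: (text: string) => void,
): RecognizerLike {
  const recognizer = new Recognizer();
  recognizer.continuous = true; // keep listening across pauses
  recognizer.interimResults = true; // deliver partial results while the user speaks
  recognizer.lang = "en-US"; // assumption: English input
  recognizer.onresult = (event) => onTranscript(extractTranscript(event.results));
  recognizer.start();
  return recognizer;
}

// In a browser, look up the standard or WebKit-prefixed constructor; do nothing where neither exists.
const scope = globalThis as any;
const SpeechRecognitionCtor = scope.SpeechRecognition || scope.webkitSpeechRecognition;
if (SpeechRecognitionCtor) {
  startRecognition(SpeechRecognitionCtor, (text) => console.log(text));
}
```

Because the constructor is passed in as a parameter, the handler logic can be exercised with a stand-in object where the Web Speech API is unavailable. In a real browser, the user is typically asked for microphone permission the first time start() is called.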

For more details, you can refer to the session Optimize for the spatial web.

Additionally, Apple provides native frameworks for more advanced use cases. The Speech framework converts speech to text, including audio from prerecorded files. The Natural Language framework can then analyze the resulting text. You can explore these capabilities in the session Explore machine learning on Apple platforms.

Relevant Sessions

Catch up on accessibility in SwiftUI

SwiftUI makes it easy to build amazing experiences that are accessible to everyone. We’ll discover how assistive technologies understand and navigate your app through the rich accessibility elements provided by SwiftUI. We’ll also discuss how you can further customize these experiences by providing more information about your app’s content and interactions by using accessibility modifiers.

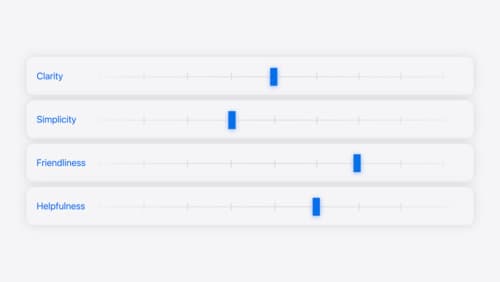

Add personality to your app through UX writing

Every app has a personality that comes across in what you say — and how you say it. Learn how to define your app’s voice and modulate your tone for every situation, from celebratory notifications to error messages. We’ll help you get specific about your app’s purpose and audience and practice writing in different tones.

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.