What’s new in AI?

Asked on 2024-08-02

Apple announced several AI advancements at WWDC 2024, most of them under the umbrella of "Apple Intelligence." Here are the key highlights:

Apple Intelligence:

- Apple Intelligence is a personal intelligence system that integrates powerful generative models into iOS, iPadOS, and macOS. It enhances the ability to understand and generate language and images, helping users take actions with rich awareness of personal context. This system is built with privacy from the ground up (Platforms State of the Union).

Machine Learning Frameworks:

- Apple’s built-in machine learning frameworks cover natural language processing, sound analysis, speech understanding, and vision intelligence. The Vision framework, in particular, is getting a new Swift API. Developers can use Create ML to bring in additional data for training, and can separately import and run on-device AI models, such as large language or diffusion models (Platforms State of the Union).

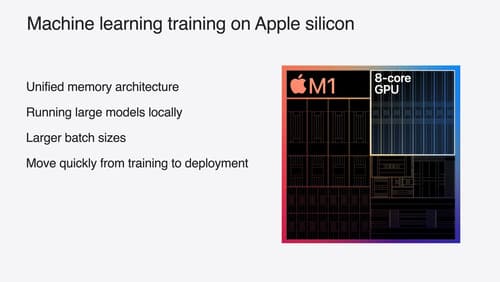

Training on Apple Silicon:

- Frameworks such as PyTorch, TensorFlow, JAX, and MLX can take advantage of Apple Silicon's hardware acceleration and unified memory, which allows AI models to be trained and deployed efficiently on Apple devices (Platforms State of the Union).
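
As a rough illustration of the training point above, the sketch below picks a device for PyTorch, preferring the Metal-backed `mps` device when it is available. The helper name `pick_training_device` is hypothetical, and the code assumes PyTorch may or may not be installed, falling back to the CPU in that case.

```python
def pick_training_device() -> str:
    """Return "mps" when PyTorch's Metal backend is usable, otherwise "cpu".

    Hypothetical helper for illustration; not taken from any WWDC session.
    """
    try:
        import torch  # optional third-party dependency
    except ImportError:
        # PyTorch is not installed, so there is nothing to accelerate.
        return "cpu"

    # Older PyTorch builds may not ship the mps backend module at all.
    mps_backend = getattr(torch.backends, "mps", None)
    if mps_backend is not None and mps_backend.is_available():
        return "mps"
    return "cpu"


device = pick_training_device()
print(f"Training would run on: {device}")
```

With PyTorch installed, the returned string can be passed to `torch.device(...)` and then to `model.to(...)` so that tensors and parameters live on the selected device.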

Siri Enhancements:

- Siri has been improved with new large language models, making it more natural, more contextually relevant, and more personal. Siri can now understand on-screen content and take actions based on it, and it has richer language understanding capabilities (Bring your app to Siri).

System-Level Features:

- New system-level features powered by Apple Intelligence include Writing Tools, which help users communicate more effectively by rewriting text for tone and clarity, proofreading, and summarizing key points. Image Playground lets developers add image creation features to their apps without training their own models (Explore machine learning on Apple platforms).

Generative Tools in Xcode:

- Generative intelligence is transforming the way developers write code. New intelligence capabilities are being added to Xcode, helping developers write Swift code, create experiences with SwiftUI, and get performance insights using Instruments (Platforms State of the Union).

For more detailed information, you can explore the following sessions:

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Train your machine learning and AI models on Apple GPUs

Learn how to train your models on Apple Silicon with Metal for PyTorch, JAX and TensorFlow. Take advantage of new attention operations and quantization support for improved transformer model performance on your devices.

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.
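
The training session description above mentions quantization support. As a generic illustration of the idea only, and not Apple's or any framework's implementation, the sketch below applies symmetric 8-bit quantization to a short list of weights using plain Python. The function names and sample weights are assumptions chosen for the example.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Avoid dividing by zero when every value is 0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the quantized integers."""
    return [q * scale for q in quantized]


# Made-up sample weights, purely for illustration.
weights = [0.8, -1.2, 0.3, 0.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

Each restored value differs from the original by at most half the scale factor, which is the trade-off quantization makes: smaller storage and faster arithmetic in exchange for a bounded loss of precision.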