What changes have been made to Vision Kit?

Asked on 2024-08-02

Changes to Vision Kit

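Several updates have been made to the vision-related APIs on Apple platforms, particularly for Apple Vision Pro and visionOS. Note that the scene understanding, object tracking, and barcode scanning features below are exposed through ARKit on visionOS, while the Swift enhancements belong to the Vision framework. The key changes are: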

- Scene Understanding Capabilities:

  - Plane Detection: Planes can now be detected in all orientations, so objects can be anchored to a variety of surfaces in your surroundings.

  - Room Anchors: Room anchors have been introduced. They describe the user's surroundings on a per-room basis and make it possible to detect the user's movement across rooms.

  - Object Tracking API: A new object tracking API for visionOS lets you attach content to individual objects found around the user, enabling new dimensions of interactivity. For example, you can attach virtual content such as instructions to a physical object (Platforms State of the Union).
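As a rough illustration of the plane detection and room anchor points above, the following is a minimal sketch using ARKit on visionOS 2. It assumes the app already has an open immersive space and world-sensing permission; the function names `logPlanes`, `logRooms`, and `observeSurroundings` are hypothetical and exist only for this example.

```swift
import ARKit

// Hypothetical helper: logs plane anchors as they are added or updated.
func logPlanes(from provider: PlaneDetectionProvider) async {
    for await update in provider.anchorUpdates {
        switch update.event {
        case .added, .updated:
            print("Plane \(update.anchor.id) alignment: \(update.anchor.alignment)")
        default:
            break
        }
    }
}

// Hypothetical helper: reports the room anchor the user is currently in.
func logRooms(from provider: RoomTrackingProvider) async {
    for await update in provider.anchorUpdates where update.anchor.isCurrentRoom {
        print("Current room anchor: \(update.anchor.id)")
    }
}

// Runs both providers on one session. Assumes an immersive space is open
// and the user has granted world-sensing authorization.
func observeSurroundings() async throws {
    let session = ARKitSession()
    // Requesting .slanted alongside .horizontal and .vertical covers planes in any orientation.
    let planeDetection = PlaneDetectionProvider(alignments: [.horizontal, .vertical, .slanted])
    let roomTracking = RoomTrackingProvider()
    try await session.run([planeDetection, roomTracking])

    // Consume both update streams concurrently for as long as the session runs.
    async let planes: Void = logPlanes(from: planeDetection)
    async let rooms: Void = logRooms(from: roomTracking)
    _ = await (planes, rooms)
}
```

In a real app the print statements would typically be replaced by code that creates or updates RealityKit entities positioned at each anchor.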

-

Known Object Tracking:

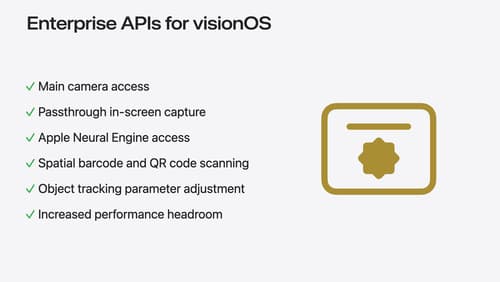

- Enhanced Known Object Tracking: This feature allows apps to have specific reference objects built into their project that they can then detect and track within their viewing area. For enterprise customers, this feature includes configurable parameters to tune and optimize object tracking, such as changing the maximum number of objects tracked at once and modifying the detection rate for identifying new instances of known objects (Introducing enterprise APIs for visionOS).
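The tuning described above might look roughly like the sketch below. It assumes a reference object file is already bundled with the app and that the app holds the enterprise entitlement and license required for parameter adjustment; the function name `trackKnownObject`, the URL parameter, and the numeric values are illustrative assumptions, not recommended settings.

```swift
import ARKit

// Hypothetical entry point: tracks one bundled reference object with tuned parameters.
// Assumes the enterprise entitlement for object tracking parameter adjustment is present.
func trackKnownObject(at referenceObjectURL: URL) async throws {
    // Load the reference object that ships with the app.
    let referenceObject = try await ReferenceObject(from: referenceObjectURL)

    // Illustrative values only: raise the number of simultaneously tracked
    // instances and the rate at which new instances are detected.
    var configuration = ObjectTrackingProvider.TrackingConfiguration()
    configuration.maximumTrackableInstances = 15
    configuration.detectionRate = 10.0

    let objectTracking = ObjectTrackingProvider(
        referenceObjects: [referenceObject],
        trackingConfiguration: configuration
    )

    let session = ARKitSession()
    try await session.run([objectTracking])

    for await update in objectTracking.anchorUpdates {
        let anchor = update.anchor
        print("\(anchor.referenceObject.name): \(update.event), tracked: \(anchor.isTracked)")
    }
}
```

Higher detection and tracking rates generally cost more compute, so the values are worth tuning against the specific workflow rather than set to their maximum.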

- Spatial Barcode Scanning:

  - Enterprise APIs: New enterprise APIs provide access to spatial barcode scanning, which can be used in specific workflow use cases to take advantage of spatial computing (Platforms State of the Union).
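For orientation, a minimal sketch of spatial barcode scanning through ARKit on visionOS follows. It assumes the app has the enterprise entitlement and license for barcode detection; the function name `scanBarcodes` and the choice of symbologies are assumptions made for this example.

```swift
import ARKit

// Hypothetical entry point: reports barcodes as they appear in the user's surroundings.
// Assumes the enterprise barcode detection entitlement is present.
func scanBarcodes() async throws {
    let session = ARKitSession()
    // Restrict detection to the symbologies the workflow actually uses.
    let barcodeDetection = BarcodeDetectionProvider(symbologies: [.qr, .code39])
    try await session.run([barcodeDetection])

    for await update in barcodeDetection.anchorUpdates {
        switch update.event {
        case .added:
            // payloadString is nil when the payload cannot be decoded as text.
            print("Found barcode: \(update.anchor.payloadString ?? "<no string payload>")")
        default:
            break
        }
    }
}
```

Because each detection arrives as an anchor, an app can place content such as a highlight or an item description at the barcode's position in space.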

- Swift Enhancements:

  - New Vision API: The Vision framework API has been redesigned around Swift concurrency. Requests are performed with async/await, which removes the need for completion handlers, and the observations a request produces are returned directly from the perform call (Discover Swift enhancements in the Vision framework).
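To make the async/await change concrete, here is a minimal sketch that recognizes text in an image file with the redesigned Vision API. It assumes a deployment target where the new API is available (iOS 18 or macOS 15 and later); the function name `recognizeText` and the `imageURL` parameter are hypothetical.

```swift
import Foundation
import Vision

// Hypothetical helper: returns the best text candidate for each detected region.
@available(iOS 18.0, macOS 15.0, *)
func recognizeText(in imageURL: URL) async throws -> [String] {
    var request = RecognizeTextRequest()
    request.recognitionLevel = .accurate

    // Observations come back directly from perform; no completion handler is involved.
    let observations = try await request.perform(on: imageURL)
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

A caller can use it from any asynchronous context, for example `let lines = try await recognizeText(in: url)`, and handle errors with ordinary `do`/`catch`.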

Relevant Sessions

- Platforms State of the Union

- Discover Swift enhancements in the Vision framework

- Introducing enterprise APIs for visionOS

These updates collectively enhance the capabilities of Vision Kit, making it more powerful and flexible for developers working on spatial computing applications.

Get started with HealthKit in visionOS

Discover how to use HealthKit to create experiences that take full advantage of the spatial canvas. Learn the capabilities of HealthKit on the platform, find out how to bring an existing iPadOS app to visionOS, and explore the special considerations governing HealthKit during a Guest User session. You’ll also learn ways to use SwiftUI, Swift Charts, and Swift concurrency to craft innovative experiences with HealthKit.

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

Discover Swift enhancements in the Vision framework

The Vision Framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.