What changes have been made to human pose detection?

Asked on 2024-08-02

Changes to human pose detection were discussed in the session "Discover Swift enhancements in the Vision framework." Here are the key updates:

- Holistic Body Pose Detection: Previously, body pose and hand pose detection required separate requests. The new holistic body pose feature detects the body and both hands together in a single request.

- Hand Pose Properties: The human body pose observation now includes two additional properties, one holding the right-hand observation and one holding the left-hand observation.
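A minimal Swift sketch of the holistic request follows. It assumes the Swift Vision API for iOS 18 / macOS 15 as recalled from the session: `DetectHumanBodyPoseRequest` with a `detectsHands` flag, and optional `leftHand` / `rightHand` properties on `HumanBodyPoseObservation`. Verify these names against Apple's documentation. The helpers `makeHolisticBodyPoseRequest()` and `describeHolisticPoses(in:)` are hypothetical, written only for illustration.

```swift
import Foundation
import Vision

// Assumption: Swift Vision API (iOS 18 / macOS 15); type and property names
// are as recalled from the session, not confirmed by this answer.

/// Hypothetical helper: builds a body pose request that also returns hand poses.
@available(macOS 15.0, iOS 18.0, *)
func makeHolisticBodyPoseRequest() -> DetectHumanBodyPoseRequest {
    var request = DetectHumanBodyPoseRequest()
    // Attach hand poses to each body pose observation instead of
    // running a separate hand pose request.
    request.detectsHands = true
    return request
}

/// Hypothetical helper: runs the holistic request on an image file and
/// reports, per detected person, whether each hand was found.
@available(macOS 15.0, iOS 18.0, *)
func describeHolisticPoses(in imageURL: URL) async throws -> [String] {
    let request = makeHolisticBodyPoseRequest()
    // One request yields one body pose observation per detected person.
    let bodyPoses = try await request.perform(on: imageURL)

    return bodyPoses.enumerated().map { index, bodyPose in
        // The hand observations are optional: nil when a hand isn't detected.
        let left = bodyPose.leftHand != nil ? "found" : "not found"
        let right = bodyPose.rightHand != nil ? "found" : "not found"
        return "Person \(index + 1): left hand \(left), right hand \(right)"
    }
}
```

For example, `try await describeHolisticPoses(in: URL(fileURLWithPath: "people.jpg"))` (a hypothetical image path) would return one line per detected person. The single `perform(on:)` call is the point of the holistic feature: body and hand data arrive in the same observation, so there is no need to match separate body and hand results afterward.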

These enhancements simplify human pose detection: a single request now returns both body and hand pose data.

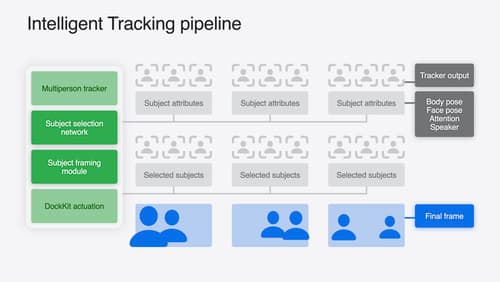

What’s new in DockKit

Discover how intelligent tracking in DockKit allows for smoother transitions between subjects. We will cover what intelligent tracking is, how it uses an ML model to select and track subjects, and how you can use it in your app.

Compose interactive 3D content in Reality Composer Pro

Discover how the Timeline view in Reality Composer Pro can bring your 3D content to life. Learn how to create an animated story in which characters and objects interact with each other and the world around them using inverse kinematics, blend shapes, and skeletal poses. We’ll also show you how to use built-in and custom actions, sequence your actions, apply triggers, and implement natural movements.

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.