How can I build a push to talk app?

Asked on 2024-08-02

To build a push-to-talk app, you can combine several APIs and techniques covered in WWDC sessions. The main pieces, and the sessions that discuss them, are:

- Microphone Input:
  - You need to capture microphone input. The session Capture HDR content with ScreenCaptureKit explains how to capture microphone audio using the SCStream API. You can configure the stream to capture the default microphone and handle the audio samples.

- Speech Recognition:
  - For recognizing speech, you can use the Web Speech API. The session Optimize for the spatial web discusses how to create a speech recognition object and handle the results. This can be useful for converting spoken words into text.

- Speech Synthesis:
  - To provide audio feedback, you can use the Speech Synthesis API. The same session Optimize for the spatial web explains how to create speech synthesis utterance objects and use them to speak text aloud.

- User Experience:
  - Consider the user experience and how the app interacts with the user. The session Add personality to your app through UX writing provides exercises to define your app's voice and tone, which can be helpful in making your app more engaging.

- Permissions:
  - Ensure that you handle permissions properly. Users need to grant microphone access, and it's important to explain why the app needs this permission. This is briefly mentioned in the session Optimize for the spatial web.
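
As a rough illustration of how the speech recognition and speech synthesis steps above might fit together in a web-based push-to-talk flow, here is a minimal TypeScript sketch. It is not taken from the sessions: `PushToTalkController`, `RecognizerLike`, and the other type names are hypothetical, and the recognizer is passed in so that a browser's `SpeechRecognition` object (possibly through a thin adapter or cast) or a test double can be supplied. It assumes the recognizer fires `onend` after `stop()`, as the Web Speech API describes.

```typescript
// Sketch only. RecognizerLike mirrors a small subset of the Web Speech API's
// SpeechRecognition object; every name defined here is hypothetical.
interface RecognitionResultLike extends ArrayLike<{ transcript: string }> {
  isFinal: boolean;
}

interface RecognitionEventLike {
  resultIndex: number;
  results: ArrayLike<RecognitionResultLike>;
}

interface RecognizerLike {
  continuous: boolean;
  interimResults: boolean;
  onresult: ((event: RecognitionEventLike) => void) | null;
  onend: (() => void) | null;
  start(): void;
  stop(): void;
}

type TalkState = "idle" | "listening" | "stopping";

class PushToTalkController {
  private recognizer: RecognizerLike;
  private onTranscript: (text: string) => void;
  private state: TalkState = "idle";
  private finalParts: string[] = [];

  constructor(recognizer: RecognizerLike, onTranscript: (text: string) => void) {
    this.recognizer = recognizer;
    this.onTranscript = onTranscript;
    recognizer.continuous = true;      // keep listening while the button is held
    recognizer.interimResults = false; // only collect final results
    recognizer.onresult = (event) => this.collect(event);
    recognizer.onend = () => this.finish();
  }

  // Call when the talk button goes down. Ignored unless the controller is idle,
  // so key-repeat or a press during shutdown never calls start() twice.
  press(): void {
    if (this.state !== "idle") return;
    this.state = "listening";
    this.finalParts = [];
    this.recognizer.start();
  }

  // Call when the talk button is released. The transcript is delivered later,
  // once the recognizer reports that it has ended.
  release(): void {
    if (this.state !== "listening") return;
    this.state = "stopping"; // set before stop() in case onend fires synchronously
    this.recognizer.stop();
  }

  private collect(event: RecognitionEventLike): void {
    for (let i = event.resultIndex; i < event.results.length; i++) {
      const result = event.results[i];
      if (result.isFinal && result.length > 0) {
        this.finalParts.push(result[0].transcript.trim());
      }
    }
  }

  private finish(): void {
    this.state = "idle";
    const text = this.finalParts.join(" ").trim();
    this.finalParts = [];
    if (text.length > 0) this.onTranscript(text);
  }
}

// Possible browser wiring (assumes a <button> element named `button`):
//   const Recognition = window.SpeechRecognition ?? window.webkitSpeechRecognition;
//   const ptt = new PushToTalkController(new Recognition(), (text) => {
//     speechSynthesis.speak(new SpeechSynthesisUtterance(`You said: ${text}`));
//   });
//   button.addEventListener("pointerdown", () => ptt.press());
//   button.addEventListener("pointerup", () => ptt.release());
```

Waiting for `onend` before delivering the transcript is a design choice: final results can still arrive after `stop()` is called, so reading the text at release time could lose the last phrase. In a browser, the first `start()` call is typically also where the microphone permission prompt appears, so it helps to explain the request in the UI before the user first presses the button.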

Relevant Sessions:

- Capture HDR content with ScreenCaptureKit

- Optimize for the spatial web

- Add personality to your app through UX writing

By combining these techniques, you can build a functional and user-friendly push-to-talk app. If you need more specific details or code snippets, refer to the mentioned sessions for in-depth explanations and examples.

What’s new in DockKit

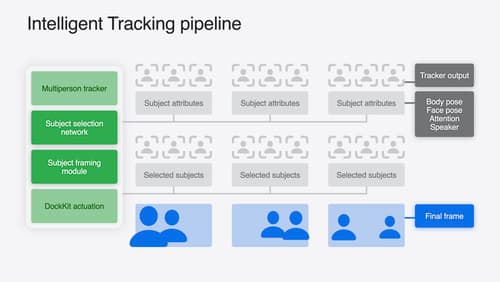

Discover how intelligent tracking in DockKit allows for smoother transitions between subjects. We will cover what intelligent tracking is, how it uses an ML model to select and track subjects, and how you can use it in your app.

Design great visionOS apps

Find out how to create compelling spatial computing apps by embracing immersion, designing for eyes and hands, and taking advantage of depth, scale, and space. We’ll share several examples of great visionOS apps and explore how their designers approached creating new experiences for the platform.

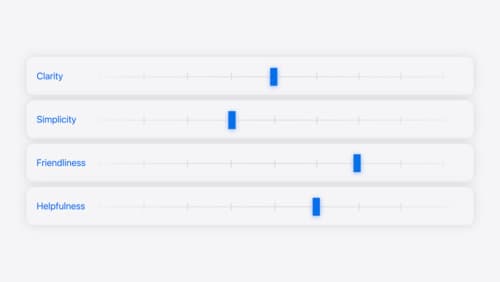

Add personality to your app through UX writing

Every app has a personality that comes across in what you say — and how you say it. Learn how to define your app’s voice and modulate your tone for every situation, from celebratory notifications to error messages. We’ll help you get specific about your app’s purpose and audience and practice writing in different tones.