How do I create a Combine publisher?

Asked on 2024-08-02


The quickest way to create a Combine publisher is to use one of the built-in publishers that conform to the framework's Publisher protocol. Here is a basic example that creates a simple publisher emitting a sequence of integers:

    import Combine

    // Create a simple publisher that emits a sequence of integers
    let myPublisher = [1, 2, 3, 4, 5].publisher

    // Subscribe to the publisher
    let subscription = myPublisher.sink { value in
        print("Received value: \(value)")
    }

In this example, the array's publisher property creates a publisher that emits each element in order and then finishes. The sink method subscribes to the publisher, handles each emitted value, and returns an AnyCancellable that must be kept alive for as long as the subscription should last.
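Arrays are not the only ready-made source. As a minimal sketch, the snippet below also uses `Just` (a single value) and `PassthroughSubject` (values you send by hand), both of which ship with Combine. The `collect` helper and the variable names are hypothetical and exist only for illustration.

```swift
import Combine

// Hypothetical helper: gathers the values of a publisher that emits
// synchronously on subscription (such as a sequence publisher or Just).
func collect<P: Publisher>(_ publisher: P) -> [P.Output] where P.Failure == Never {
    var received: [P.Output] = []
    let cancellable = publisher.sink { received.append($0) }
    cancellable.cancel() // all values have already been delivered at this point
    return received
}

let fromArray = collect([1, 2, 3].publisher) // [1, 2, 3]
let fromJust = collect(Just(42))             // [42]

// A subject is a publisher you feed imperatively with send(_:).
let subject = PassthroughSubject<String, Never>()
var messages: [String] = []
let subjectCancellable = subject.sink(
    receiveCompletion: { _ in messages.append("finished") },
    receiveValue: { messages.append($0) }
)
subject.send("first")
subject.send("second")
subject.send(completion: .finished)
```

A subject is often the simplest bridge from callback-based or imperative code into Combine, while `Just` and sequence publishers are handy for fixed values and tests.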

For more advanced use cases, you can create custom publishers by conforming to the Publisher protocol. Here is an example of a custom publisher that emits a single value after a delay:

    import Combine
    import Foundation

    struct DelayedPublisher: Publisher {
        typealias Output = String
        typealias Failure = Never

        let value: String
        let delay: TimeInterval

        func receive<S>(subscriber: S) where S: Subscriber, S.Failure == Failure, S.Input == Output {
            let subscription = DelayedSubscription(subscriber: subscriber, value: value, delay: delay)
            subscriber.receive(subscription: subscription)
        }

        private final class DelayedSubscription<S: Subscriber>: Subscription where S.Input == String, S.Failure == Never {
            private var subscriber: S?
            private let value: String
            private let delay: TimeInterval
            private var hasScheduled = false

            init(subscriber: S, value: String, delay: TimeInterval) {
                self.subscriber = subscriber
                self.value = value
                self.delay = delay
            }

            func request(_ demand: Subscribers.Demand) {
                // Start the timer only once, and only after the subscriber has
                // requested at least one value. (Not thread-safe; kept simple.)
                guard demand > .none, !hasScheduled else { return }
                hasScheduled = true
                DispatchQueue.main.asyncAfter(deadline: .now() + delay) { [weak self] in
                    // If cancel() ran in the meantime, subscriber is nil and nothing is sent.
                    guard let self = self, let subscriber = self.subscriber else { return }
                    _ = subscriber.receive(self.value)
                    subscriber.receive(completion: .finished)
                    self.subscriber = nil // release the subscriber after completion
                }
            }

            func cancel() {
                subscriber = nil
            }
        }
    }

    // Usage: keep the returned AnyCancellable alive, or the subscription is
    // cancelled before the value arrives.
    let delayedPublisher = DelayedPublisher(value: "Hello, Combine!", delay: 2.0)
    let delayedSubscription = delayedPublisher.sink { value in
        print("Received value: \(value)")
    }

In this custom publisher example, DelayedPublisher emits a string value after a specified delay. The DelayedSubscription class handles the delay and emits the value to the subscriber.
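Writing a custom publisher is rarely required for behavior like this. As a hedged alternative, the sketch below composes the built-in `Just` publisher with the `delay(for:scheduler:)` operator to get a single value after a delay. The function name `delayedValue` and its parameter labels are assumptions made for this example, not part of Combine.

```swift
import Combine
import Foundation

// Hypothetical helper: emits `value` once, after `delay`, on the given scheduler.
// Taking the scheduler as a parameter lets tests pass ImmediateScheduler.shared
// instead of waiting on a real queue.
func delayedValue<S: Scheduler>(
    _ value: String,
    after delay: S.SchedulerTimeType.Stride,
    on scheduler: S
) -> AnyPublisher<String, Never> {
    Just(value)
        .delay(for: delay, scheduler: scheduler)
        .eraseToAnyPublisher()
}

// Usage: delivers the string on the main queue roughly two seconds later,
// provided the cancellable is retained and the main run loop is running.
let delayedCancellable = delayedValue("Hello, Combine!", after: .seconds(2), on: DispatchQueue.main)
    .sink { value in
        print("Received value: \(value)")
    }
```

Composing operators this way leaves demand handling and cancellation to Combine, which is why it is usually preferred over a hand-written Subscription.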

For more background, you might want to check out the relevant sessions from WWDC. Note that "What’s new in Xcode 16" covers updates to the build process and debugging rather than Combine itself, so it is useful mainly as tooling context when working with Combine.

Here are some relevant sessions that might be of interest:

- What’s new in Xcode 16 - Adopting Swift 6 data-race safety guarantees

- Demystify explicitly built modules - Using modules

If you need more specific information or examples, feel free to ask!

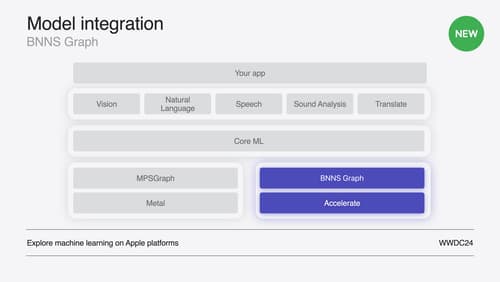
