How did this site get trained?

Asked on 2024-08-02


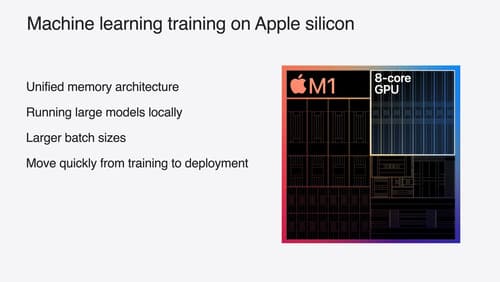

The training process for the model described in the session "Train your machine learning and AI models on Apple GPUs" involves several steps:

-

Model and Tokenizer Setup:

- The process begins by importing necessary libraries such as `torch` and setting up the random seeds for reproducibility.

- The model and tokenizer are downloaded and set up using the Transformers library from Hugging Face. In this case, the OpenLLaMA v2 model with 3 billion parameters is used as the base model.
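A minimal sketch of this setup step, assuming the Hugging Face Hub ID `openlm-research/open_llama_3b_v2` for the base model and an arbitrary seed of 42; neither value is stated in the answer above. The helper names are illustrative, and the `torch` and `transformers` imports are deferred so the functions can be defined without those packages installed.

```python
import random

# Assumed Hub ID for OpenLLaMA v2 3B; the answer above does not name it.
MODEL_ID = "openlm-research/open_llama_3b_v2"


def set_seeds(seed: int = 42) -> None:
    """Seed Python's RNG and, if PyTorch is installed, PyTorch's as well."""
    random.seed(seed)
    try:
        import torch
    except ImportError:
        return  # PyTorch not available; only the stdlib RNG is seeded
    torch.manual_seed(seed)


def load_model_and_tokenizer(model_id: str = MODEL_ID):
    """Download (or load from the local cache) the base model and tokenizer."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # use_fast=False is an assumption: the slow tokenizer is picked here
    # as a conservative default, not because the session specifies it.
    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    return model, tokenizer
```

Calling `set_seeds()` before `load_model_and_tokenizer()` keeps any later random initialization (for example, adapter weights) repeatable between runs.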

-

Data Selection and Preparation:

- The Tiny Shakespeare dataset from Andrej Karpathy is selected as the training input. This dataset consists of works of Shakespeare concatenated into a single file.

- The dataset is loaded into a dataset object, and a tokenizer is specified for the data.
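The answer does not say how the dataset object is built. One common approach for a single concatenated text file, sketched here as an assumption, is to tokenize the whole text and cut the token IDs into fixed-size blocks. The `make_blocks` helper and the character-level `toy_encode` stand-in are hypothetical; in practice the `encode` argument would be the model's tokenizer.

```python
from typing import Callable, List


def make_blocks(text: str,
                encode: Callable[[str], List[int]],
                block_size: int) -> List[List[int]]:
    """Tokenize `text` and split the IDs into full blocks of `block_size`.

    A trailing remainder shorter than `block_size` is dropped so that
    every training example has the same length.
    """
    ids = encode(text)
    n_full = len(ids) // block_size
    return [ids[i * block_size:(i + 1) * block_size] for i in range(n_full)]


def toy_encode(s: str) -> List[int]:
    """Stand-in tokenizer (one ID per character), for illustration only."""
    return [ord(c) for c in s]


blocks = make_blocks("To be, or not to be", toy_encode, block_size=4)
print(len(blocks), blocks[0])
```

Equal-length blocks mean the batches need no padding, which matters for tokenizers that define no padding token.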

-

Training Configuration:

- Training parameters such as batch size and the number of training epochs are set.

- A training class is used to form the training batches for the objects.
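As a rough illustration of this configuration step, the sketch below uses `TrainingArguments`, `DataCollatorForLanguageModeling`, and `Trainer` from Transformers. The output path and batch size are placeholders, the choice of collator is an assumption about which class forms the batches, and `build_trainer` is a hypothetical helper name.

```python
# Values marked "placeholder" are not given in the answer above.
TRAINING_CONFIG = {
    "output_dir": "out",                 # placeholder path
    "per_device_train_batch_size": 4,    # placeholder
    "num_train_epochs": 10,              # the answer reports ten epochs
}


def build_trainer(model, tokenizer, train_dataset):
    """Combine model, data, and training parameters into a Trainer."""
    from transformers import (
        DataCollatorForLanguageModeling,
        Trainer,
        TrainingArguments,
    )

    args = TrainingArguments(**TRAINING_CONFIG)
    # mlm=False selects causal language modeling: labels are copied from
    # the input IDs. This sketch assumes all examples have equal length,
    # so the collator never has to pad.
    collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)
    return Trainer(
        model=model,
        args=args,
        train_dataset=train_dataset,
        data_collator=collator,
    )
```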

-

Fine-Tuning:

- A fine-tuning adapter is attached to the model using the `peft` library with a LoRA configuration.

- The model is then sent to the computing device for training.
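The adapter step might look like the following sketch. The LoRA hyperparameters (`r`, `lora_alpha`, `lora_dropout`) and the `target_modules` list are illustrative assumptions, not values from the session, and `pick_device` and `attach_lora` are hypothetical helper names. On Apple silicon, the PyTorch device backed by Metal is named `"mps"`.

```python
def pick_device() -> str:
    """Return "mps" when PyTorch reports the Metal backend, else "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"
    return "mps" if torch.backends.mps.is_available() else "cpu"


def attach_lora(model):
    """Wrap `model` with a LoRA adapter and move it to the device."""
    from peft import LoraConfig, get_peft_model

    config = LoraConfig(
        r=8,                                  # assumed adapter rank
        lora_alpha=16,                        # assumed scaling factor
        lora_dropout=0.05,                    # assumed dropout
        target_modules=["q_proj", "v_proj"],  # assumed attention projections
        task_type="CAUSAL_LM",
    )
    peft_model = get_peft_model(model, config)
    return peft_model.to(pick_device())
```

Only the adapter weights are trainable after `get_peft_model`; the base model's weights stay frozen, which is what keeps fine-tuning a 3-billion-parameter model practical on a single machine.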

-

Training Execution:

- The training is kicked off using the trainer class, which processes the dataset over multiple epochs (in this case, ten epochs).
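To get a feel for how much work ten epochs represents, the number of optimizer steps can be estimated from the dataset size and batch size. The sketch below assumes a single device, no gradient accumulation, and that the final partial batch of each epoch is kept. The example count and batch size are hypothetical; only the ten epochs come from the answer above.

```python
import math


def total_optimizer_steps(num_examples: int, batch_size: int, epochs: int) -> int:
    """Estimate optimizer steps for a run (single device, no accumulation)."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * epochs


# Hypothetical run: 1,000 training blocks, batch size 4, ten epochs.
print(total_optimizer_steps(1000, 4, 10))
```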

-

Evaluation and Saving:

- After training, the model's output is evaluated to see the improvements.

- The fine-tuned model is saved, merging the adapter and base model into a single entity for easier use. The tokenizer is also stored with the model.
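A sketch of the merge-and-save step, assuming the fine-tuned model is a `peft` model (which provides `merge_and_unload()`) and that both the merged model and the tokenizer expose `save_pretrained()`, as Transformers objects do. The `save_merged` helper and the output directory name are hypothetical.

```python
def save_merged(peft_model, tokenizer, out_dir: str):
    """Fold the adapter into the base weights, then save model and tokenizer."""
    # merge_and_unload() returns the base model with the LoRA weights
    # merged in, so it can be loaded later without the peft library.
    merged = peft_model.merge_and_unload()
    merged.save_pretrained(out_dir)
    # Storing the tokenizer in the same directory keeps the saved
    # model self-contained.
    tokenizer.save_pretrained(out_dir)
    return merged
```

Loading the result later would then need only the directory path, for example `AutoModelForCausalLM.from_pretrained("my-finetuned-model")` with a directory name of your choosing.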

-

Deployment:

- For deployment, the preferred method is using Core ML, but in this case, the new ExecuTorch framework is used to deploy the model within the PyTorch ecosystem.

For more details, refer to the session "Train your machine learning and AI models on Apple GPUs" (07:22).

Relevant Sessions

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Deploy machine learning and AI models on-device with Core ML

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching which can be used together to create compelling and private on-device experiences.

Train your machine learning and AI models on Apple GPUs

Learn how to train your models on Apple Silicon with Metal for PyTorch, JAX and TensorFlow. Take advantage of new attention operations and quantization support for improved transformer model performance on your devices.