How should I encode audio buffers for transmission over the wire?

Asked on 2024-08-02

To encode audio buffers for transmission over the wire, you can follow the guidelines and techniques discussed in the sessions from WWDC 2024. Here are some relevant points:

Real-time Processing:

- When working with real-time audio processing, it's crucial to avoid memory allocations or multithreading during the execution phase to prevent context switches into kernel code, which can defeat real-time deadlines. This is discussed in the session Support real-time ML inference on the CPU.
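A common way to keep the audio thread free of allocations and locks while still handing samples to a networking thread is a preallocated single-producer/single-consumer ring buffer. The sketch below is plain C11 and is not taken from the session. `spsc_ring` and its functions are hypothetical names, and the sketch assumes `atomic_size_t` is lock-free on your target (you can check with `atomic_is_lock_free`).

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical single-producer/single-consumer ring of float samples.
   The audio thread is the only writer; one networking thread is the only reader. */
typedef struct {
    float *data;
    size_t capacity;            /* slot count; must be a power of two */
    atomic_size_t write_index;  /* advanced only by the producer */
    atomic_size_t read_index;   /* advanced only by the consumer */
} spsc_ring;

/* Setup phase, off the audio thread: the only function that allocates. */
bool spsc_ring_init(spsc_ring *ring, size_t capacity) {
    if (capacity == 0 || (capacity & (capacity - 1)) != 0) return false;
    ring->data = calloc(capacity, sizeof(float));
    if (ring->data == NULL) return false;
    ring->capacity = capacity;
    atomic_init(&ring->write_index, 0);
    atomic_init(&ring->read_index, 0);
    return true;
}

void spsc_ring_destroy(spsc_ring *ring) {
    free(ring->data);
    ring->data = NULL;
}

/* Audio thread: copies up to count samples without allocating or locking.
   Returns how many were written; fewer than count means the ring was full. */
size_t spsc_ring_push(spsc_ring *ring, const float *samples, size_t count) {
    assert(ring->data != NULL);  /* ring must be initialized */
    size_t w = atomic_load_explicit(&ring->write_index, memory_order_relaxed);
    size_t r = atomic_load_explicit(&ring->read_index, memory_order_acquire);
    size_t space = ring->capacity - (w - r);  /* unsigned math tolerates index wraparound */
    if (count > space) count = space;
    for (size_t i = 0; i < count; i++)
        ring->data[(w + i) & (ring->capacity - 1)] = samples[i];
    /* release: the samples become visible before the new index does */
    atomic_store_explicit(&ring->write_index, w + count, memory_order_release);
    return count;
}

/* Networking thread: drains up to count samples into out; returns how many. */
size_t spsc_ring_pop(spsc_ring *ring, float *out, size_t count) {
    size_t r = atomic_load_explicit(&ring->read_index, memory_order_relaxed);
    size_t w = atomic_load_explicit(&ring->write_index, memory_order_acquire);
    size_t available = w - r;
    if (count > available) count = available;
    for (size_t i = 0; i < count; i++)
        out[i] = ring->data[(r + i) & (ring->capacity - 1)];
    /* release: the slots are free for reuse only after they have been read */
    atomic_store_explicit(&ring->read_index, r + count, memory_order_release);
    return count;
}
```

The audio callback calls `spsc_ring_push`, and a separate networking thread calls `spsc_ring_pop` and encodes what it drains. Only `spsc_ring_init` allocates, so the real-time path never enters the allocator.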

Audio Unit Configuration:

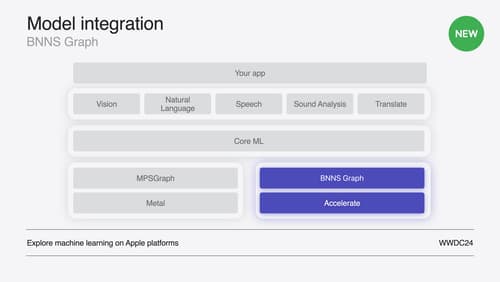

- You can create an audio unit project that adopts BNNS Graph for processing. Xcode provides a template for creating an audio unit extension app. This is useful for tasks such as bitcrushing or quantizing audio to give a distorted effect. More details can be found in the session Support real-time ML inference on the CPU.
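The session runs its bitcrusher as a BNNSGraph model. As a plain-C illustration of the same quantization arithmetic (not the session's code and not a BNNS API), the hypothetical `bitcrush_in_place` below rounds each sample to one of 2^bits levels. It works through a pointer to the caller's buffer, so it never allocates.

```c
#include <assert.h>
#include <stddef.h>

/* Quantizes each sample in place to one of 2^bits evenly spaced levels
   spanning [-1, 1]. Works on the caller's buffer through a pointer and
   performs no allocation. */
void bitcrush_in_place(float *samples, size_t count, unsigned bits) {
    assert(bits >= 1 && bits <= 24);  /* up to 2^24 levels are exact in a float */
    const float steps = (float)((1u << bits) - 1u);  /* intervals between levels */
    for (size_t i = 0; i < count; i++) {
        float x = samples[i];
        if (x > 1.0f) x = 1.0f;    /* clamp out-of-range input */
        if (x < -1.0f) x = -1.0f;
        float scaled = (x + 1.0f) * 0.5f * steps;         /* map [-1, 1] to [0, steps] */
        unsigned level = (unsigned)(scaled + 0.5f);       /* round to nearest; scaled >= 0 */
        samples[i] = (float)level / steps * 2.0f - 1.0f;  /* map back to [-1, 1] */
    }
}
```

For example, with `bits = 2` every sample lands on one of -1, -1/3, 1/3, or 1.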

Handling Audio Buffers:

- When working directly with audio buffers, configure the graph context so that its arguments are pointers. The graph then reads from and writes to your audio buffers directly, without extra memory allocation or copying, which is important for maintaining real-time performance. This is covered in the session Support real-time ML inference on the CPU.
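None of these sessions defines a wire format. One simple, common choice is uncompressed 16-bit PCM in an explicit byte order. The sketch below assumes float samples in [-1, 1] and little-endian packing; `pcm16le_encode` and `pcm16le_decode` are hypothetical helpers. In the same spirit as the pointer-based advice above, both take caller-owned buffers and never allocate.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Encodes float samples in [-1, 1] as signed 16-bit little-endian PCM into a
   caller-owned byte buffer. Returns bytes written, or 0 if out is too small. */
size_t pcm16le_encode(const float *in, size_t count, uint8_t *out, size_t out_capacity) {
    assert(in != NULL && out != NULL);
    if (out_capacity < count * 2) return 0;
    for (size_t i = 0; i < count; i++) {
        float x = in[i];
        if (x > 1.0f) x = 1.0f;    /* clamp to avoid integer overflow */
        if (x < -1.0f) x = -1.0f;
        float scaled = x * 32767.0f;
        /* round half away from zero, then convert */
        int32_t s = (int32_t)(scaled >= 0.0f ? scaled + 0.5f : scaled - 0.5f);
        uint16_t u = (uint16_t)s;  /* two's complement bit pattern, well-defined */
        out[2 * i]     = (uint8_t)(u & 0xFF);  /* low byte first */
        out[2 * i + 1] = (uint8_t)(u >> 8);
    }
    return count * 2;
}

/* Decodes signed 16-bit little-endian PCM back to floats. Returns samples read. */
size_t pcm16le_decode(const uint8_t *in, size_t byte_count, float *out, size_t out_capacity) {
    size_t count = byte_count / 2;
    if (count > out_capacity) count = out_capacity;
    for (size_t i = 0; i < count; i++) {
        uint32_t u = (uint32_t)in[2 * i] | ((uint32_t)in[2 * i + 1] << 8);
        int32_t s = u >= 0x8000u ? (int32_t)u - 0x10000 : (int32_t)u;  /* sign-extend */
        out[i] = (float)s / 32767.0f;
    }
    return count;
}
```

Fixing the byte order in code, rather than sending the host's in-memory layout, lets the receiver decode correctly regardless of its own endianness. A compressed codec would reduce bandwidth at the cost of CPU time and latency.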

Streaming and Decoding:

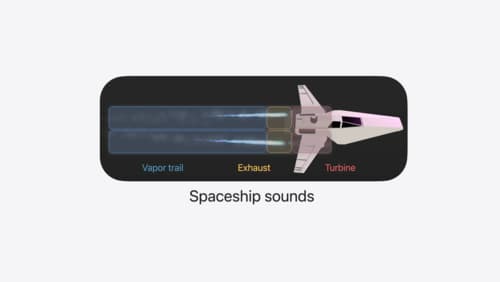

- For applications that stream audio, you can set the audio file resource's loading strategy to stream, which decodes the audio data incrementally during playback instead of preloading it all. This reduces memory usage but can add latency. This is discussed in the session Enhance your spatial computing app with RealityKit audio.
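RealityKit's streaming strategy decodes a file incrementally. A network receiver similarly has to decode each packet as it arrives, which requires some framing. The sketch below is not a RealityKit API: it assumes a hypothetical 8-byte header (sequence number, frame count, channel count, format code) written in network byte order, so the receiver can parse each packet on its own and detect lost packets.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical 8-byte header preceding each audio payload on the wire. */
typedef struct {
    uint32_t sequence;     /* increments by one per packet; gaps reveal loss */
    uint16_t frame_count;  /* audio frames in this packet's payload */
    uint8_t  channels;
    uint8_t  format;       /* hypothetical code, e.g. 1 = PCM16 little-endian */
} audio_packet_header;

enum { AUDIO_HEADER_SIZE = 8 };

/* Writes the header in network (big-endian) byte order. */
void audio_header_write(const audio_packet_header *h, uint8_t out[AUDIO_HEADER_SIZE]) {
    assert(h != NULL && out != NULL);
    out[0] = (uint8_t)(h->sequence >> 24);
    out[1] = (uint8_t)(h->sequence >> 16);
    out[2] = (uint8_t)(h->sequence >> 8);
    out[3] = (uint8_t)(h->sequence);
    out[4] = (uint8_t)(h->frame_count >> 8);
    out[5] = (uint8_t)(h->frame_count);
    out[6] = h->channels;
    out[7] = h->format;
}

/* Parses a header; returns 0 on success, -1 if the buffer is too short. */
int audio_header_read(const uint8_t *in, size_t len, audio_packet_header *h) {
    if (len < AUDIO_HEADER_SIZE) return -1;
    h->sequence = ((uint32_t)in[0] << 24) | ((uint32_t)in[1] << 16) |
                  ((uint32_t)in[2] << 8)  |  (uint32_t)in[3];
    h->frame_count = (uint16_t)(((uint32_t)in[4] << 8) | in[5]);
    h->channels = in[6];
    h->format = in[7];
    return 0;
}

/* Packets missing between the last sequence seen and this one. Assumes
   in-order delivery; unsigned subtraction handles 32-bit counter wraparound. */
uint32_t audio_packets_lost(uint32_t last_seq, uint32_t new_seq) {
    return new_seq - last_seq - 1u;
}
```

The receiver reads the header, decodes `frame_count * channels` samples from the payload that follows, and can conceal or skip audio when `audio_packets_lost` reports a gap.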

Recording and Saving Audio:

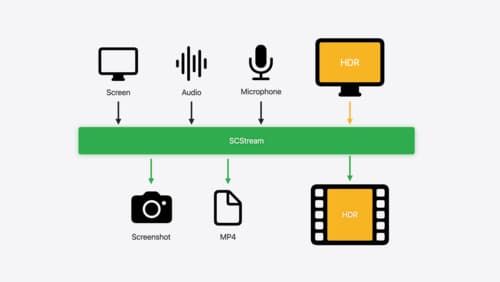

- If you need to record and save audio, you can use the new SCRecordingOutput API, which you add to an SCStream to configure the output file location and file type and to receive recording events through a delegate. This is detailed in the session Capture HDR content with ScreenCaptureKit.
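`SCRecordingOutput` writes its own files, so you don't format them yourself. If you instead want to save 16-bit PCM that you received over the wire, a minimal sketch is to prefix the samples with a canonical 44-byte WAV header. `wav_header_pcm16` is a hypothetical helper, and it assumes the payload size is known before the header is written.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

static void put_le32(uint8_t *p, uint32_t v) {
    p[0] = (uint8_t)v;         p[1] = (uint8_t)(v >> 8);
    p[2] = (uint8_t)(v >> 16); p[3] = (uint8_t)(v >> 24);
}

static void put_le16(uint8_t *p, uint16_t v) {
    p[0] = (uint8_t)v; p[1] = (uint8_t)(v >> 8);
}

/* Fills a canonical 44-byte RIFF/WAVE header for 16-bit integer PCM.
   data_bytes is the size of the PCM payload that follows the header. */
void wav_header_pcm16(uint8_t out[44], uint32_t sample_rate, uint16_t channels,
                      uint32_t data_bytes) {
    assert(channels > 0);
    const uint16_t bits = 16;
    const uint16_t block_align = (uint16_t)(channels * bits / 8);  /* bytes per frame */
    memcpy(out, "RIFF", 4);
    put_le32(out + 4, 36u + data_bytes);            /* file size minus first 8 bytes */
    memcpy(out + 8, "WAVE", 4);
    memcpy(out + 12, "fmt ", 4);
    put_le32(out + 16, 16);                         /* fmt chunk size for PCM */
    put_le16(out + 20, 1);                          /* format 1 = integer PCM */
    put_le16(out + 22, channels);
    put_le32(out + 24, sample_rate);
    put_le32(out + 28, sample_rate * block_align);  /* byte rate */
    put_le16(out + 32, block_align);
    put_le16(out + 34, bits);
    memcpy(out + 36, "data", 4);
    put_le32(out + 40, data_bytes);
}
```

If the final length isn't known while recording, one option is to write placeholder sizes first and patch bytes 4 to 7 and 40 to 43 once recording ends.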

Relevant Sessions

- Support real-time ML inference on the CPU

- Enhance your spatial computing app with RealityKit audio

- Capture HDR content with ScreenCaptureKit

These sessions cover handling audio buffers, real-time processing, and recording audio, all of which are relevant when encoding audio buffers for transmission.

Capture HDR content with ScreenCaptureKit

Learn how to capture high dynamic colors using ScreenCaptureKit, and explore new features like HDR support, microphone capture, and straight-to-file recording.

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU and share how it provides real-time guarantees such as no runtime memory allocation and single-threaded running for audio or signal processing models.

Enhance your spatial computing app with RealityKit audio

Elevate your spatial computing experience using RealityKit audio. Discover how spatial audio can make your 3D immersive experiences come to life. From ambient audio, reverb, to real-time procedural audio that can add character to your 3D content, learn how RealityKit audio APIs can help make your app more engaging.