How to crop a video file given a start and end timestamp?

Asked on 2024-08-02

To crop a video file to the segment between a start and end timestamp (that is, to trim it in time), you can use AVAssetExportSession from the AVFoundation framework on iOS. Here’s a high-level overview of how you can achieve this:

- Create an AVAsset: Load your video file into an AVAsset.
- Create an AVAssetExportSession: Use this to export the cropped video.
- Set the Time Range: Define the start and end times for the crop.
- Export the Video: Perform the export operation.

Here’s a sample code snippet to illustrate these steps:

    import AVFoundation

    // Load the video file into an AVAsset (AVURLAsset is the URL-backed subclass)
    let videoURL = URL(fileURLWithPath: "path/to/your/video.mp4")
    let asset = AVURLAsset(url: videoURL)

    // Create an AVAssetExportSession
    guard let exportSession = AVAssetExportSession(asset: asset, presetName: AVAssetExportPresetHighestQuality) else {
        fatalError("Failed to create export session")
    }

    // Set the output URL for the cropped video
    let outputURL = URL(fileURLWithPath: "path/to/your/cropped_video.mp4")
    // The export fails if a file already exists at the output URL, so remove any old copy first
    try? FileManager.default.removeItem(at: outputURL)
    exportSession.outputURL = outputURL
    exportSession.outputFileType = .mp4

    // Define the time range for cropping
    let startTime = CMTime(seconds: 10, preferredTimescale: 600) // Start at 10 seconds
    let endTime = CMTime(seconds: 20, preferredTimescale: 600) // End at 20 seconds
    let timeRange = CMTimeRange(start: startTime, end: endTime)
    exportSession.timeRange = timeRange

    // Perform the export; the closure runs once the export has finished
    exportSession.exportAsynchronously {
        switch exportSession.status {
        case .completed:
            print("Export completed successfully")
        case .failed:
            if let error = exportSession.error {
                print("Export failed with error: \(error.localizedDescription)")
            }
        case .cancelled:
            print("Export cancelled")
        default:
            break
        }
    }

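This code trims the video to the segment between the specified start and end times and saves it to the specified output URL. The export runs asynchronously, so the result is only known inside the completion handler.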
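If the start and end timestamps arrive as strings such as "00:01:30" rather than as numbers, they need converting to seconds before they can be turned into CMTime values. The following is a minimal sketch of one way to do that in plain Swift. The helper names `seconds(fromTimestamp:)` and `trimRange(from:to:)` are hypothetical and not part of AVFoundation, and the accepted formats ("SS", "MM:SS", "HH:MM:SS") are an assumption about the input.

```swift
import Foundation

/// Hypothetical helper (not an AVFoundation API): parses "SS", "MM:SS", or
/// "HH:MM:SS" into seconds. Only the last field may carry a fraction.
/// Returns nil for malformed input.
func seconds(fromTimestamp timestamp: String) -> Double? {
    let parts = timestamp.split(separator: ":", omittingEmptySubsequences: false)
    guard (1...3).contains(parts.count) else { return nil }

    var total = 0.0
    for (index, part) in parts.enumerated() {
        let isLast = index == parts.count - 1
        let value: Double
        if isLast {
            // Seconds field: fractional values are allowed.
            guard let parsed = Double(part) else { return nil }
            value = parsed
        } else {
            // Hours and minutes fields: whole numbers only.
            guard let parsed = Int(part) else { return nil }
            value = Double(parsed)
        }
        guard value.isFinite, value >= 0 else { return nil }
        // Every field except the leading one must stay below 60.
        if index > 0 && value >= 60 { return nil }
        total = total * 60 + value
    }
    return total
}

/// Hypothetical helper: converts two timestamp strings into a (start, end)
/// pair in seconds, rejecting empty or reversed ranges.
func trimRange(from start: String, to end: String) -> (start: Double, end: Double)? {
    guard let startSeconds = seconds(fromTimestamp: start),
          let endSeconds = seconds(fromTimestamp: end),
          startSeconds < endSeconds else { return nil }
    return (startSeconds, endSeconds)
}
```

The resulting values can then be passed to `CMTime(seconds:preferredTimescale:)` in place of the hard-coded 10 and 20 in the snippet above.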

For more detailed information on working with AVFoundation, you can refer to the session "Build compelling spatial photo and video experiences" from WWDC 2024.
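For reuse, the export steps from the snippet above could be wrapped in a function that validates the time range up front and reports the outcome through a Result instead of printing. This is only a sketch under assumptions: the `trimVideo` function and the `TrimError` type are hypothetical names introduced here for illustration, and the preset and file type simply mirror the ones used earlier.

```swift
import AVFoundation

/// Hypothetical error type for this sketch.
enum TrimError: Error {
    case invalidRange
    case cannotCreateExportSession
    case exportFailed(Error?)
}

/// Hypothetical wrapper around the AVAssetExportSession steps shown earlier.
/// Calls `completion` with the output URL on success.
func trimVideo(at sourceURL: URL,
               to outputURL: URL,
               startSeconds: Double,
               endSeconds: Double,
               completion: @escaping (Result<URL, TrimError>) -> Void) {
    // Reject negative, empty, or reversed ranges before touching the file.
    guard startSeconds >= 0, endSeconds > startSeconds else {
        completion(.failure(.invalidRange))
        return
    }

    let asset = AVURLAsset(url: sourceURL)
    guard let session = AVAssetExportSession(asset: asset,
                                             presetName: AVAssetExportPresetHighestQuality) else {
        completion(.failure(.cannotCreateExportSession))
        return
    }

    // The export fails if a file already exists at the destination.
    try? FileManager.default.removeItem(at: outputURL)

    session.outputURL = outputURL
    session.outputFileType = .mp4
    session.timeRange = CMTimeRange(
        start: CMTime(seconds: startSeconds, preferredTimescale: 600),
        end: CMTime(seconds: endSeconds, preferredTimescale: 600))

    session.exportAsynchronously {
        if session.status == .completed {
            completion(.success(outputURL))
        } else {
            // Covers both .failed and .cancelled; session.error may be nil.
            completion(.failure(.exportFailed(session.error)))
        }
    }
}
```

A caller would pass the source and destination URLs plus the start and end times in seconds, then switch over the Result in the completion closure. The completion handler is not guaranteed to run on the main thread, so any UI updates would need to be dispatched there.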

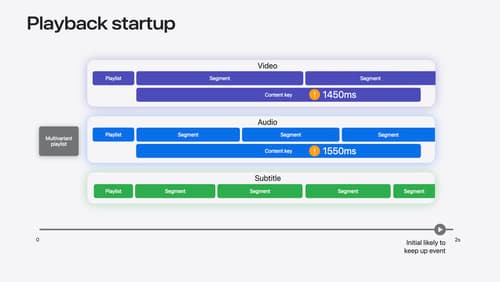

Discover media performance metrics in AVFoundation

Discover how you can monitor, analyze, and improve user experience with the new media performance APIs. Explore how to monitor AVPlayer performance for HLS assets using different AVMetricEvents, and learn how to use these metrics to understand and triage player performance issues.

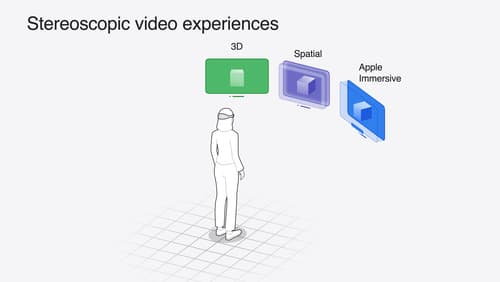

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.

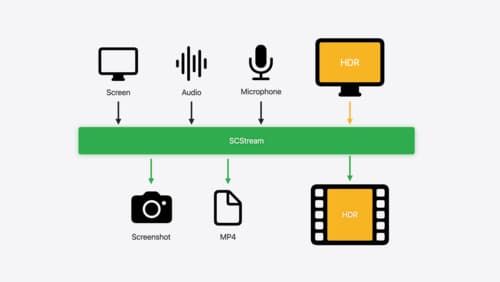

Capture HDR content with ScreenCaptureKit

Learn how to capture high dynamic colors using ScreenCaptureKit, and explore new features like HDR support, microphone capture, and straight-to-file recording.