AVAudioSession

Asked on 2024-08-03

The context provided does not mention AVAudioSession directly. However, it does cover various aspects of audio processing and spatial audio in the session titled "Enhance your spatial computing app with RealityKit audio" from WWDC 2024.

For more detailed information on audio processing and spatial audio, you might want to check out the chapters of that session.

If you have specific questions about AVAudioSession, you might want to look for sessions or documentation specifically covering AVFoundation or audio session management in iOS.

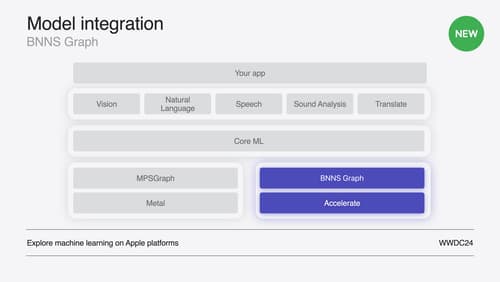

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU and share how it provides real-time guarantees such as no runtime memory allocation and single-threaded running for audio or signal processing models.

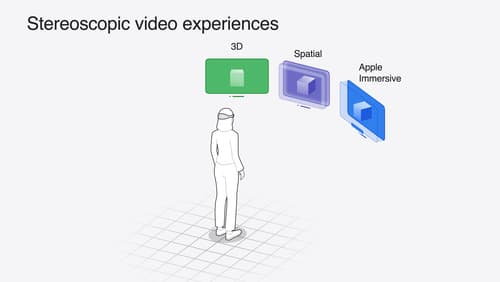

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.

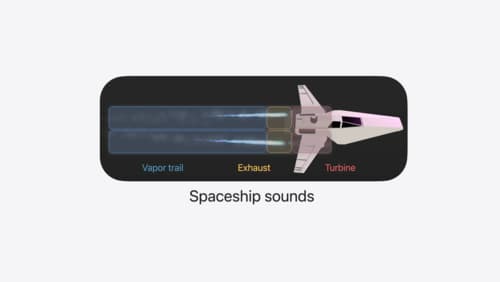

Enhance your spatial computing app with RealityKit audio

Elevate your spatial computing experience using RealityKit audio. Discover how spatial audio can make your 3D immersive experiences come to life. From ambient audio, reverb, to real-time procedural audio that can add character to your 3D content, learn how RealityKit audio APIs can help make your app more engaging.