What LLMs are you using for this experience?

Asked on 2024-07-30

The provided WWDC sessions do not explicitly name the specific large language models (LLMs) Apple uses for its experiences. If you have other questions about the content presented at WWDC, feel free to ask! The sessions referenced in the context are:

- Design interactive experiences for visionOS

- Enhance the immersion of media viewing in custom environments

- Build compelling spatial photo and video experiences

- Explore object tracking for visionOS

- Enhance your spatial computing app with RealityKit audio

- What’s new in Create ML

If you need more details on any of these sessions, let me know!

Enhance the immersion of media viewing in custom environments

Extend your media viewing experience using Reality Composer Pro components like Docking Region, Reverb, and Virtual Environment Probe. Find out how to further enhance immersion using Reflections, Tint Surroundings Effect, SharePlay, and the Immersive Environment Picker.

Build compelling spatial photo and video experiences

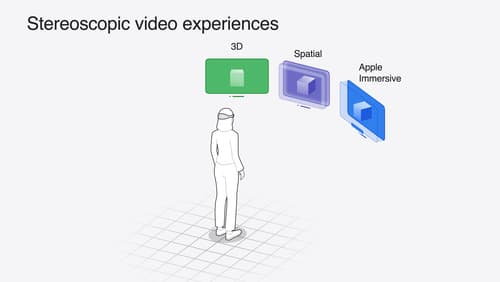

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.