what is observation tracking

Asked on 2024-07-30


In the WWDC sessions found for this query, observation tracking refers to tracking and analyzing subjects or objects within a scene using machine learning. Key points from those sessions:

-

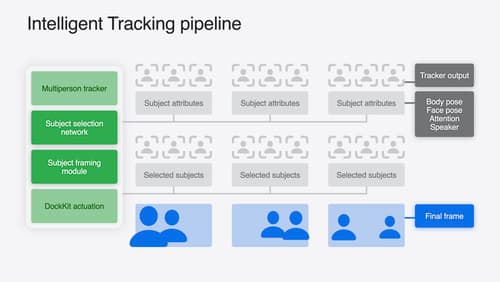

Intelligent Subject Tracking:

- Session: What’s new in DockKit

- Description: Intelligent subject tracking uses advanced algorithms and machine learning to analyze a scene in real-time. It identifies main subjects, such as individual faces and objects, and determines the most relevant person to track based on factors like movement, speech, and proximity to the camera. This allows for smooth and seamless tracking without manual intervention.

- Tracking Summary:

  - Session: What’s new in DockKit

  - Description: The tracking state includes the time at which the state was captured and a list of tracked subjects, each of which is either a person or an object. Every tracked subject has an identifier, a face rectangle, and a saliency rank. For people, additional attributes are provided: a speaking confidence and a looking-at-camera confidence.

- Object Tracking in Create ML:

  - Session: What’s new in Create ML

  - Description: Create ML introduces a new template for building machine learning models that track the spatial location and orientation of objects. The template uses a 3D asset of the object to generate training data, and the trained model can then track that object in visionOS apps.

- Known Object Tracking in visionOS:

  - Session: Introducing enterprise APIs for visionOS

  - Description: visionOS 2 includes enhanced known object tracking, which lets apps detect and track specific reference objects within their viewing area. The feature can be tuned and optimized for different use cases, such as tracking tools and parts in a complex repair environment.

- Object Tracking with ARKit:

  - Session: Create enhanced spatial computing experiences with ARKit

  - Description: ARKit can track the position and orientation of objects so that virtual content can be anchored to them. This involves creating reference objects from 3D models and using them to track physical items in real time.
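To make the DockKit tracking state described above more concrete, here is a minimal Swift sketch of that data shape and one way an app might pick a subject from it. All type and function names here (`TrackedSubject`, `TrackingSnapshot`, `primarySubject`) are hypothetical stand-ins, not the DockKit API. The 0.8 speaking threshold and the idea that a lower saliency rank means a more salient subject are assumptions made for illustration.

```swift
import Foundation

// Hypothetical stand-ins for the data described above; NOT the DockKit types.
struct Rect { var x, y, width, height: Double }

// Extra attributes that, per the session summary, exist only for people.
struct PersonAttributes {
    var speakingConfidence: Double          // assumed range 0...1
    var lookingAtCameraConfidence: Double   // assumed range 0...1
}

enum SubjectKind {
    case person(PersonAttributes)
    case object
}

struct TrackedSubject {
    var identifier: UUID
    var rect: Rect
    var saliencyRank: Int                   // assumption: lower rank = more salient
    var kind: SubjectKind
}

struct TrackingSnapshot {
    var time: Date                          // when the state was captured
    var subjects: [TrackedSubject]
}

/// Returns the person with the highest speaking confidence at or above the
/// threshold; if nobody qualifies, falls back to the most salient subject.
func primarySubject(in snapshot: TrackingSnapshot,
                    speakingThreshold: Double = 0.8) -> TrackedSubject? {
    let speakers = snapshot.subjects.compactMap { subject -> (TrackedSubject, Double)? in
        guard case .person(let attributes) = subject.kind,
              attributes.speakingConfidence >= speakingThreshold else { return nil }
        return (subject, attributes.speakingConfidence)
    }
    if let best = speakers.max(by: { $0.1 < $1.1 }) {
        return best.0
    }
    return snapshot.subjects.min(by: { $0.saliencyRank < $1.saliencyRank })
}

// Usage: one speaking person and one more salient object.
let frame = Rect(x: 0.4, y: 0.3, width: 0.2, height: 0.2)
let alice = TrackedSubject(
    identifier: UUID(), rect: frame, saliencyRank: 2,
    kind: .person(PersonAttributes(speakingConfidence: 0.9,
                                   lookingAtCameraConfidence: 0.4)))
let ball = TrackedSubject(identifier: UUID(), rect: frame,
                          saliencyRank: 1, kind: .object)

let snapshot = TrackingSnapshot(time: Date(), subjects: [ball, alice])
let chosen = primarySubject(in: snapshot)   // the speaking person is preferred
```

Preferring a confident speaker over raw saliency is only one possible policy. The session describes the system weighing movement, speech, and proximity together, so a real app would more likely rely on the framework's own selection.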

For more detail, see the sessions named above.

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.

What’s new in DockKit

Discover how intelligent tracking in DockKit allows for smoother transitions between subjects. We will cover what intelligent tracking is, how it uses an ML model to select and track subjects, and how you can use it in your app.