How do I do AR navigation?

Asked on 2024-08-05

To implement AR navigation, you can leverage several tools and APIs presented at WWDC. Here are some key sessions and concepts that can help you get started:

- ARKit and RealityKit:

  - ARKit: Provides robust world tracking, plane detection, and object tracking capabilities. You can use ARKit to create anchors that represent positions and orientations in 3D space.

  - RealityKit: Offers APIs to create and manage 3D content, including spatial tracking and hand tracking.

- Sessions to Watch:

  - Create enhanced spatial computing experiences with ARKit: This session covers improvements in scene understanding, room tracking, plane detection, and object tracking. It also explains how ARKit's world tracking has become more robust for various lighting conditions.

  - Discover RealityKit APIs for iOS, macOS and visionOS: This session introduces the new spatial tracking API, which simplifies the task of adding custom hand tracking to your app. It also covers how to use world tracking with a camera feed as the background.

  - Explore object tracking for visionOS: This session demonstrates how to use object tracking to get the position and orientation of real-world items and augment them with virtual content.

- Key Concepts:

  - Anchors: Use ARKit to create anchors that represent positions and orientations in 3D space. These anchors can be used to position virtual content relative to real-world objects.

  - Hand Tracking: RealityKit's new spatial tracking API allows you to track hand movements and use them as input for your AR navigation experience.

  - Object Tracking: Use ARKit's object tracking capabilities to detect and track real-world objects, which can then be augmented with virtual content.
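As a minimal sketch of the anchor idea above, the following Swift code creates one named anchor per route point using the iOS `ARSession` API. The helper names `makeTransform(at:)` and `addWaypointAnchors(at:to:)`, the `waypoint-N` naming scheme, and the sample route in the comments are assumptions made for illustration; none of them come from the sessions listed here. On visionOS, ARKit exposes a different, provider-based API, so this code would need to be adapted there.

```swift
import ARKit
import simd

/// Builds a transform that places an anchor at `position` (meters, in the
/// session's world coordinate space) with no rotation applied.
func makeTransform(at position: SIMD3<Float>) -> simd_float4x4 {
    var transform = matrix_identity_float4x4
    // The fourth column of a 4x4 transform holds the translation.
    transform.columns.3 = SIMD4<Float>(position.x, position.y, position.z, 1)
    return transform
}

/// Adds one named ARAnchor per route point to the session and returns the
/// anchors in route order. (Hypothetical helper, not an ARKit API.)
func addWaypointAnchors(at positions: [SIMD3<Float>],
                        to session: ARSession) -> [ARAnchor] {
    return positions.enumerated().map { index, position in
        let anchor = ARAnchor(name: "waypoint-\(index)",
                              transform: makeTransform(at: position))
        session.add(anchor: anchor)
        return anchor
    }
}

// Example call, assuming `session` is an ARSession that is already running
// an ARWorldTrackingConfiguration. The route is made up: three points spaced
// 2 m apart along -Z, the direction the camera faced when the session started
// (with the default gravity-based world alignment).
//
// let route = [SIMD3<Float>(0, 0, -2), SIMD3<Float>(0, 0, -4), SIMD3<Float>(0, 0, -6)]
// let anchors = addWaypointAnchors(at: route, to: session)
```

Once added, the anchors are reported through the session delegate's `session(_:didAdd:)` callback, which is one place to attach a marker or arrow entity to each waypoint. In a real navigation app, the route points would come from your own routing or mapping data rather than hard-coded offsets.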

By combining these tools, you can build an AR navigation experience: world tracking and anchors fix route markers to real-world positions, RealityKit renders the virtual content, and hand or object tracking adds input and context.

Bring your iOS or iPadOS game to visionOS

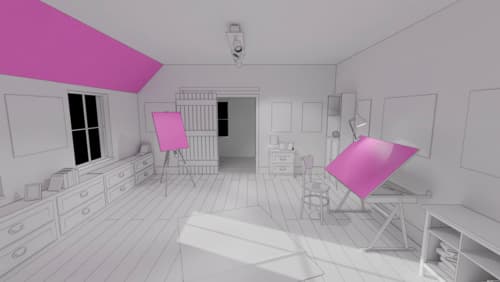

Discover how to transform your iOS or iPadOS game into a uniquely visionOS experience. Increase the immersion (and fun factor!) with a 3D frame or an immersive background. And invite players further into your world by adding depth to the window with stereoscopy or head tracking.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.

Build a spatial drawing app with RealityKit

Harness the power of RealityKit through the process of building a spatial drawing app. As you create an eye-catching spatial experience that integrates RealityKit with ARKit and SwiftUI, you’ll explore how resources work in RealityKit and how to use features like low-level mesh and texture APIs to achieve fast updates of the users’ brush strokes.