accelerate matrix

Asked on 2024-08-06


The term "accelerate matrix" likely refers to the use of Apple's Accelerate framework, which includes optimized mathematical functions for high-performance computing tasks, including matrix operations. Here are some relevant details from the WWDC sessions:

- Accelerate Framework for Machine Learning:

  - The Accelerate framework includes BNNS Graph, a new API designed to run machine learning models optimally on the CPU. It offers significantly better performance than the older kernel-based BNNS API and works with Core ML models to enable real-time, latency-sensitive inference. This is covered in the session Explore machine learning on Apple platforms.

-

Matrix Multiplications in Transformer Models:

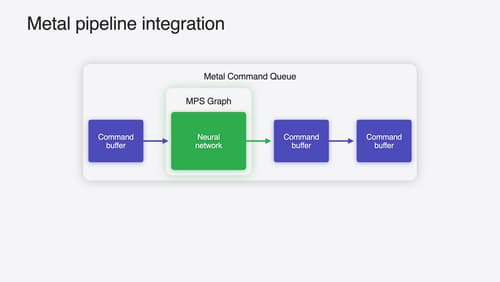

- Transformer models, which are made up of layers of transformer blocks, involve large multi-dimensional matrix multiplications. These operations are compute-heavy and are optimized using the MPS Graph in Metal. This is discussed in the session Accelerate machine learning with Metal.

- Core ML and Metal Performance Shaders:

  - For apps with heavy workloads, Metal provides ways to sequence machine learning tasks with other workloads using Metal Performance Shaders, which can help manage overall performance. This is highlighted in the session Platforms State of the Union.
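To make the transformer point concrete, here is a minimal pure-Python sketch of single-head scaled dot-product attention, softmax(Q Kᵀ / √d) V, which needs two matrix multiplications per head. It is a naive reference for illustration only: it does not use Accelerate, MPSGraph, or Metal, and the helper names (`matmul`, `transpose`, `softmax_rows`, `attention`) are hypothetical, not part of any Apple API.

```python
import math


def matmul(a, b):
    """Naive reference product of row-major nested lists: (m x k) @ (k x n)."""
    k, n = len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)] for row in a]


def transpose(m):
    """Swap rows and columns of a row-major nested list."""
    return [list(col) for col in zip(*m)]


def softmax_rows(x):
    """Apply softmax to each row independently."""
    out = []
    for row in x:
        mx = max(row)  # subtract the row max so exp() cannot overflow
        exps = [math.exp(v - mx) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q, k, v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    q, k, v are (seq x d) matrices; the result is (seq x d).
    """
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    # Matrix multiply #1: (seq x d) @ (d x seq) -> seq x seq attention scores.
    scores = [[s * scale for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)
    # Matrix multiply #2: (seq x seq) @ (seq x d) -> seq x d output.
    return matmul(weights, v)


if __name__ == "__main__":
    q = k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    out = attention(q, k, v)
    print([[round(x, 4) for x in row] for row in out])
    # [[1.6605, 2.6605], [2.3395, 3.3395]]
```

The score matrix is seq × seq, so the first product grows quadratically with sequence length. That is one reason these multiplications dominate transformer inference and are worth handing to an optimized backend such as MPSGraph rather than running as plain loops like the ones above.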

Relevant Sessions

- Deploy machine learning and AI models on-device with Core ML

- Explore machine learning on Apple platforms

- Train your machine learning and AI models on Apple GPUs

- Accelerate machine learning with Metal

- Platforms State of the Union

If you need more specific details or timestamps, please let me know!

Deploy machine learning and AI models on-device with Core ML

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching which can be used together to create compelling and private on-device experiences.

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Accelerate machine learning with Metal

Learn how to accelerate your machine learning transformer models with new features in Metal Performance Shaders Graph. We’ll also cover how to improve your model’s compute bandwidth and quality, and visualize it in the all-new MPSGraph viewer.