How does the updated Vision Pro SDK work for creating AR experiences?

Asked on 2024-08-06

The updated Vision Pro SDK offers several new features and improvements for creating AR experiences. Here are some key highlights:

- Hand Interaction: You can now decide whether the user's hands appear in front of or behind the content, providing more creative control over the app experience. This is detailed in the Platforms State of the Union session.

- Enhanced Scene Understanding: The SDK significantly improves the fidelity of its scene understanding capabilities. Planes can now be detected in all orientations, so you can anchor objects on a wider variety of surfaces. Room anchors take the user's surroundings into account on a per-room basis, and you can detect when the user moves from one room to another. This is also covered in the Platforms State of the Union session.

- Object Tracking API: A new object tracking API for visionOS allows you to attach content to individual objects found around the user. This can be used to attach virtual content like instructions to physical objects, adding new dimensions of interactivity. More details can be found in the Explore object tracking for visionOS session.

- ARKit Enhancements: The ARKit framework has received several updates, including improvements to room tracking, plane detection, and hand tracking. These updates let you customize experiences for the room the user is in and improve interaction with the surroundings. This is discussed in the Create enhanced spatial computing experiences with ARKit session.

- Integration with Existing Frameworks: visionOS is built on the foundation of macOS, iOS, and iPadOS, meaning many tools and frameworks are common across these platforms. SwiftUI, RealityKit, and ARKit are core to developing spatial apps for visionOS. If your app already uses these frameworks, you are well on your way to creating a great spatial computing app. This is highlighted in the Platforms State of the Union session.
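
To make the scene understanding and ARKit points above more concrete, here is a minimal Swift sketch of running plane detection and room tracking together. It is an illustration under assumptions, not code from the sessions: the class name `SceneUnderstandingModel` is hypothetical, the `.slanted` alignment is assumed to be how angled surfaces are requested in the updated SDK, and the app is assumed to have an open immersive space and a world-sensing usage description (`NSWorldSensingUsageDescription`) in its Info.plist.

```swift
import ARKit

// Hypothetical model type for illustration; it only does useful work on
// Apple Vision Pro hardware while an immersive space is open.
@MainActor
final class SceneUnderstandingModel {
    private let session = ARKitSession()

    // Assumption: `.slanted` covers angled surfaces in addition to the
    // horizontal and vertical alignments.
    private let planeDetection = PlaneDetectionProvider(
        alignments: [.horizontal, .vertical, .slanted]
    )
    private let roomTracking = RoomTrackingProvider()

    // Starts both data providers on one ARKit session.
    func run() async {
        guard PlaneDetectionProvider.isSupported, RoomTrackingProvider.isSupported else {
            print("Plane detection or room tracking is not supported here.")
            return
        }
        do {
            try await session.run([planeDetection, roomTracking])
        } catch {
            print("Failed to start the ARKit session: \(error)")
        }
    }

    // Logs each plane as it is added, refined, or removed.
    func processPlaneUpdates() async {
        for await update in planeDetection.anchorUpdates {
            let plane = update.anchor
            switch update.event {
            case .added, .updated:
                print("Plane \(plane.id): \(plane.alignment), \(plane.classification)")
            case .removed:
                print("Plane \(plane.id) removed")
            }
        }
    }

    // Logs the anchor for the room the user is currently in.
    func processRoomUpdates() async {
        for await update in roomTracking.anchorUpdates where update.anchor.isCurrentRoom {
            print("Current room anchor: \(update.anchor.id)")
        }
    }
}
```

One way to use this is to call `run()`, `processPlaneUpdates()`, and `processRoomUpdates()` from separate `.task` modifiers on the `RealityView` inside your immersive space, so the two update loops run concurrently.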

For more detailed information, you can refer to the following sessions:

- Platforms State of the Union

- Create enhanced spatial computing experiences with ARKit

- Explore object tracking for visionOS

These sessions cover the new capabilities in more depth and show how to use them to build immersive AR experiences.

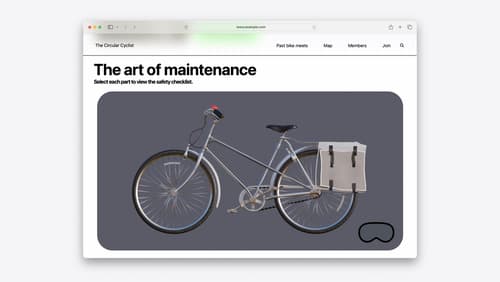

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.
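
As a rough sketch of the ARKit route described above, the following Swift code loads a reference object and keeps a small marker entity aligned with it. The names `ObjectTrackingModel`, `root`, and `referenceObjectURL` are hypothetical, and the URL is assumed to point at a `.referenceobject` file you exported from Create ML and bundled with the app. Like other ARKit data providers, this is assumed to run only on device inside an immersive space.

```swift
import ARKit
import RealityKit

// Hypothetical model type for illustration only.
@MainActor
final class ObjectTrackingModel {
    private let session = ARKitSession()
    private var markers: [UUID: Entity] = [:]

    // Add this entity to your RealityView content so the markers are rendered.
    let root = Entity()

    // Loads the reference object, starts tracking, and handles updates until cancelled.
    func run(referenceObjectURL: URL) async {
        guard ObjectTrackingProvider.isSupported else {
            print("Object tracking is not supported here.")
            return
        }
        do {
            let referenceObject = try await ReferenceObject(from: referenceObjectURL)
            let provider = ObjectTrackingProvider(referenceObjects: [referenceObject])
            try await session.run([provider])
            for await update in provider.anchorUpdates {
                handle(update)
            }
        } catch {
            print("Object tracking failed: \(error)")
        }
    }

    private func handle(_ update: AnchorUpdate<ObjectAnchor>) {
        let anchor = update.anchor
        switch update.event {
        case .added:
            // Create a small sphere to stand in for real content such as instructions.
            let marker = ModelEntity(
                mesh: .generateSphere(radius: 0.02),
                materials: [SimpleMaterial(color: .green, isMetallic: false)]
            )
            markers[anchor.id] = marker
            root.addChild(marker)
            fallthrough
        case .updated:
            // Move the marker to the object's pose and hide it while tracking is lost.
            markers[anchor.id]?.transform = Transform(matrix: anchor.originFromAnchorTransform)
            markers[anchor.id]?.isEnabled = anchor.isTracked
        case .removed:
            markers.removeValue(forKey: anchor.id)?.removeFromParent()
        }
    }
}
```

The sphere is only a placeholder. In a real app you would likely attach your own entities, or use the Reality Composer Pro or RealityKit approaches the session also describes.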

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

Design great visionOS apps

Find out how to create compelling spatial computing apps by embracing immersion, designing for eyes and hands, and taking advantage of depth, scale, and space. We’ll share several examples of great visionOS apps and explore how their designers approached creating new experiences for the platform.