What's the difference between AVKit and CoreVideo?

Asked on 2024-08-06

The primary difference between AVKit and CoreVideo lies in their purposes and functionalities within the Apple ecosystem:

AVKit:

- Purpose: AVKit is a high-level framework designed to simplify the playback of audio and video content. It provides the standard system playback interface (transport controls, Picture in Picture, AirPlay route selection) so an app does not have to build its own.

- Key Features:

- AVPlayerViewController: A view controller that displays video from an AVPlayer together with the system playback controls. On visionOS it also offers features like multiview for watching several video streams at once (Explore multiview video playback in visionOS).

- Integration: Works with AVFoundation and RealityKit to handle 2D and 3D content, including spatial audio and video (Explore multiview video playback in visionOS).

- Customization: Allows developers to create custom video experiences and plays HTTP Live Streaming (HLS) content through the underlying AVPlayer from AVFoundation (Build compelling spatial photo and video experiences).
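
As a rough illustration of the high-level side, the sketch below wires an AVPlayer into an AVPlayerViewController. The helper name `makePlayerViewController` and the example URL are made up for illustration and do not come from the sessions cited here. It assumes a UIKit-based target (iOS, tvOS, or visionOS).

```swift
import AVKit   // also makes AVFoundation's AVPlayer available
import UIKit

/// Hypothetical helper: builds the system playback UI for a media URL.
/// Marked @MainActor because view controllers must be created on the main thread.
@MainActor
func makePlayerViewController(for url: URL) -> AVPlayerViewController {
    let controller = AVPlayerViewController()
    // AVKit supplies the UI; the AVPlayer does the actual playback work.
    controller.player = AVPlayer(url: url)
    return controller
}
```

From inside a view controller this could be used as `let vc = makePlayerViewController(for: url)` followed by `present(vc, animated: true) { vc.player?.play() }`. Note that no frame-level code appears anywhere: decoding, rendering, and controls are all handled by the framework.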

CoreVideo:

- Purpose: CoreVideo is a lower-level framework that provides a pipeline model for managing and manipulating video frames. It works with individual frames as pixel buffers and has no playback interface of its own; its focus is efficient handling of video data and synchronization.

- Key Features:

- Buffer Management: Manages video buffers (for example CVPixelBuffer, and CVPixelBufferPool for reusing them) and provides services for manipulating video frames.

- Synchronization: Works closely with other frameworks like CoreMedia and CoreAnimation to ensure smooth video playback and processing.
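
To make the lower-level side concrete, here is a minimal sketch of allocating a single frame as a CVPixelBuffer and writing to its memory directly. The helper names `makePixelBuffer` and `fill` are hypothetical, and the choice of a 32-bit BGRA format is an assumption for the example; real pipelines usually receive buffers from a capture or decode source rather than creating them by hand.

```swift
import CoreVideo

/// Hypothetical helper: allocates an empty 32-bit BGRA pixel buffer.
func makePixelBuffer(width: Int, height: Int) -> CVPixelBuffer? {
    var buffer: CVPixelBuffer?
    let status = CVPixelBufferCreate(kCFAllocatorDefault,
                                     width, height,
                                     kCVPixelFormatType_32BGRA,
                                     nil,        // no extra buffer attributes
                                     &buffer)
    return status == kCVReturnSuccess ? buffer : nil
}

/// Hypothetical helper: sets every byte of the buffer (including row padding) to `value`.
func fill(_ buffer: CVPixelBuffer, with value: UInt8) {
    // The base address is only valid while the buffer is locked.
    CVPixelBufferLockBaseAddress(buffer, [])
    defer { CVPixelBufferUnlockBaseAddress(buffer, []) }

    guard let base = CVPixelBufferGetBaseAddress(buffer) else { return }
    // Rows may be padded, so use bytes-per-row rather than width * 4.
    let byteCount = CVPixelBufferGetBytesPerRow(buffer) * CVPixelBufferGetHeight(buffer)
    base.initializeMemory(as: UInt8.self, repeating: value, count: byteCount)
}
```

For example, `if let frame = makePixelBuffer(width: 640, height: 480) { fill(frame, with: 0) }` produces a cleared frame. The explicit lock, raw pointer, and row-stride handling show the kind of detail CoreVideo exposes and AVKit hides.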

In summary, AVKit provides a high-level, ready-made interface for media playback and builds on other Apple frameworks to do the underlying work. CoreVideo sits much lower in the stack: it manages and processes the video data itself, providing the tools for efficient frame handling and synchronization. Reach for AVKit to play media, and for CoreVideo when you need to work with individual frames.

Explore multiview video playback in visionOS

Learn how AVExperienceController can enable playback of multiple videos on Apple Vision Pro. Review best practices for adoption and explore great use cases, like viewing a sports broadcast from different angles or watching multiple games simultaneously. And discover how to design a compelling and intuitive multiview experience in your app.

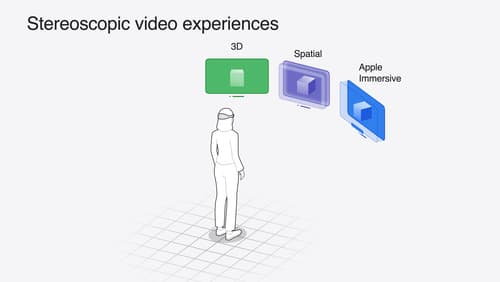

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.