What should I look at to study Combine? Please answer in Korean.

Asked on 2024-08-07


To study Combine, you can refer to several WWDC sessions. The following sessions in particular may be helpful:

-

Platforms State of the Union: 이 세션에서는 Apple의 새로운 기술과 도구들에 대한 전반적인 개요를 제공합니다. Combine과 관련된 내용도 포함될 수 있습니다.

-

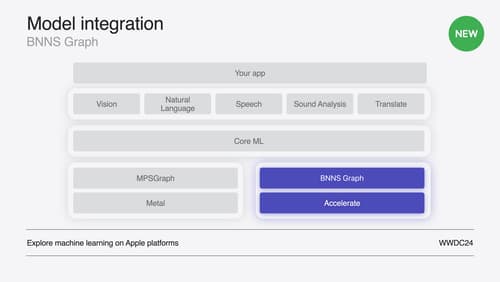

Support real-time ML inference on the CPU: 이 세션에서는 BNNS Graph를 소개하고, Swift와 SwiftUI를 사용하여 실시간 처리에 대해 설명합니다. Combine을 사용한 데이터 처리와 관련된 내용이 포함될 수 있습니다.

-

Deploy machine learning and AI models on-device with Core ML: 이 세션에서는 Core ML을 사용하여 기기 내에서 머신러닝 모델을 배포하는 방법을 다룹니다. Combine을 사용하여 모델의 입력과 출력을 처리하는 방법에 대한 내용이 포함될 수 있습니다.

These sessions may offer examples and hands-on material showing how Combine can be applied.
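For orientation while studying, here is a minimal sketch of Combine's core model. It assumes an Apple platform where the Combine framework is available (macOS 10.15+ / iOS 13+). A publisher emits values, operators such as `map` and `filter` transform them, and a subscriber created with `sink` receives the results. The variable names and values are illustrative only.

```swift
import Combine

// Collects every value that reaches the subscriber (illustrative name).
var received: [Int] = []

// A subject is a publisher that values can be pushed into manually.
let subject = PassthroughSubject<Int, Never>()

// Build the pipeline: double each value, keep only results above 2,
// then deliver them to a sink subscriber.
let cancellable = subject
    .map { $0 * 2 }
    .filter { $0 > 2 }
    .sink { value in
        received.append(value)
    }

// Emit values; a PassthroughSubject delivers them synchronously here.
subject.send(1) // becomes 2, filtered out
subject.send(2) // becomes 4, passes
subject.send(3) // becomes 6, passes
subject.send(completion: .finished)

print(received) // [4, 6]
```

Holding on to `cancellable` (an `AnyCancellable`) keeps the subscription alive. Releasing it cancels the subscription, which is why real code usually stores it in a property or a `Set<AnyCancellable>`.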

Platforms State of the Union

Discover the newest advancements on Apple platforms.

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU and share how it provides real-time guarantees such as no runtime memory allocation and single-threaded running for audio or signal processing models.