Reality Composer

Asked on 2024-08-07

Reality Composer Pro was a significant focus at WWDC 2024, with multiple sessions highlighting its capabilities and new features. Here are some key points:

Creating Custom Environments:

- In the session "Create custom environments for your immersive apps in visionOS", Reality Composer Pro is used to design, create, and optimize environments for visionOS, including adding elements such as video components to 3D scenes.
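
The sketch below shows one way a scene authored in Reality Composer Pro might be loaded into a visionOS app and given a video surface. It assumes the `RealityKitContent` package that Xcode's visionOS app template generates, a scene named `"Immersive"`, an entity named `"VideoScreen"`, and a placeholder video URL. All of these names are hypothetical, and the session itself may set up video differently (for example, entirely inside Reality Composer Pro).

```swift
import AVFoundation
import RealityKit
import RealityKitContent // package generated by Xcode's visionOS template (assumed)
import SwiftUI

struct ImmersiveVideoView: View {
    // Hypothetical placeholder; replace with a real video asset or stream.
    let videoURL = URL(string: "https://example.com/video.m3u8")!

    var body: some View {
        RealityView { content in
            // Load the scene authored in Reality Composer Pro ("Immersive" is a hypothetical name).
            guard let scene = try? await Entity(named: "Immersive", in: realityKitContentBundle) else {
                return
            }
            content.add(scene)

            // Attach a video player to a hypothetical entity named "VideoScreen".
            if let screen = scene.findEntity(named: "VideoScreen") {
                let player = AVPlayer(url: videoURL)
                screen.components.set(VideoPlayerComponent(avPlayer: player))
                player.play()
            }
        }
    }
}
```

Keeping the scene layout in Reality Composer Pro and only attaching runtime-specific pieces (like the `AVPlayer`) in code is a common split, but it is a design choice rather than a requirement.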

Object Tracking:

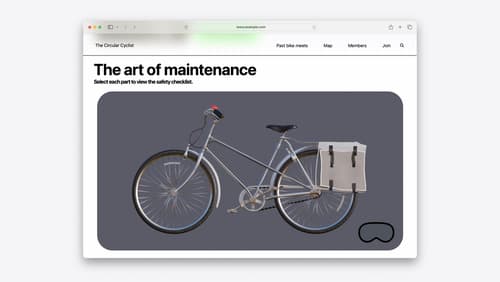

- The session "Explore object tracking for visionOS" demonstrates how Reality Composer Pro supports object tracking: it creates a new Xcode project and sets up an object anchor in it.
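
For comparison with an anchor set up in Reality Composer Pro, here is a minimal sketch of object tracking driven from code with ARKit on visionOS 2, based on my understanding of the `ObjectTrackingProvider` API. It assumes a reference object file bundled as `Mug.referenceobject` (for example, one trained with Create ML); that file name and the `ObjectTracker` class are hypothetical.

```swift
import ARKit
import Foundation

/// Hypothetical helper that tracks one reference object and logs its pose.
final class ObjectTracker {
    private let session = ARKitSession()

    func run(referenceObjectName: String = "Mug") async throws {
        // Bail out if object tracking is unavailable or the (assumed) file is missing.
        guard ObjectTrackingProvider.isSupported,
              let url = Bundle.main.url(forResource: referenceObjectName,
                                        withExtension: "referenceobject") else {
            return
        }

        // Load the reference object and start a provider that looks for it.
        let referenceObject = try await ReferenceObject(from: url)
        let provider = ObjectTrackingProvider(referenceObjects: [referenceObject])
        try await session.run([provider])

        // React as ARKit adds, updates, or removes the object anchor.
        for await update in provider.anchorUpdates {
            let anchor = update.anchor
            switch update.event {
            case .added, .updated:
                // Translation column of the anchor's world transform.
                print("Object at", anchor.originFromAnchorTransform.columns.3)
            case .removed:
                print("Object lost")
            }
        }
    }
}
```

In a real app the pose would typically drive a RealityKit entity rather than a `print`, and the app may also need the appropriate world-sensing usage description in its Info.plist.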

Media Viewing Immersion:

- In "Enhance the immersion of media viewing in custom environments", Reality Composer Pro is used to prepare 3D content and visualize the experience, for example by adding a video dock preset to a project.

Interactive 3D Content:

- The session "Compose interactive 3D content in Reality Composer Pro" introduces new features such as timelines, which provide an interactive way to animate 3D content.

Optimizing 3D Assets:

- In "Discover area mode for Object Capture", Reality Composer Pro is used to combine and edit 3D assets so they are ready for Apple Vision Pro.

Debugging with RealityKit:

- The session "Break into the RealityKit debugger" shows how to use the RealityKit debugger to capture and explore 3D snapshots of running apps, which can help when debugging Reality Composer Pro projects.

Cross-Platform Capabilities:

- The "Platforms State of the Union" session highlights that Reality Composer Pro and RealityKit APIs are now aligned across macOS, iOS, and iPadOS, making it easier to build spatial apps for multiple platforms.
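
To make the cross-platform point concrete, the following minimal sketch builds a SwiftUI `RealityView` whose contents are created entirely in code. It assumes the 2024 SDKs (iOS 18, iPadOS 18, macOS 15, visionOS 2), where `RealityView` is available on each of these platforms; the view name is illustrative, and a real app would likely also load content from its Reality Composer Pro package.

```swift
import RealityKit
import SwiftUI

/// Illustrative view intended to compile unchanged for iOS, macOS, and visionOS targets.
struct SharedBoxView: View {
    var body: some View {
        RealityView { content in
            // A 10 cm box; SimpleMaterial's color type resolves to UIColor or NSColor per platform.
            let box = ModelEntity(
                mesh: .generateBox(size: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            box.name = "SharedBox"
            content.add(box)
        }
    }
}
```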

For more detailed information, you can refer to the specific sessions mentioned above.

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

Enhance the immersion of media viewing in custom environments

Extend your media viewing experience using Reality Composer Pro components like Docking Region, Reverb, and Virtual Environment Probe. Find out how to further enhance immersion using Reflections, Tint Surroundings Effect, SharePlay, and the Immersive Environment Picker.

Discover RealityKit APIs for iOS, macOS and visionOS

Learn how new cross-platform APIs in RealityKit can help you build immersive apps for iOS, macOS, and visionOS. Check out the new hover effects, lights and shadows, and portal crossing features, and view them in action through real examples.
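
Hover effects in RealityKit are applied per entity. As a minimal sketch, assuming the visionOS SDK (behavior on other platforms may differ), an entity needs an input target and collision shapes before a `HoverEffectComponent` has anything to react to. The helper name `makeHoverable` is hypothetical, and the newer hover styles shown in the session are not covered here.

```swift
import RealityKit

/// Hypothetical helper: makes a model entity respond to gaze or pointer hover.
@MainActor
func makeHoverable(_ entity: ModelEntity) {
    // Hover effects only apply to entities that can receive input...
    entity.components.set(InputTargetComponent())
    // ...and that have collision shapes to hit-test against.
    entity.generateCollisionShapes(recursive: true)
    // Use the default hover effect style.
    entity.components.set(HoverEffectComponent())
}
```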