How do I get started building a transcription feature in my app?

Asked on 2024-08-07


To get started with adding a transcription feature to your app, you can leverage Apple's machine learning APIs, specifically those that handle speech-to-text conversion. Here are some steps and resources to help you:

- Explore Machine Learning APIs: Apple provides several machine learning frameworks. For speech recognition and transcription, the relevant one is the Speech framework; the Vision framework handles image and video analysis rather than audio. For an overview of the available frameworks, refer to the session Explore machine learning on Apple platforms.

- Speech Recognition Implementation: To implement speech recognition, use the Speech framework, which can transcribe speech in real time or from audio files. A typical setup looks like this:

  - Request Authorization: Before using the speech recognizer, request authorization from the user with `SFSpeechRecognizer.requestAuthorization`, and add an `NSSpeechRecognitionUsageDescription` entry to your Info.plist.

  - Create a Speech Recognizer: Initialize an `SFSpeechRecognizer` object.

  - Create a Recognition Request: Use `SFSpeechAudioBufferRecognitionRequest` for real-time recognition or `SFSpeechURLRecognitionRequest` for recognizing speech from an audio file.

  - Start Recognition: Use the recognizer to start the recognition task and handle the results.
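The four steps above can be sketched for the audio-file case roughly as follows. This is a minimal sketch, not a definitive implementation: the names `FileTranscriber`, `TranscriptionError`, and `makeFileRequest(for:)` are hypothetical, the `en-US` locale is an assumption, and the app is assumed to declare `NSSpeechRecognitionUsageDescription` in its Info.plist.

```swift
import Foundation
import Speech

// Hypothetical error type for this sketch.
enum TranscriptionError: Error {
    case notAuthorized
    case recognizerUnavailable
}

/// Builds a file-based request. Partial results are turned off so the
/// result handler only has to deal with the final transcript.
func makeFileRequest(for url: URL) -> SFSpeechURLRecognitionRequest {
    let request = SFSpeechURLRecognitionRequest(url: url)
    request.shouldReportPartialResults = false
    return request
}

final class FileTranscriber {
    // Held as properties so the recognizer and task outlive the call.
    private let recognizer: SFSpeechRecognizer?
    private var task: SFSpeechRecognitionTask?

    init(locale: Locale = Locale(identifier: "en-US")) {  // assumed locale
        recognizer = SFSpeechRecognizer(locale: locale)
    }

    func transcribe(fileAt url: URL,
                    completion: @escaping (Result<String, Error>) -> Void) {
        // Step 1: request authorization (the callback may arrive off the main queue).
        SFSpeechRecognizer.requestAuthorization { [weak self] status in
            guard let self = self else { return }
            guard status == .authorized else {
                completion(.failure(TranscriptionError.notAuthorized))
                return
            }
            // Step 2: make sure a recognizer exists for the locale and is usable.
            guard let recognizer = self.recognizer, recognizer.isAvailable else {
                completion(.failure(TranscriptionError.recognizerUnavailable))
                return
            }
            // Steps 3 and 4: create the request and start the recognition task.
            self.task = recognizer.recognitionTask(with: makeFileRequest(for: url)) { result, error in
                if let error = error {
                    completion(.failure(error))
                } else if let result = result, result.isFinal {
                    completion(.success(result.bestTranscription.formattedString))
                }
            }
        }
    }
}
```

A caller would keep a `FileTranscriber` instance alive (for example as a property of a view model), call `transcribe(fileAt:completion:)` with a local audio file URL, and hop back to the main queue before updating the UI.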

If your app is web-based, the session Optimize for the spatial web covers dictation and text-to-speech with WebSpeech on visionOS. Note that WebSpeech is a web standard and is separate from the Speech framework described above.
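For the real-time case, the `SFSpeechAudioBufferRecognitionRequest` mentioned earlier can be fed from the microphone through `AVAudioEngine`. The sketch below assumes speech and microphone permissions have already been granted (the latter needs an `NSMicrophoneUsageDescription` entry); `LiveTranscriber` and `LiveTranscriptionError` are hypothetical names, the `en-US` locale and the buffer size are assumptions, and on iOS you would also need to configure and activate an `AVAudioSession` for recording, which is omitted here.

```swift
import AVFoundation
import Speech

// Hypothetical error type for this sketch.
enum LiveTranscriptionError: Error {
    case recognizerUnavailable
}

final class LiveTranscriber {
    private let audioEngine = AVAudioEngine()
    private let recognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-US")) // assumed locale
    private var request: SFSpeechAudioBufferRecognitionRequest?
    private var task: SFSpeechRecognitionTask?

    /// Starts streaming microphone audio to the recognizer and reports
    /// each partial transcript through `onUpdate`.
    func start(onUpdate: @escaping (String) -> Void) throws {
        guard let recognizer = recognizer, recognizer.isAvailable else {
            throw LiveTranscriptionError.recognizerUnavailable
        }

        let request = SFSpeechAudioBufferRecognitionRequest()
        request.shouldReportPartialResults = true
        self.request = request

        // Forward every captured buffer to the recognition request.
        let input = audioEngine.inputNode
        let format = input.outputFormat(forBus: 0)
        input.installTap(onBus: 0, bufferSize: 1024, format: format) { buffer, _ in
            request.append(buffer)
        }

        audioEngine.prepare()
        try audioEngine.start()

        task = recognizer.recognitionTask(with: request) { [weak self] result, error in
            if let result = result {
                onUpdate(result.bestTranscription.formattedString)
            }
            // Tear down once the recognizer finishes or reports an error.
            if error != nil || result?.isFinal == true {
                self?.stop()
            }
        }
    }

    /// Stops capturing audio and tells the recognizer no more audio is coming.
    func stop() {
        audioEngine.stop()
        audioEngine.inputNode.removeTap(onBus: 0)
        request?.endAudio()
        request = nil
        task = nil
    }
}
```

Calling `start(onUpdate:)` begins transcription and `stop()` ends it; the `onUpdate` closure receives the best transcript so far each time the recognizer revises it.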

- Using Create ML for Custom Models: Create ML lets you train models on your own data. It does not replace the Speech framework's recognizer, but it may help with complementary tasks around a transcription feature, for example classifying sounds in the audio or categorizing the transcribed text. For more information, check out the session Explore machine learning on Apple platforms.

By following these steps and utilizing the provided resources, you should be able to integrate a robust transcription feature into your app. If you need more detailed guidance, you can refer to the specific sessions mentioned above for in-depth explanations and code examples.

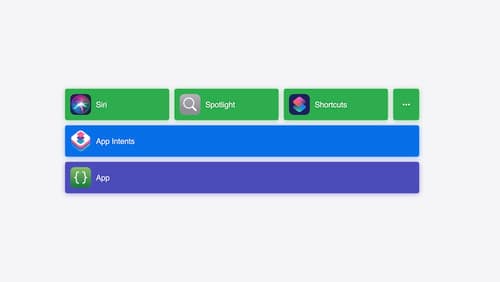

Bring your app’s core features to users with App Intents

Learn the principles of the App Intents framework, like intents, entities, and queries, and how you can harness them to expose your app’s most important functionality right where people need it most. Find out how to build deep integration between your app and the many system features built on top of App Intents, including Siri, controls and widgets, Apple Pencil, Shortcuts, the Action button, and more. Get tips on how to build your App Intents integrations efficiently to create the best experiences in every surface while still sharing code and core functionality.

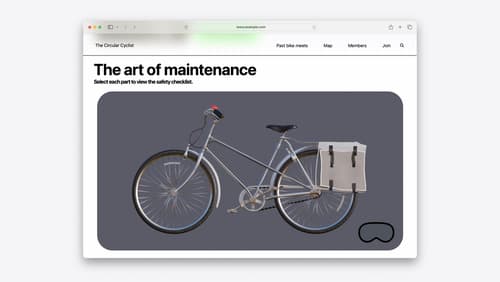

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.