How do I use UIPasteboard's detectPatterns(for:completionHandler:)?

Asked on 2024-07-30


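`UIPasteboard`'s `detectPatterns(for:completionHandler:)` (iOS 14 and later) tells you which of the patterns you ask about, such as numbers or web URLs, are present on the pasteboard. It does not return the pasted values themselves. To use it, follow these steps: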

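- Choose the patterns: Build a `Set` of `UIPasteboard.DetectionPattern` values to look for, such as `.number` or `.probableWebURL`.

- Perform the detection: Call `detectPatterns(for:completionHandler:)` on a pasteboard, typically `UIPasteboard.general`, and pass it that set.

- Handle the results: In the completion handler, switch on the `Result` to get either the set of patterns that matched or an error.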

Here is a basic example in Swift:

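```swift
import UIKit

let pasteboard = UIPasteboard.general

// The method takes a Set of patterns, not an Array.
// .probableWebURL matches pasteboard content that looks like a web URL.
let patterns: Set<UIPasteboard.DetectionPattern> = [.number, .probableWebURL]

// Requires iOS 14 or later. The handler receives a single Result value.
pasteboard.detectPatterns(for: patterns) { result in
    switch result {
    case .success(let detectedPatterns):
        // Only the patterns that matched are reported, not the values.
        for pattern in detectedPatterns {
            switch pattern {
            case .number:
                print("Number detected")
            case .probableWebURL:
                print("Link detected")
            default:
                break
            }
        }
    case .failure(let error):
        print("Error detecting patterns: \(error)")
    }
}
```

In this example:

- `UIPasteboard.general` is used to access the general pasteboard.
- `detectPatterns(for:completionHandler:)` is called with a set of patterns to detect (here, `.number` and `.probableWebURL`).
- The completion handler receives a single `Result`: on success it loops over the patterns that matched, and on failure it prints the error.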

If you prefer async/await to completion handlers, the session Go further with Swift Testing (24:51) may help: that segment shows how to convert completion handlers to async/await syntax in Swift.

Go further with Swift Testing

Learn how to write a sweet set of (test) suites using Swift Testing’s baked-in features. Discover how to take the building blocks further and use them to help expand tests to cover more scenarios, organize your tests across different suites, and optimize your tests to run in parallel.
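Applied to this API, the completion-handler-to-async/await conversion could look like the minimal sketch below. It assumes Swift 5.7 or later, and `awaitResult(_:)` and `detectedPatternsAsync(for:)` are hypothetical helper names, not UIKit API. The generic adapter relies on the standard library's `withCheckedThrowingContinuation`, and the `UIPasteboard` extension is compiled only when building for iOS.

```swift
import Foundation

#if os(iOS)
import UIKit
#endif

/// Hypothetical helper: bridges a callback API that reports one `Result`
/// into async/await. `body` must call its handler exactly once; a checked
/// continuation flags a double resume or a leaked continuation at runtime.
func awaitResult<T>(
    _ body: (@escaping (Result<T, Error>) -> Void) -> Void
) async throws -> T {
    try await withCheckedThrowingContinuation { continuation in
        // Forward either the success value or the error to the awaiting caller.
        body { result in
            continuation.resume(with: result)
        }
    }
}

#if os(iOS)
@available(iOS 14.0, *)
extension UIPasteboard {
    /// Hypothetical async wrapper around detectPatterns(for:completionHandler:).
    func detectedPatternsAsync(
        for patterns: Set<UIPasteboard.DetectionPattern>
    ) async throws -> Set<UIPasteboard.DetectionPattern> {
        try await awaitResult { handler in
            detectPatterns(for: patterns, completionHandler: handler)
        }
    }
}
#endif
```

From an async context, a call would then look like `let found = try await UIPasteboard.general.detectedPatternsAsync(for: [.number])`, followed by checks such as `found.contains(.number)`. Keeping the continuation logic in a generic adapter means other `Result`-based callbacks can reuse it.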

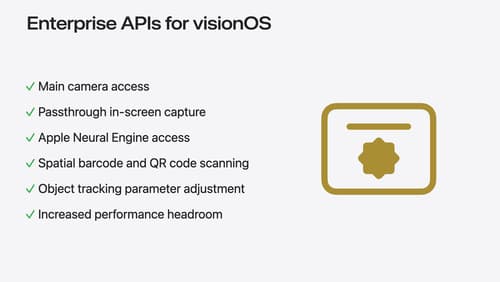

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.