What are the latest RealityKit additions?

Asked on 2024-08-08


The latest additions to RealityKit, as presented at WWDC 2024, include several significant updates and new features:

- Cross-Platform Alignment: RealityKit 4 aligns its feature set across macOS, iOS, iPadOS, and visionOS, so developers can build for all of these platforms simultaneously. Features such as MaterialX, portals, and particles are now available on all of them (Platforms State of the Union).

- Advanced Character Animation: New APIs for blend shapes, inverse kinematics, and animation timelines expand character animation capabilities and enable dynamic interactions (Platforms State of the Union).

- Low-Level Access: New low-level mesh and texture APIs give apps finer control over how their content looks. They work with Metal compute shaders to enable fully dynamic models and textures (Platforms State of the Union).

- Shader Graph: MaterialX shaders built with Shader Graph, the shader creation system in Reality Composer Pro, are now supported on all platforms, ensuring consistent visuals across visionOS, iOS, iPadOS, and macOS (What’s new in USD and MaterialX).

- Enhanced Debugging: Xcode's view debugging now supports introspecting 3D scene content, letting developers explore scene object hierarchies and inspect their properties (Platforms State of the Union).

- New Components and Features:
  - Billboard Component: Keeps an entity facing the user at all times.
  - Pixel Cast: Enables pixel-perfect entity selection.
  - Subdivision Surface: Renders smooth surfaces without requiring a dense mesh (Discover RealityKit APIs for iOS, macOS and visionOS).

- Hand Tracking: Improved hand tracking lets developers choose between continuous and predicted hand tracking, depending on their needs (Create enhanced spatial computing experiences with ARKit).
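To make the cross-platform alignment more concrete, here is a minimal sketch of a portal looking into a small "world" that contains a sphere with a particle emitter. It assumes RealityKit 4, where `WorldComponent`, `PortalComponent`, `PortalMaterial`, and `ParticleEmitterComponent` are available on iOS, macOS, and visionOS alike. The helper name `makePortalScene`, the sizes, and the choice of the `sparks` preset are illustrative assumptions, not code from the WWDC sessions.

```swift
import RealityKit

/// Hypothetical helper (not an Apple API): builds a portal that looks into a
/// separate world containing a sphere surrounded by sparks.
func makePortalScene() -> Entity {
    let root = Entity()

    // Entities under a WorldComponent are rendered only when viewed through a portal.
    let world = Entity()
    world.components.set(WorldComponent())

    let sphere = ModelEntity(
        mesh: .generateSphere(radius: 0.1),
        materials: [SimpleMaterial(color: .blue, isMetallic: false)]
    )
    sphere.position = [0, 0, -0.5]  // place the sphere behind the portal plane

    // Start from a built-in particle preset and wrap the emitter around the sphere.
    var particles = ParticleEmitterComponent.Presets.sparks
    particles.emitterShape = .sphere
    particles.emitterShapeSize = [0.15, 0.15, 0.15]
    sphere.components.set(particles)

    world.addChild(sphere)

    // The portal itself is a plane whose PortalMaterial displays the target world.
    let portal = Entity()
    portal.components.set(ModelComponent(
        mesh: .generatePlane(width: 0.5, height: 0.5, cornerRadius: 0.05),
        materials: [PortalMaterial()]
    ))
    portal.components.set(PortalComponent(target: world))

    // Both the world and the portal must be in the scene for the portal to render.
    root.addChild(world)
    root.addChild(portal)
    return root
}
```

Because nothing here is platform-specific, the same function could be added to a `RealityView` on iPhone, Mac, or Apple Vision Pro.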
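The Billboard Component is attached like any other component. This sketch assumes `BillboardComponent` with its default initializer, as introduced in RealityKit 4; the helper name `makeBillboardLabel` and the plane dimensions are hypothetical.

```swift
import RealityKit

/// Hypothetical helper (not an Apple API): a small plane that stays turned toward the user.
func makeBillboardLabel() -> ModelEntity {
    let label = ModelEntity(
        mesh: .generatePlane(width: 0.2, height: 0.1),
        materials: [UnlitMaterial(color: .white)]
    )
    // With BillboardComponent set, RealityKit keeps re-orienting the entity
    // toward the user, so the app does not need its own look-at logic.
    label.components.set(BillboardComponent())
    return label
}
```

Handling orientation in a component rather than in per-frame app code keeps the entity setup identical across platforms.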
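The choice between continuous and predicted hand tracking can be sketched with an `AnchorEntity`. This assumes the `AnchorEntity(_:trackingMode:)` initializer and the `.predicted` case of `AnchoringComponent.TrackingMode` available in visionOS 2; hand targets exist only on visionOS, so the code is wrapped in `#if os(visionOS)`. The helper name `makePalmMarker` and the marker size are hypothetical.

```swift
import RealityKit

#if os(visionOS)
/// Hypothetical helper (not an Apple API): anchors a small sphere to the left palm.
/// `predicted` selects between the two tracking modes described above.
func makePalmMarker(predicted: Bool) -> AnchorEntity {
    let anchor = AnchorEntity(
        .hand(.left, location: .palm),
        trackingMode: predicted ? .predicted : .continuous
    )
    let marker = ModelEntity(
        mesh: .generateSphere(radius: 0.01),
        materials: [SimpleMaterial(color: .green, isMetallic: false)]
    )
    anchor.addChild(marker)
    return anchor
}
#endif
```

Exposing the mode as a parameter makes it easy to try both options and compare how the anchored content feels during fast hand movement.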

For a detailed overview of these features, you can refer to the sessions:

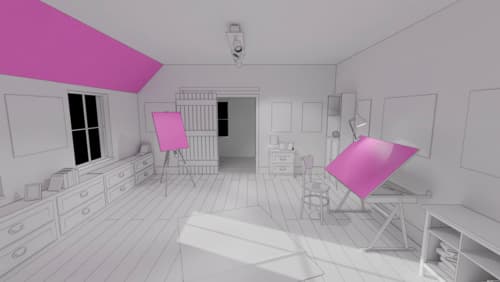

Build a spatial drawing app with RealityKit

Harness the power of RealityKit through the process of building a spatial drawing app. As you create an eye-catching spatial experience that integrates RealityKit with ARKit and SwiftUI, you’ll explore how resources work in RealityKit and how to use features like low-level mesh and texture APIs to achieve fast updates of the users’ brush strokes.

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.