AV Foundation and working with the camera

Asked on 2024-08-12


To work with the camera using AV Foundation and related frameworks, you can explore several WWDC sessions that cover different aspects of camera integration:

-

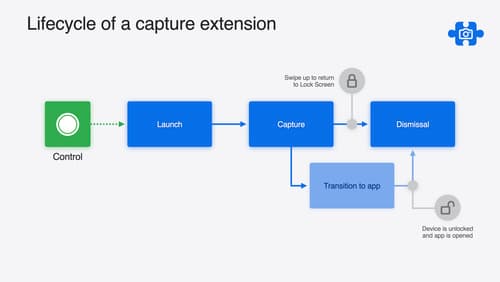

Build a great Lock Screen camera capture experience: This session introduces the Locked Camera Capture framework in iOS 18, which allows developers to create a camera capture experience directly accessible from the lock screen. It covers the lifecycle of a capture extension and how to handle permissions and integrate captured content back into your app. You can start exploring this session with the chapter on Great capture experiences.

-

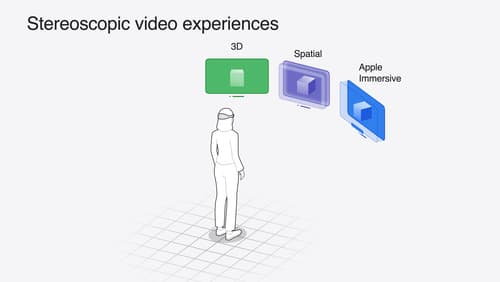

Build compelling spatial photo and video experiences: This session discusses how to use AV Foundation and other APIs to add spatial media capabilities to your apps. It explains how spatial video is recorded using the iPhone 15 Pro's camera setup and provides a deep dive into spatial media formats. You can start with the chapter on Tour of the new APIs.

-

Introducing enterprise APIs for visionOS: This session covers how to access the main camera feed and embed it in an app using visionOS. It includes setting up a camera frame provider and handling user authorization for camera access. You can begin with the chapter on Enhanced sensor access.

Together, these sessions show how to use camera capabilities in several contexts, from Lock Screen capture to spatial media and enterprise apps on visionOS.
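The enterprise visionOS session describes setting up a camera frame provider and requesting camera authorization. Here is a minimal Swift sketch of that flow, assuming visionOS 2 with an enterprise license and the main camera access entitlement already in place. The function name `streamMainCameraFrames(onFrame:)` and its Bool result are hypothetical; the ARKit calls follow the pattern shown in that session and are not a complete, production implementation.

```swift
import ARKit
import CoreVideo

/// Hypothetical helper: streams main camera frames to `onFrame`.
/// Assumes visionOS 2+, an enterprise license, and the main camera access entitlement.
/// Returns false if the feature is unavailable or access is denied, true once the stream ends.
func streamMainCameraFrames(onFrame: (CVPixelBuffer) -> Void) async throws -> Bool {
    // False where the provider is unavailable (for example, in the simulator).
    guard CameraFrameProvider.isSupported else { return false }

    // The session stays alive for as long as this function is iterating frames.
    let session = ARKitSession()

    // Ask the person for permission to use the main camera.
    let authorization = await session.requestAuthorization(for: [.cameraAccess])
    guard authorization[.cameraAccess] == .allowed else { return false }

    // Pick a video format for the main (left) camera.
    let formats = CameraVideoFormat.supportedVideoFormats(for: .main, cameraPositions: [.left])
    guard let format = formats.first else { return false }

    // Run the camera frame provider inside the ARKit session.
    let provider = CameraFrameProvider()
    try await session.run([provider])

    guard let updates = provider.cameraFrameUpdates(for: format) else { return false }
    for await frame in updates {
        // Each frame carries a sample per requested camera position; use the left one.
        guard let sample = frame.sample(for: .left) else { continue }
        onFrame(sample.pixelBuffer)
    }
    return true
}
```

In an app you would typically call this from a `Task` and hand each pixel buffer to a view model for display; the Bool result only exists to make the sketch's early exits visible.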

Build a great Lock Screen camera capture experience

Find out how the LockedCameraCapture API can help you bring your capture application’s most useful information directly to the Lock Screen. Examine the API’s features and functionality, learn how to get started creating a capture extension, and find out how that extension behaves when the device is locked.
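To make the capture-extension flow more concrete, here is a minimal Swift sketch of an iOS 18 capture extension, assuming the `LockedCameraCaptureExtension`, `LockedCameraCaptureUIScene`, and `LockedCameraCaptureSession` types as presented in the session. `ViewfinderView`, `captureFileURL(in:fileExtension:)`, and `saveCapture(_:to:)` are hypothetical names. The key idea is that the extension writes its captures into the session's `sessionContentURL`, which is where the main app can later retrieve them.

```swift
import ExtensionKit
import Foundation
import LockedCameraCapture
import SwiftUI

// Entry point of the capture extension. In the extension target, annotate this type with @main.
struct CaptureExtension: LockedCameraCaptureExtension {
    var body: some LockedCameraCaptureExtensionScene {
        LockedCameraCaptureUIScene { session in
            ViewfinderView(session: session)
        }
    }
}

// Hypothetical placeholder view: a real extension would show a live camera preview
// and call saveCapture(_:to:) when the shutter button is pressed.
struct ViewfinderView: View {
    let session: LockedCameraCaptureSession

    var body: some View {
        Text("Viewfinder")
    }
}

/// Hypothetical helper: builds a unique file URL for one capture inside `directory`.
func captureFileURL(in directory: URL, fileExtension: String = "heic") -> URL {
    directory
        .appendingPathComponent(UUID().uuidString)
        .appendingPathExtension(fileExtension)
}

/// Hypothetical helper: writes captured image data into the session's content directory
/// so the main app can pick it up later.
func saveCapture(_ data: Data, to session: LockedCameraCaptureSession) throws -> URL {
    let url = captureFileURL(in: session.sessionContentURL)
    try data.write(to: url, options: .atomic)
    return url
}
```

Keeping the file-naming logic in a plain function separates it from the framework types, so it can be checked without a device.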

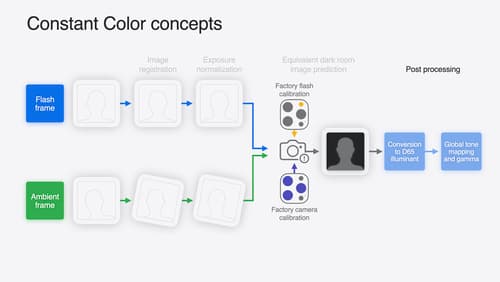

Keep colors consistent across captures

Meet the Constant Color API and find out how it can help people use your app to determine precise colors. You’ll learn how to adopt the API, explore its scientific and marketing potential, and discover best practices for making the most of the technology.
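Adopting Constant Color mostly comes down to opting in on the photo output and in the per-capture settings, then reading the confidence information delivered with each photo. The following Swift sketch assumes iOS 18 and the constant color properties on `AVCapturePhotoOutput`, `AVCapturePhotoSettings`, and `AVCapturePhoto`; the function and class names are hypothetical, and the output is assumed to already be attached to a configured capture session on a device with a flash.

```swift
import AVFoundation

/// Hypothetical helper: opts the photo output in to Constant Color when the device supports it.
/// Call after the output has been added to a configured AVCaptureSession.
@discardableResult
func enableConstantColorIfSupported(on output: AVCapturePhotoOutput) -> Bool {
    guard output.isConstantColorSupported else { return false }
    output.isConstantColorEnabled = true
    return true
}

/// Hypothetical helper: builds per-capture settings that request a Constant Color photo.
func makeConstantColorSettings(for output: AVCapturePhotoOutput) -> AVCapturePhotoSettings {
    let settings = AVCapturePhotoSettings()
    // Without output-level opt-in, return ordinary settings.
    guard output.isConstantColorEnabled else { return settings }
    settings.isConstantColorEnabled = true
    // Constant Color relies on the flash, so it must not be .off (this sketch assumes .auto).
    settings.flashMode = .auto
    // Also deliver a regular photo the app can fall back to if confidence is low.
    settings.isConstantColorFallbackPhotoDeliveryEnabled = true
    return settings
}

/// Hypothetical delegate that inspects the confidence information of each delivered photo.
final class ConstantColorPhotoDelegate: NSObject, AVCapturePhotoCaptureDelegate {
    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingPhoto photo: AVCapturePhoto,
                     error: Error?) {
        guard error == nil else { return }
        if photo.isConstantColorFallbackPhoto {
            print("Received fallback photo")
            return
        }
        // Single summary value, weighted toward the center of the frame.
        let meanConfidence = photo.constantColorCenterWeightedMeanConfidenceLevel
        // Optional per-pixel confidence map.
        let hasMap = photo.constantColorConfidenceMap != nil
        print("Constant color confidence: \(meanConfidence), map available: \(hasMap)")
    }
}
```

Whether to keep the Constant Color photo or the fallback is an app-specific decision; the confidence threshold you accept is a design choice, not something the API fixes.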

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.
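For the capture and detection topics above, the following Swift sketch configures spatial video recording on iPhone 15 Pro, assuming the iOS 18 AV Foundation properties `AVCaptureDevice.Format.isSpatialVideoCaptureSupported` and `AVCaptureMovieFileOutput.isSpatialVideoCaptureSupported` / `isSpatialVideoCaptureEnabled`. It also includes a small check, based on the `containsStereoMultiviewVideo` media characteristic, for whether an existing movie file is spatial. The function and error names are hypothetical, and error handling is reduced to the essentials.

```swift
import AVFoundation

/// Hypothetical errors for this sketch.
enum SpatialCaptureError: Error {
    case noDualWideCamera        // no Main + Ultra Wide camera pair
    case noSpatialFormat         // no device format supports spatial capture
    case cannotAddInputOrOutput  // the session rejected the input or output
    case outputUnsupported       // the movie output cannot record spatial video
}

/// Hypothetical helper: configures `session` to record spatial video into `movieOutput`.
func configureSpatialVideoCapture(session: AVCaptureSession,
                                  movieOutput: AVCaptureMovieFileOutput) throws {
    // Spatial video uses the Main and Ultra Wide cameras together.
    guard let device = AVCaptureDevice.default(.builtInDualWideCamera, for: .video, position: .back) else {
        throw SpatialCaptureError.noDualWideCamera
    }
    guard let format = device.formats.first(where: { $0.isSpatialVideoCaptureSupported }) else {
        throw SpatialCaptureError.noSpatialFormat
    }
    let input = try AVCaptureDeviceInput(device: device)

    do {
        session.beginConfiguration()
        defer { session.commitConfiguration() }
        guard session.canAddInput(input), session.canAddOutput(movieOutput) else {
            throw SpatialCaptureError.cannotAddInputOrOutput
        }
        session.addInput(input)
        session.addOutput(movieOutput)
        // Switch the camera to a format that supports spatial capture.
        try device.lockForConfiguration()
        device.activeFormat = format
        device.unlockForConfiguration()
    }

    // Enable spatial recording only after the configuration has been committed.
    guard movieOutput.isSpatialVideoCaptureSupported else {
        throw SpatialCaptureError.outputUnsupported
    }
    movieOutput.isSpatialVideoCaptureEnabled = true
}

/// Hypothetical helper: returns true if the movie at `url` carries stereo (MV-HEVC) video.
func isSpatialVideo(at url: URL) async throws -> Bool {
    let asset = AVURLAsset(url: url)
    let tracks = try await asset.loadTracks(withMediaCharacteristic: .containsStereoMultiviewVideo)
    return !tracks.isEmpty
}
```

Enabling spatial capture as the last step means a failure anywhere earlier leaves the output in its default, non-spatial state.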