How do I set up an ARKit app?

Asked on 2024-08-12


To set up an ARKit app, you can follow the guidance provided in the session titled "Build a spatial drawing app with RealityKit" from WWDC 2024. Here's a brief overview of the steps involved:

- Set Up Spatial Tracking: Begin by setting up spatial tracking so that your app can understand hand and environment data. This involves configuring hand anchors and other spatial tracking features to ensure your app can interact with the real world effectively.

- Build a User Interface: Develop a user interface that allows users to control elements like brushes and canvases. This UI can be built using SwiftUI and RealityKit to provide a seamless experience.

- Customize Visuals: Use RealityKit's capabilities to customize meshes, textures, and shaders, allowing for a polished visual design. This can include creating dynamic textures and spatial UI elements.

- Utilize New Features: Leverage new RealityKit APIs to enhance the app's functionality, such as generating brush geometry with Metal.

For a more detailed walkthrough, see the session "Build a spatial drawing app with RealityKit" (02:43), which covers setting up spatial tracking.
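As a rough illustration of the spatial tracking step, here is a minimal sketch that assumes a visionOS 2 target and an immersive space in which hand anchors are available. The view name `DrawingCanvasView` and the choice of the right index fingertip are placeholders for illustration, not names taken from the session.

```swift
import SwiftUI
import RealityKit

// Hypothetical view name; a sketch for visionOS 2 or later, not the session's exact code.
struct DrawingCanvasView: View {
    // Hold the session in view state so it stays alive while the view exists.
    @State private var trackingSession = SpatialTrackingSession()

    var body: some View {
        RealityView { content in
            // Request hand tracking. The system may ask the user for permission.
            let configuration = SpatialTrackingSession.Configuration(tracking: [.hand])
            let unapproved = await trackingSession.run(configuration)

            // `run` returns the capabilities that were not approved, or nil if all were.
            if let unapproved, unapproved.anchor.contains(.hand) {
                // Hand data was declined; skip the hand anchor and fall back to other input.
                return
            }

            // Anchor an entity to the right index fingertip (an arbitrary choice here).
            let indexTipAnchor = AnchorEntity(.hand(.right, location: .indexFingerTip))
            content.add(indexTipAnchor)
        }
    }
}
```

Entities added as children of `indexTipAnchor` follow the fingertip, which is one way a brush tip could be positioned. Handling the declined case explicitly matters because the user can refuse hand data for the app.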

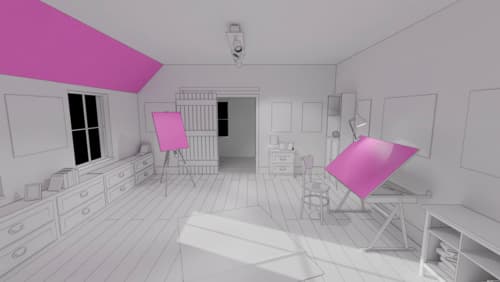

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.

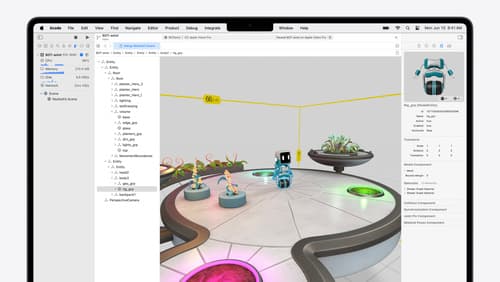

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.